New ASHRAE Criteria Put The Efficiency Drive To The Test

Data centers and their HVAC systems are mission-critical, energy-intensive infrastructure that runs 24 hours a day, seven days a week. They provide computing services that are critical to the everyday operations of the world’s most prestigious economic, scientific, and technological institutions. The energy used by these centers is expected to account for 3% of total global electricity consumption, with a 4.4% annual growth rate. This has a large economic, environmental, and performance impact, making cooling system energy efficiency one of the top priorities for data center designers, ahead of traditional concerns such as availability and security.

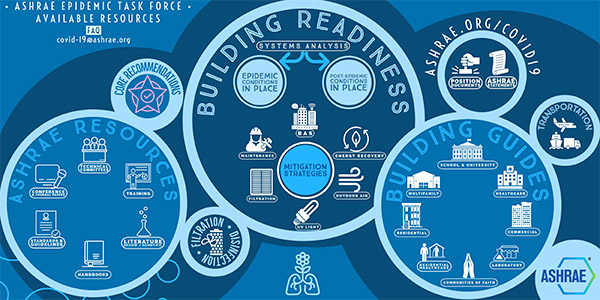

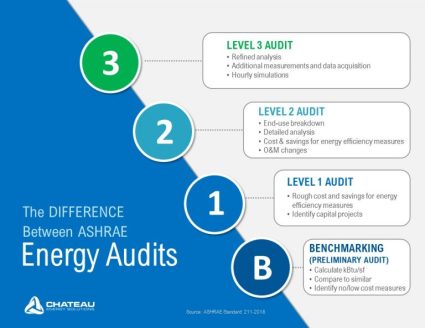

The Right Standards To Follow

New Energy Efficiency Standard ASHRAE 90.4

Data Center Efficient Design For Energy Consumption

- Environmental conditions are used to determine where data centers should be located.

- Infrastructure topology influences design decisions.

- Adapting the most effective cooling strategies.

- Air Conditioners And Air Handlers

- Hot Aisle/Cold Aisle

Hot/Cold Aisles Containment

Liquid Cooling

Green Cooling

The Capacity of High-Density IT Systems

ASHRAE For New Data Center Model

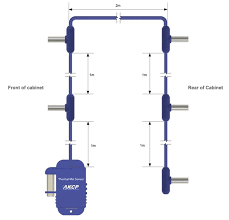

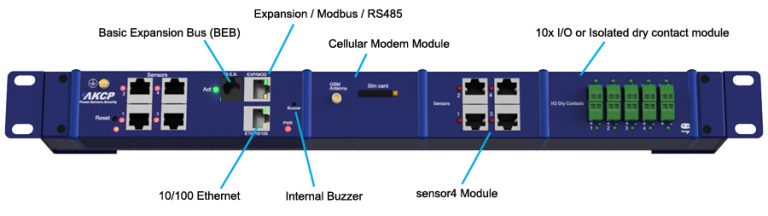

AKCP Monitoring The ASHRAE Standard

To monitor data centers and server rooms in line with the ASHRAE standards, the recommendation is three sensors per rack, mounted at the top, middle, and bottom of the rack to more effectively monitor the surrounding temperature levels.
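The three-position layout can be sketched in a few lines of Python. This is a minimal illustration, not an AKCP or ASHRAE API: the `RackInletReadings` class, the `check_rack` helper, and the alert threshold passed to it are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class RackInletReadings:
    """Temperatures in °C from the top, middle, and bottom sensors of one rack."""
    top: float
    middle: float
    bottom: float

    def hottest(self) -> tuple[str, float]:
        """Return the position and value of the warmest sensor."""
        readings = {"top": self.top, "middle": self.middle, "bottom": self.bottom}
        position = max(readings, key=readings.get)
        return position, readings[position]

    def vertical_spread(self) -> float:
        """Difference between the warmest and coolest sensor on the rack."""
        values = (self.top, self.middle, self.bottom)
        return max(values) - min(values)


def check_rack(readings: RackInletReadings, max_inlet_c: float) -> list[str]:
    """Return alert messages if the warmest sensor exceeds max_inlet_c.

    max_inlet_c is supplied by the caller; the article does not state a limit.
    """
    alerts = []
    position, value = readings.hottest()
    if value > max_inlet_c:
        alerts.append(
            f"{position} sensor at {value:.1f} °C exceeds {max_inlet_c:.1f} °C"
        )
    return alerts


# Example with made-up readings and an illustrative 27 °C threshold.
rack = RackInletReadings(top=28.0, middle=24.5, bottom=22.0)
alerts = check_rack(rack, max_inlet_c=27.0)
```

Tracking the vertical spread alongside the maximum is a design choice for this sketch: a large gap between the bottom and top sensors often matters as much as any single reading, which is the reason for placing sensors at three heights.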

For hot or cold aisles, a sensor at the back of the cabinet can provide valuable insight and help determine the most effective airflow containment strategy for each aisle. Finally, managers who want to further reduce downtime and improve efficiency should also track rack cabinet exhaust metrics, server temperatures, and internal temperatures. These readings can help response personnel address issues in real time, before they cause a costly outage.

As part of ASHRAE best practices, data center managers should also monitor humidity levels. Just as high temperatures can increase the risk of downtime, high humidity increases condensation and thus the risk of electrical shorts. Conversely, when humidity levels drop too low, data centers are more likely to experience electrostatic discharge (ESD). To mitigate these issues, ASHRAE best practices state that managers should avoid uncontrolled temperature increases that lead to excessive humidity levels. Humidity levels within server rooms and across the data center should stay between 40% and 60% relative humidity (RH). This range helps prevent ESD, lowers the risk of corrosion, and prolongs the life expectancy of equipment.
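The 40% to 60% RH band described above maps directly onto a simple check. The following is a minimal sketch; the function name `classify_humidity` and the returned labels are hypothetical, and only the two limits come from the text.

```python
def classify_humidity(rh_percent: float, low: float = 40.0, high: float = 60.0) -> str:
    """Classify a relative humidity reading against the 40-60% RH band.

    Below the band the main concern is electrostatic discharge (ESD);
    above it, condensation and electrical shorts.
    """
    if not 0.0 <= rh_percent <= 100.0:
        raise ValueError(f"relative humidity must be 0-100%, got {rh_percent}")
    if rh_percent < low:
        return "low: ESD risk"
    if rh_percent > high:
        return "high: condensation risk"
    return "ok"


# Example with made-up readings from three hypothetical server rooms.
readings = {"room-a": 35.0, "room-b": 52.0, "room-c": 71.5}
status = {room: classify_humidity(rh) for room, rh in readings.items()}
```

The limits are inclusive here, so readings of exactly 40% or 60% count as in range.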

AKCP Thermal Map Sensor

Thermal Maps are easy to install, come pre-wired, and are ready to mount with magnets, cable ties, or ultra-high bond adhesive tape to hold them in position on your cabinet. Mount the sensors on the front and rear doors of your perforated cabinet so they are exposed directly to the airflow in and out of the rack. On sealed cabinets, they can still be mounted on the inside to give the same monitoring of the temperature differential between front and rear, and to confirm that airflow is distributed across the cabinet.
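The front-to-rear temperature differential can be computed per sensor height once the readings are collected. This sketch assumes matching sensor positions on the front and rear of the cabinet, listed in the same order (for example top, middle, bottom); the function name `front_rear_delta` and the sample values are hypothetical and do not reflect any AKCP software interface.

```python
def front_rear_delta(front_c: list[float], rear_c: list[float]) -> list[float]:
    """Return rear minus front temperature (°C) for each sensor height.

    Both lists must be ordered the same way, e.g. [top, middle, bottom].
    """
    if len(front_c) != len(rear_c):
        raise ValueError("front and rear must have the same number of sensors")
    return [round(rear - front, 1) for front, rear in zip(front_c, rear_c)]


# Example with made-up readings, ordered top, middle, bottom.
front = [24.5, 23.0, 22.0]
rear = [38.0, 35.5, 33.0]
deltas = front_rear_delta(front, rear)
```

Comparing the deltas across heights gives a rough view of how evenly air is moving through the cabinet: one height with a much larger differential than the others suggests uneven airflow at that level.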

Monitor up to 16 cabinets from a single IP address. With a 16-sensor-port SPX+, you consume only 1U of rack space and save costs by having only one base unit. Thermal map sensors can be extended up to 15 meters from the SPX+.
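For planning purposes, the two limits above (16 cabinets per base unit and 15 meters of sensor extension) can be turned into a quick sizing check. This is a rough sketch that assumes one thermal map sensor, and therefore one sensor port, per cabinet; the function names and the cabinet labels are hypothetical.

```python
import math

CABINETS_PER_BASE_UNIT = 16   # one 16-sensor-port SPX+ per 16 cabinets
MAX_SENSOR_CABLE_M = 15.0     # maximum extension from the SPX+


def base_units_needed(cabinets: int) -> int:
    """Number of 16-port base units required, assuming one port per cabinet."""
    if cabinets < 0:
        raise ValueError("cabinets must be non-negative")
    return math.ceil(cabinets / CABINETS_PER_BASE_UNIT)


def cabinets_out_of_reach(cable_runs_m: dict[str, float]) -> list[str]:
    """Return the cabinets whose cable run exceeds the 15 m limit."""
    return [name for name, run in cable_runs_m.items() if run > MAX_SENSOR_CABLE_M]


# Example with a made-up room of 40 cabinets and three sample cable runs.
units = base_units_needed(40)
too_far = cabinets_out_of_reach({"A01": 4.0, "A09": 15.0, "B12": 18.5})
```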