One of the most important things to consider in running a data center is airflow management. Cooling air accounts for around 24% of the annual operating budget of a data center. In simple terms, for maximum efficiency, the servers should draw in cold supply air and exhaust it back to the CRAC units. However, this is not as easy as it sounds, and many data centers still struggle to achieve proper airflow.

In many cases, data center administrators think that adding more cooling will counter excessive heat. Running the cooling units at peak capacity, however, is often not a feasible solution: although it helps the data center cool down, it also drives up electricity bills. A well-considered airflow plan minimizes this cost and helps the data center operate at optimum efficiency.

Thinking about how to manage data center airflow? This article explains how.

The Ideal Data Center Airflow Management Plan

Photo Credit: searchdatacenter.techtarget.com

Data center airflow management planning is the process of clearing paths for hot and cold air and monitoring the temperatures in these regions. A primary concern is preventing hot and cold air from mixing, being stranded by obstructions, or bypassing the IT equipment. ASHRAE recommends inlet air temperatures in the range of 20°C (68°F) to 25°C (77°F). Maintaining inlet temperatures within this range creates optimal operating conditions for the equipment.
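As a minimal sketch, assuming readings arrive in degrees Celsius and using the 20°C to 25°C range quoted above, an inlet temperature check could look like this. The function names and the sample readings are hypothetical.

```python
# Recommended inlet range as quoted in this article (ASHRAE): 20-25 °C.
RECOMMENDED_MIN_C = 20.0
RECOMMENDED_MAX_C = 25.0


def c_to_f(celsius: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


def classify_inlet(temp_c: float) -> str:
    """Label a server inlet reading relative to the recommended range."""
    if temp_c < RECOMMENDED_MIN_C:
        return "too cold"
    if temp_c > RECOMMENDED_MAX_C:
        return "too hot"
    return "ok"


# Hypothetical readings from the top, middle, and bottom of one rack.
readings = {"top": 26.5, "middle": 23.0, "bottom": 19.5}
for position, temp in readings.items():
    print(f"{position}: {temp:.1f} °C ({c_to_f(temp):.1f} °F) -> {classify_inlet(temp)}")
```

Reading several heights on the same rack matters because hot exhaust that recirculates over the top of a row usually shows up first at the top sensor.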

The ideal data center airflow management plan segments the room into four major areas, called the 4 R’s of airflow management:

- Raised Floor – Perforated tiles are placed in the cold aisle. The standard size is 6″ x 18″; the tile is an aluminum or Lexan insert that distributes airflow from under the raised floor. Additionally, the openings where cables or pipes pass through the floor should be sealed. This ensures that supply air does not leak through these openings and comes out only in front of the servers.

- Racks – Servers should be mounted in the racks with just the right spacing for air circulation. Unused space in and between the racks should be filled with blanking panels. This prevents air from passing through the gaps and keeps hot and cold air from mixing.

- Rows – Racks should be arranged in rows according to the hot and cold aisle layout. Adding a containment system further improves this method. Row placement depends on the available space and the existing rows. Additionally, each row should contain no more than 10 racks.

- Room – Controlling the room temperature keeps the data center operating at full efficiency. Unregulated temperature puts the data center at risk of equipment failure, which can cause downtime costing the data center millions of dollars. Beyond that cost, severe downtime can force a business to shut down its operations entirely.
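The Racks and Rows guidelines above lend themselves to a simple layout check. This sketch assumes a hypothetical rack described as a list of `(bottom_u, height_u)` devices; it lists the empty rack units that need blanking panels and checks a row against the 10-rack limit quoted above.

```python
from typing import List, Tuple

MAX_RACKS_PER_ROW = 10  # guideline quoted in this article


def empty_units(rack_height_u: int, occupied: List[Tuple[int, int]]) -> List[int]:
    """Return rack unit positions (1-based) not covered by any installed device.

    Each device is given as (bottom_u, height_u). Every returned position is a
    gap that should be closed with a blanking panel.
    """
    used = set()
    for bottom, height in occupied:
        used.update(range(bottom, bottom + height))
    return [u for u in range(1, rack_height_u + 1) if u not in used]


def row_is_within_limit(racks_in_row: int) -> bool:
    """Check a row against the maximum-racks-per-row guideline."""
    return racks_in_row <= MAX_RACKS_PER_ROW


# Hypothetical 12U rack: 2U server at U1, 1U switch at U5, 4U storage shelf at U8.
gaps = empty_units(12, [(1, 2), (5, 1), (8, 4)])
print("Blanking panels needed at U:", gaps)      # [3, 4, 6, 7, 12]
print("Row of 12 racks within limit?", row_is_within_limit(12))  # False
```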

Hot and Cold Aisle Layout

Hot and Cold Aisle Layout is one of the earliest airflow management solutions in the industry. In this configuration, rows of racks are arranged so that the fronts of one row face the fronts of the next row across a cold aisle, and the backs face the backs of the following row across a hot aisle. Almost 80% of data centers use this layout today. However, it is not suitable for racks with solid Plexiglass or glass doors; perforated doors are recommended instead.
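The alternating layout can be expressed as a short sketch. Rows are listed in order, each facing either "north" or "south" (hypothetical labels). The aisle between two rows is cold when both fronts face it, hot when both backs face it, and mixed when one row's exhaust blows into the next row's intakes.

```python
from typing import List


def row_orientations(num_rows: int) -> List[str]:
    """Alternate the facing of successive rows (rows listed south to north)."""
    return ["north" if i % 2 == 0 else "south" for i in range(num_rows)]


def aisle_types(orientations: List[str]) -> List[str]:
    """Classify the aisle between each pair of adjacent rows.

    A row facing "north" has its front (air intake) toward the next row in the
    list; a row facing "south" has its front toward the previous row.
    """
    aisles = []
    for lower, upper in zip(orientations, orientations[1:]):
        if lower == "north" and upper == "south":
            aisles.append("cold")   # both rows draw intake air from this aisle
        elif lower == "south" and upper == "north":
            aisles.append("hot")    # both rows exhaust into this aisle
        else:
            aisles.append("mixed")  # one row exhausts into the other's intakes
    return aisles


print(aisle_types(row_orientations(4)))   # ['cold', 'hot', 'cold']
print(aisle_types(["north"] * 3))         # ['mixed', 'mixed']
```

Rows that all face the same way produce only mixed aisles, which is the failure mode the alternating layout is meant to avoid.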

Hot and Cold Aisle Containment

If the racks are arranged facing the same direction, the air temperature rises as it passes through the parallel rows, because the exhaust air from each row of racks is drawn into the “cool” air intakes of the next. Beyond improper airflow distribution, this also causes significant energy losses.
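To illustrate this temperature cascade, here is a toy model, not a validated thermal model. It assumes, hypothetically, that each row heats its air by a fixed `delta_t_c` and that a fixed `recirculation` fraction of each downstream row's intake air is the previous row's exhaust.

```python
from typing import List


def inlet_temperatures(supply_c: float, delta_t_c: float,
                       recirculation: float, num_rows: int) -> List[float]:
    """Inlet temperature of each row when all rows face the same direction.

    Toy model: the first row breathes pure supply air. Every later row draws a
    mix of supply air and the previous row's exhaust, where `recirculation` is
    the fraction of intake air that is exhaust. Each row heats its air by
    `delta_t_c`.
    """
    temps: List[float] = []
    previous_exhaust = None
    for _ in range(num_rows):
        if previous_exhaust is None:
            inlet = supply_c
        else:
            inlet = (1 - recirculation) * supply_c + recirculation * previous_exhaust
        temps.append(inlet)
        previous_exhaust = inlet + delta_t_c
    return temps


print(inlet_temperatures(20.0, 10.0, 0.5, 4))  # [20.0, 25.0, 27.5, 28.75]
```

With 20°C supply air, a 10°C rise per row, and 50% recirculation, the third and fourth rows already draw air above the 25°C upper limit quoted earlier.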

Retrofitting a data center into a hot and cold aisle layout requires careful planning, including a cost analysis. The data center may experience downtime during the process, which can cost up to $5,600 per minute for large data centers. Nonetheless, implementing this solution can deliver a timely return on investment (ROI).
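The trade-off between retrofit downtime and ROI is simple arithmetic. This sketch uses the $5,600-per-minute figure quoted above as a default; the project cost, outage length, and annual savings in the example are hypothetical, and a simple payback calculation ignores discounting and maintenance costs.

```python
DOWNTIME_COST_PER_MINUTE = 5_600  # USD; upper figure for large data centers quoted in this article


def downtime_cost(minutes: float, cost_per_minute: float = DOWNTIME_COST_PER_MINUTE) -> float:
    """Cost of an outage of the given length."""
    return minutes * cost_per_minute


def simple_payback_years(retrofit_cost: float, outage_minutes: float,
                         annual_savings: float) -> float:
    """Years until annual energy savings cover the retrofit plus its downtime cost."""
    if annual_savings <= 0:
        raise ValueError("annual_savings must be positive")
    return (retrofit_cost + downtime_cost(outage_minutes)) / annual_savings


# Hypothetical project: $152,000 retrofit, 30-minute outage, $80,000 saved per year.
print(downtime_cost(30))                            # 168000
print(simple_payback_years(152_000, 30, 80_000))    # 4.0
```

Even a short outage can be a large share of the total cost, which is why retrofits are usually scheduled to minimize downtime.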

Containment System

Another solution is converting the aisle layout into a containment system, which encloses either the hot or the cold aisle with a physical barrier. This further improves the delivery of air within the aisles, helps minimize temperature differences across server inlets, and mitigates the hotspots that are common in uncontained data centers.

There are two approaches to aisle containment. As the name suggests, hot aisle containment encloses the hot aisle. This approach is more expensive to implement but has proven more effective; companies that have implemented it report achieving ROI in as little as three years. The other approach, cold aisle containment, isolates the cold air using doors and ceiling panels. Existing data centers can be easily retrofitted to this design without heavy reconstruction.

Data centers that do not implement containment systems incur substantially higher utility costs. One recent study found that data centers without an effective containment system waste more than 50 percent of their energy on unnecessary consumption. Another study found that hot aisle containment saved up to 40% of annual cooling costs, resulting in a 13% reduction in annualized PUE.
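The link between cooling savings and PUE can be checked with a worked example. This sketch assumes a hypothetical annual energy breakdown in which cooling is 650 kWh out of 2,000 kWh total. In general, the relative PUE reduction equals the cooling savings fraction multiplied by cooling's share of total facility energy, so a 40% cooling saving yields a 13% PUE reduction when cooling is about a third (32.5%) of the total.

```python
def pue(it_kwh: float, cooling_kwh: float, other_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT energy."""
    return (it_kwh + cooling_kwh + other_kwh) / it_kwh


def pue_after_cooling_savings(it_kwh: float, cooling_kwh: float,
                              other_kwh: float, savings_fraction: float) -> float:
    """PUE after cutting cooling energy by `savings_fraction` (0.4 = 40%)."""
    return pue(it_kwh, cooling_kwh * (1 - savings_fraction), other_kwh)


# Hypothetical annual breakdown (kWh): IT 1000, cooling 650, other overhead 350.
before = pue(1000, 650, 350)
after = pue_after_cooling_savings(1000, 650, 350, 0.40)
reduction = (before - after) / before
print(f"PUE {before:.2f} -> {after:.2f} ({reduction:.0%} lower)")
# PUE 2.00 -> 1.74 (13% lower)
```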

Photo Credit: www.upsite.com

Using Economizer/ Free Cooling

With rising energy costs, data centers have started to adopt free cooling as part of their strategy. Free cooling uses cool outdoor air or water to lower the air temperature. Its main advantage is clear: data centers obtain much of the benefit of powered cooling units while using far less energy. Although it works only in suitable climates, it is a very energy-efficient form of data center cooling.

Water-filled cooling coils absorb the heat exhausted by the servers. Outside air is filtered and dehumidified so that the HVAC system can supply fresh, cooler air, which the servers then draw in again for cooling. Some data centers also deploy cooling towers, which use evaporation to move excess heat to the outside atmosphere. Another option is to circulate cold water from local rivers, lakes, or oceans through the data center to cool it.
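Whether free cooling is available at a given moment depends mainly on outdoor conditions. This sketch picks a cooling mode from outdoor temperature alone. The 3°C heat-exchanger approach, the 5°C partial band, and the function names are hypothetical, and a real economizer controller would also consider humidity and air quality.

```python
from typing import Sequence


def cooling_mode(outdoor_c: float, supply_setpoint_c: float,
                 approach_c: float = 3.0, partial_band_c: float = 5.0) -> str:
    """Choose a cooling mode from outdoor temperature alone (hypothetical thresholds).

    `approach_c` is the temperature lost across the heat exchanger: outdoor air or
    water can cool the supply only to roughly outdoor temperature plus approach.
    """
    achievable = outdoor_c + approach_c
    if achievable <= supply_setpoint_c:
        return "free"          # outdoor air/water alone can meet the setpoint
    if achievable <= supply_setpoint_c + partial_band_c:
        return "partial"       # free cooling assists, mechanical cooling trims
    return "mechanical"        # too warm outside; powered cooling carries the load


def free_cooling_share(hourly_outdoor_c: Sequence[float], supply_setpoint_c: float) -> float:
    """Fraction of hours in which full free cooling would be available."""
    modes = [cooling_mode(t, supply_setpoint_c) for t in hourly_outdoor_c]
    return modes.count("free") / len(modes)


print(cooling_mode(10.0, 20.0), cooling_mode(20.0, 20.0), cooling_mode(30.0, 20.0))
# free partial mechanical
print(free_cooling_share([10.0, 20.0, 30.0, 15.0], 20.0))  # 0.5
```

Running `free_cooling_share` over a year of hourly weather data is one rough way to judge whether a site's climate suits free cooling.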

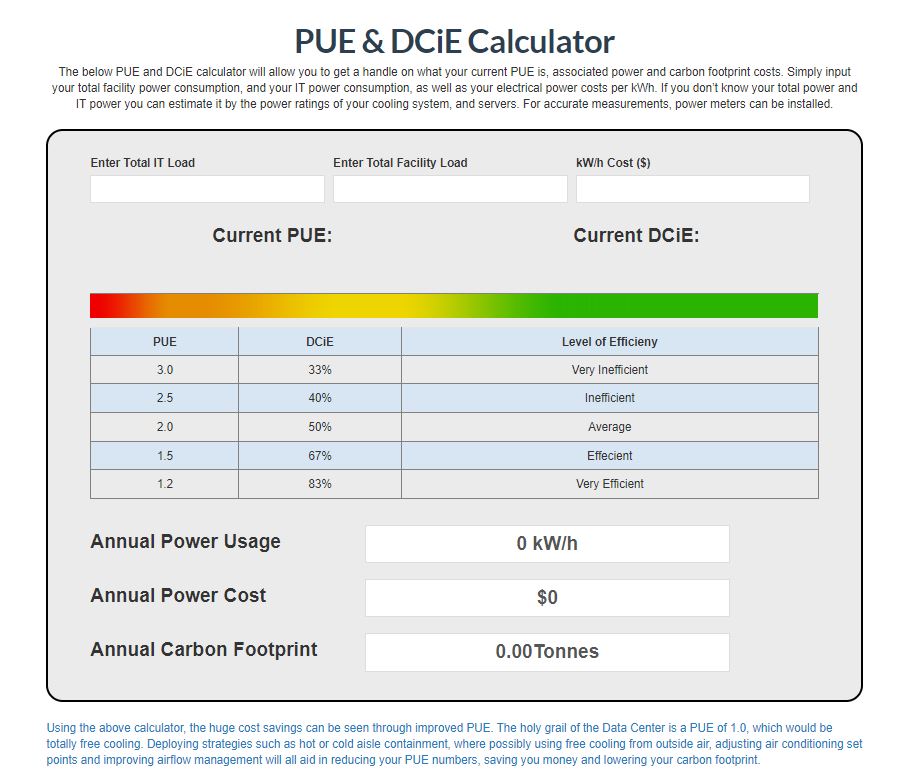

Measuring the PUE

The cooling efficiency of a data center can be assessed by measuring its Power Usage Effectiveness (PUE). PUE compares the total energy entering the data center with the energy used by the IT equipment. This total includes electricity, natural gas, fuel, and the water and air used in free cooling.

PUE can be calculated using the formula:

PUE = Total Facility Energy / IT Equipment Energy

An ideal PUE is 1.0, while a PUE between 2.0 and 3.0 indicates an inefficient data center. Industry leaders such as Google and Microsoft operate data centers with a PUE of 1.2. Facebook surpassed this with a PUE of 1.07 at its Open Compute flagship data center in Prineville, Oregon. By contrast, the remaining data centers run at an average PUE of 2.5.
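The formula and benchmarks above translate directly into code. This sketch computes PUE from metered energy (total facility energy includes the IT load) and labels the result using only the reference points quoted in this article; the label wording and the meter readings are hypothetical.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """PUE = total facility energy / IT equipment energy (same units, same period)."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def rate_pue(value: float) -> str:
    """Label a PUE using only the reference points quoted in this article."""
    if value < 1.0:
        raise ValueError("PUE cannot be below 1.0; check the meter data")
    if value == 1.0:
        return "ideal"
    if value <= 1.2:
        return "on par with industry leaders"
    if value < 2.0:
        return "between industry leaders and the inefficient range"
    return "inefficient"


# Hypothetical monthly meter readings in kWh.
value = pue(total_facility_kwh=250_000, it_equipment_kwh=100_000)
print(value, rate_pue(value))   # 2.5 inefficient
```

Both energy figures must cover the same period; mixing an instantaneous IT reading with a monthly facility total gives a meaningless ratio.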

Computational Fluid Dynamics

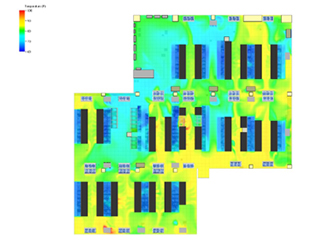

Computational Fluid Dynamics (CFD) software helps administrators manage data center airflow. Its accuracy as an analytical model has grown with ever-higher computational capacity and speed. Results are visualized as 3D graphics, which makes them easier to interpret.

The first step in implementing a cooling strategy is identifying the root causes of the problems. CFD provides a detailed simulation of airflow patterns and differential pressure to pinpoint the key problems in existing data centers. It can also model proposed configurations before they are built, which makes it useful for understanding the impact of changes; necessary adjustments can then be made before actual implementation. AKCP sensorCFD™ is an improvement on traditional CFD. Instead of using arbitrary figures for rack power, heat, and airflow, we use real sensor data to constrain the CFD model.
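The idea of constraining a model with measured values can be illustrated on a much smaller scale. The sketch below is not CFD and is not how sensorCFD works: it ignores air velocity and pressure entirely. It estimates a 2D temperature field by Jacobi iteration of the discrete Laplace equation, holding the cells that contain (hypothetical) sensors fixed at their measured values so the estimate never contradicts a reading.

```python
from typing import Dict, List, Tuple

Grid = List[List[float]]


def relax_with_sensors(rows: int, cols: int,
                       sensors: Dict[Tuple[int, int], float],
                       iterations: int = 500) -> Grid:
    """Estimate a temperature field that agrees exactly with the sensor cells.

    Free cells are repeatedly set to the mean of their in-grid neighbours
    (Jacobi iteration for the discrete Laplace equation); sensor cells never change.
    """
    if not sensors:
        raise ValueError("at least one sensor reading is required")
    start = sum(sensors.values()) / len(sensors)
    grid = [[start] * cols for _ in range(rows)]
    for (r, c), value in sensors.items():
        grid[r][c] = value
    for _ in range(iterations):
        new = [row[:] for row in grid]
        for r in range(rows):
            for c in range(cols):
                if (r, c) in sensors:
                    continue
                neighbours = [grid[nr][nc]
                              for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                              if 0 <= nr < rows and 0 <= nc < cols]
                new[r][c] = sum(neighbours) / len(neighbours)
        grid = new
    return grid


# Hypothetical 1 x 5 strip with sensors at both ends.
field = relax_with_sensors(1, 5, {(0, 0): 20.0, (0, 4): 30.0})
print([round(t, 2) for t in field[0]])   # [20.0, 22.5, 25.0, 27.5, 30.0]
```

The more sensor cells are pinned, the less the estimate depends on the smoothing assumption, which is the general motivation for feeding real measurements into a model.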

Airflow Monitoring in Data Center

Airflow helps control static electricity and dust build-up on computer fans, both of which can cause equipment crashes. Airflow is one of the most important factors in cooling your data center, and air conditioning alone is not enough to protect it. For example, airflow sensors can detect the cooling air arriving through a floor-located conduit, confirming that equipment such as networking cables is not blocking the conduit and choking off the cold-air supply. Airflow sensors can also be deployed to ensure that hot air returns are similarly free from obstruction.

AKCP Airflow Sensors

The AKCP airflow sensor helps ensure that the system runs at peak efficiency and notifies you immediately of any drop in airflow. Larger rooms often require additional sensor information to identify potential problems with cooling efficiency. Monitoring airflow is important for gauging the overall health of the environment.

The AKCP airflow sensor is designed for systems that generate heat during operation and rely on a steady flow of air to dissipate it. If this cooling airflow stops, system reliability and safety can be jeopardized.

The airflow sensor is placed in the path of the air stream, where the user can monitor the status of the flowing air. It is not a precision measuring instrument; it is meant to detect the presence or absence of airflow.
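Because the sensor reports only the presence or absence of airflow, a monitoring script mainly needs to detect state changes reliably. This sketch assumes boolean samples taken at a fixed interval and reports a change only after a hypothetical `confirm` number of consecutive samples agree, so a single noisy reading does not trigger a notification. It does not use any AKCP API.

```python
from typing import Iterable, List, Tuple


def airflow_alerts(samples: Iterable[bool], confirm: int = 3) -> List[Tuple[int, str]]:
    """Turn raw airflow present/absent samples into confirmed state changes.

    Returns (sample_index, message) for each change, reported at the sample
    where `confirm` consecutive readings have disagreed with the current state.
    """
    events: List[Tuple[int, str]] = []
    state = True   # assume airflow is present at start-up
    streak = 0     # consecutive samples disagreeing with `state`
    for index, present in enumerate(samples):
        if present == state:
            streak = 0
            continue
        streak += 1
        if streak >= confirm:
            state = present
            streak = 0
            events.append((index, "airflow restored" if present else "airflow lost"))
    return events


print(airflow_alerts([True, True, False, True, False, False, False, True, True, True]))
# [(6, 'airflow lost'), (9, 'airflow restored')]
```

A larger `confirm` value suppresses more false alarms at the cost of a slower notification.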

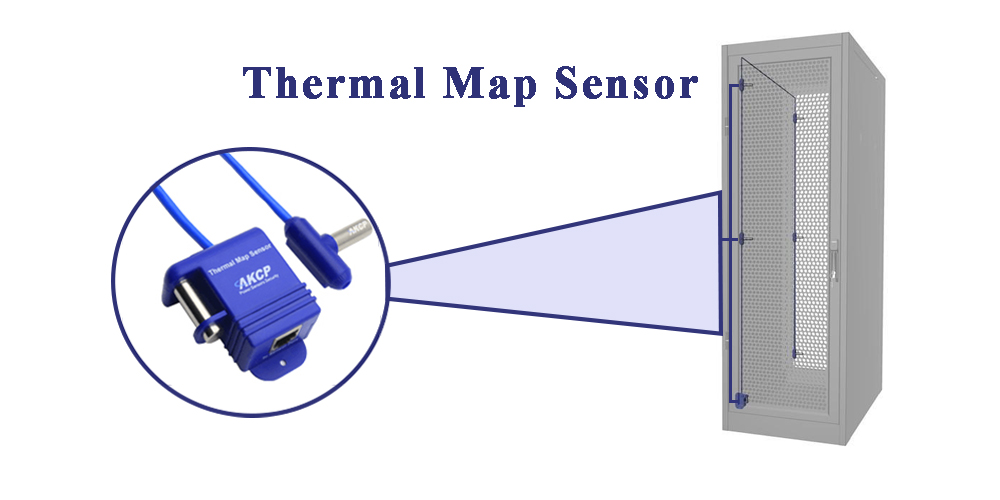

Temperature Monitoring With Thermal Map Sensors

Obstructions within the cabinet

Cabling or other obstructions can impede airflow, causing high temperature differentials between the inlet and outlet. The cabinet analysis sensor with pressure differential can also help analyze airflow issues.

Server and cooling fan failures

As fans age or fail, airflow over the IT equipment decreases. This leads to higher temperature differentials between the front and rear.

Insufficient pressure differential to pull air through the cabinet

When the pressure differential between the front and rear of the cabinet is insufficient, less air flows through it. The less cold air flowing through the cabinet, the larger the front-to-rear temperature differential becomes.

AKCP Thermal Map Sensor
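All three causes above show up the same way: a wider front-to-rear temperature differential. This sketch compares each cabinet's current differential with its own baseline; the baseline, the 3°C tolerance, and the class and function names are hypothetical, and it cannot tell which of the three causes is responsible.

```python
from dataclasses import dataclass


@dataclass
class CabinetReading:
    front_c: float  # average inlet (front) temperature, °C
    rear_c: float   # average exhaust (rear) temperature, °C


def delta_t(reading: CabinetReading) -> float:
    """Front-to-rear temperature differential in °C."""
    return reading.rear_c - reading.front_c


def check_cabinet(reading: CabinetReading, baseline_delta_c: float,
                  tolerance_c: float = 3.0) -> str:
    """Compare the current differential with this cabinet's normal (baseline) value."""
    rise = delta_t(reading) - baseline_delta_c
    if rise > tolerance_c:
        # Obstructions, ageing or failed fans, and low pressure differential
        # all reduce airflow and widen the front-to-rear differential.
        return "investigate airflow"
    return "normal"


print(check_cabinet(CabinetReading(front_c=22.0, rear_c=38.0), baseline_delta_c=12.0))  # investigate airflow
print(check_cabinet(CabinetReading(front_c=22.0, rear_c=35.0), baseline_delta_c=12.0))  # normal
```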

Power Usage Effectiveness (PUE)

When this data is combined with power consumption readings from an in-line power meter, you can safely adjust the data center cooling systems without compromising your equipment, while seeing the resulting changes in your PUE immediately.

Sensors designed to support ASHRAE temperature and humidity controls can be installed within the rack at the top, middle, and bottom. Another sensor can be installed at the back of the rack to monitor containment, and this configuration can be repeated on every fourth rack.

Thermal map sensors connect to AKCP sensorProbe+ base units. Because the sensor cable can be extended up to a maximum length of 15 meters, you can monitor multiple cabinets from a single IP address. Up to 16 thermal maps can be connected to a single SPX+.
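The placement rule and the 16-per-unit limit above can be turned into a rough planning aid. This sketch assumes, hypothetically, that every fourth rack starting from rack 1 is instrumented and that each instrumented rack uses one thermal map. It ignores the 15-meter cable limit, which depends on the physical layout.

```python
import math
from typing import List, Tuple

MAX_THERMAL_MAPS_PER_BASE_UNIT = 16       # figure quoted in this article
POSITIONS = ("top", "middle", "bottom", "back")


def placement_plan(num_racks: int, every: int = 4) -> List[Tuple[int, str]]:
    """(rack_number, position) pairs for every `every`-th rack, starting at rack 1."""
    return [(rack, position)
            for rack in range(1, num_racks + 1, every)
            for position in POSITIONS]


def base_units_needed(num_thermal_maps: int) -> int:
    """Base units required if each unit accepts up to 16 thermal maps."""
    return math.ceil(num_thermal_maps / MAX_THERMAL_MAPS_PER_BASE_UNIT)


plan = placement_plan(40)
instrumented = sorted({rack for rack, _ in plan})
print(instrumented)                          # [1, 5, 9, 13, 17, 21, 25, 29, 33, 37]
print(base_units_needed(len(instrumented)))  # 1 (assuming one thermal map per instrumented rack)
```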

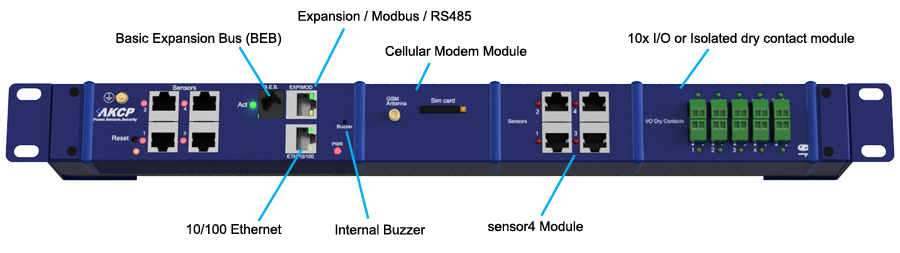

sensorProbeX+

sensorProbeX+ is the latest generation of sensorProbe devices, in a form factor that allows for 1U, 0U, and DIN rail mounting. Its low-profile design is economical on cabinet space. sensorProbeX+ comes in several standard configurations or can be customized by choosing from a variety of modules such as dry contact inputs, I/Os, an internal modem, analog-to-digital converters, an internal UPS, and additional sensor ports.

AKCP SensorProbeX+

- Every sensorProbeX+ is equipped with Ethernet, Modbus RS485, EXP, and BEB communications.

- Compatible with sensorProbeX+ EXP and BEB units to expand the capabilities of your device.

- 1U rackmount brackets, tool-less 0U mounting, or DIN rail mounting options.

- Notification by SNMP, Email, SMS (requires optional cellular modem), built-in buzzer.

- Compatible with a wide range of AKCP Intelligent sensors.

- Start with base configuration and build up your device with the modules you need.

- Up to 80 virtual sensors.

Conclusion

As IT loads rise every year, leaving a traditional data center unchanged is no longer practical. Over the years, several solutions have been introduced to manage airflow effectively. Which one to implement depends on the data center's design, its goals, and the available budget.

The goal of any data center airflow management plan is the same: ensuring proper air temperature and airflow distribution. Knowing these parameters helps assess the effectiveness of the plan. Environmental sensors are connected to the monitoring system to capture real-time data. These numbers are the variables used in computing the PUE, and CFD can build an airflow model from the same data. An automated system sends an alarm if any parameter rises above or falls below its intended range. This allows administrators to take action before the critical space is damaged.
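Automated range alarms like the one described above are commonly implemented with hysteresis: an alarm raised when a value leaves its range is cleared only once the value is comfortably back inside, which stops alarms from flapping around a threshold. This sketch is a generic illustration, not the monitoring system's actual logic; the `Threshold` values and the 0.5 dead band are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Threshold:
    low: float
    high: float
    hysteresis: float = 0.5   # hypothetical dead band used when clearing an alarm


class RangeAlarm:
    """Raise an alarm when a value leaves [low, high]; clear it only once the
    value is back inside the range by at least `hysteresis`."""

    def __init__(self, threshold: Threshold) -> None:
        self.t = threshold
        self.active = False

    def update(self, value: float) -> Optional[str]:
        """Return "ALARM" or "CLEAR" on a state change, otherwise None."""
        t = self.t
        if not self.active and (value < t.low or value > t.high):
            self.active = True
            return "ALARM"
        if self.active and t.low + t.hysteresis <= value <= t.high - t.hysteresis:
            self.active = False
            return "CLEAR"
        return None


inlet_alarm = RangeAlarm(Threshold(low=20.0, high=25.0))
print([inlet_alarm.update(v) for v in [24.0, 25.5, 24.8, 24.4, 26.0]])
# [None, 'ALARM', None, 'CLEAR', 'ALARM']
```

At 24.8°C the alarm stays active even though the value is inside the range, because it has not yet dropped below the 24.5°C clear point.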

Start investing: message AKCP now.
