Higher density and new workloads such as AI and neural networks are forcing a rethink of data center cooling. Many in the industry are grappling with how to increase density and use space efficiently while scaling vital resources. For a long time, air cooling has dominated the data center industry. However, liquid cooling is now redefining next-generation computing capabilities.

Introduction

When we look at the data center landscape, we can see that a lot has changed. If 2020, the COVID pandemic year, has taught us anything, it is that our digital infrastructure is more important than ever. Cloud computing, big data, IT infrastructure, power, and cooling efficiency all face new demands. More users, more data, and a greater reliance on the data center are the driving forces. With cloud technologies and the rapid growth of data leading the way in many technology categories, working with the right data center optimization solutions has become more important than ever.

This is where liquid cooling comes into play. More applications for the technology have been discovered, and real-world use cases are influencing the way we deploy servers and other technologies. These use cases cover everything from liquid-cooled GPUs to all-in-one systems, including storage and network architecture with built-in liquid cooling.

The most exciting aspect is that we are witnessing an increase in the number of liquid cooling deployments. Gartner predicts that annual power expenses will rise by at least 10%, driven by the rising cost per kilowatt-hour (kWh) and underlying demand, particularly for high-power-density servers. Liquid cooling can help offset these increased power costs.

Ensuring that your data center has the best and most efficient means of cooling should be a top priority. Computers and cooling systems account for the majority of direct electricity use in data centers.

Some of the world’s largest data centers operate tens of thousands of IT devices that need more than 100 megawatts (MW) of power – enough to power over 80,000 American homes.
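As a rough sanity check on that comparison, the arithmetic can be sketched in a few lines of Python. The two input figures are the ones quoted above; the function name is purely illustrative, and the result is an implied average rather than a measured value.

```python
def implied_kw_per_home(facility_mw: float, homes: int) -> float:
    """Average power per home implied by a facility-to-homes comparison."""
    return facility_mw * 1000 / homes  # convert MW to kW, then split across homes


kw = implied_kw_per_home(100, 80_000)
kwh_per_year = kw * 24 * 365  # continuous draw over one non-leap year
print(f"{kw:.2f} kW per home, about {kwh_per_year:,.0f} kWh per year")
```

In other words, the comparison assumes each home draws a little over one kilowatt on average around the clock.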

All of this means that the way we optimize and cool our data centers must change and evolve.

Cooling An Entire Digital Infrastructure

Liquid cooling is a technology that has been around for a long time. It was not, however, widely deployed. Rising investments in high-density technology and high-performance computing are making it an increasingly viable solution. Furthermore, as the amount of data generated grows, so does the demand for data centers and energy. Data centers used 416.2 terawatt-hours of energy in 2016, accounting for 3% of global energy usage and 40% more than the United Kingdom as a whole. This consumption is predicted to double every four years.
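To see what doubling every four years would mean in practice, here is a minimal sketch that extrapolates from the 2016 figure quoted above. It is a naive projection of that stated prediction, not a forecast; the function name and the chosen years are illustrative assumptions.

```python
def projected_twh(base_twh: float, base_year: int, year: int,
                  doubling_years: float = 4.0) -> float:
    """Consumption in `year` if usage doubles every `doubling_years` years."""
    return base_twh * 2 ** ((year - base_year) / doubling_years)


# 416.2 TWh in 2016 is the figure quoted in the text; the years are examples.
for year in (2016, 2020, 2024):
    print(year, round(projected_twh(416.2, 2016, year), 1), "TWh")
```

Under this assumption consumption would quadruple within eight years, which is why efficiency gains in cooling matter so much.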

According to an analysis from Technavio, liquid-based cooling is being adopted because it is more efficient than air-based cooling. Water-based cooling, a subset of liquid-based cooling, is the most commonly used cooling technology. The worldwide data center cooling market for liquid-based cooling was predicted to grow by over 16% through 2020.

According to Stratistics MRC, the global data center liquid cooling market was valued at $0.64 billion in 2015. From 2015 to 2022, it is predicted to grow at a CAGR of 27.7%, reaching $3.56 billion. The increasing need for environmentally friendly solutions, as well as the increasing density of server racks, is driving market expansion.
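The relationship between those three figures can be checked with the standard compound annual growth rate (CAGR) formula. The sketch below uses only the numbers quoted above; the helper names are illustrative.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between a start and an end value."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `start` at `rate` for `years` years."""
    return start * (1 + rate) ** years


years = 2022 - 2015
implied = cagr(0.64, 3.56, years)       # rate implied by the two market sizes
projected = project(0.64, 0.277, years)  # 2022 size implied by the quoted rate
print(f"implied CAGR: {implied:.1%}")
print(f"projected 2022 value: ${projected:.2f} billion")
```

The implied rate lands very close to the quoted 27.7%, so the start value, end value, and growth rate are consistent to within rounding.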

What Does This Imply For You?

Let’s take a look at the current state of data center cooling and how liquid cooling affects data center design. To begin, you’ll notice that today’s liquid cooling methods are different from those of the past, making adoption far more realistic.

Liquid Cooling Trends

Liquid cooling concepts are not new. There is a common misconception in the digital infrastructure business that liquid cooling for servers emerged only in the last decade.

Liquid cooling was employed in mainframe computers between 1970 and 1995. Then, in the 1990s, gaming and custom-built PCs began to use liquid cooling to meet high-end performance demands. Between 2005 and 2010, chilled doors were used to provide liquid cooling inside the data center. From 2010 onward, liquid cooling has been used in high-performance computing (HPC) environments in the form of direct-contact and total-immersion technologies.

Because liquid cooling is gaining popularity as a way to support modern systems, it’s critical to examine the major industry trends affecting overall cooling solutions. Over the last few years, data center technologies have received a lot of attention, and hyperscale and colocation providers are aware that data center design is changing.

The Game Changer

Specific adjustments in modernization initiatives within the data center impact how we build tomorrow’s digital infrastructure. Consider the following major developments:

Cooling

Convergence, edge computing, supercomputers, and high-performance computing are all putting extra strain on cooling capacity. According to a Markets and Markets study, the airflow management market was worth USD 419.8 million in 2016 and is predicted to grow to USD 807.3 million by 2023, with a CAGR of 9.24% between 2017 and 2023. The growing desire for lower OPEX, more cooling capacity, improved IT equipment reliability, and greener data centers is driving the airflow management industry.

The liquid cooling business is being shaped by increasing computational needs. As previously stated, the global data center liquid cooling market was valued at $0.64 billion in 2015 and is predicted to reach $3.56 billion by 2022. The growing need for environmentally friendly solutions, as well as increasing rack density, is driving the market forward.

Power

Technology and business leaders are encouraged to supply more technology solutions while maintaining efficiency levels. The problem is that data center electricity usage continues to rise around the world. According to a recent US Department of Energy analysis, data centers in the United States are expected to need more energy in the future, based on current trend predictions. Since the year 2000, this trend has been progressively increasing.

More enterprises are leveraging data center colocations and even the cloud to satisfy their expanding demands, according to recent trends. Energy efficiency and data center management are critical planning and architectural factors in this process. To begin with, you want your solutions to be cost-effective and growth-oriented. Second, you’re attempting to reduce management overhead while increasing infrastructure efficiency.

Several factors suggest that more enterprises will move their environments to a data center, whether that is an enterprise facility, a colocation facility, or the cloud. For a variety of reasons, energy efficiency and data center management will continue to be important considerations. Data center managers are attempting not only to reduce expenses but also to reduce administrative problems and increase infrastructure efficiency. Liquid cooling offers a technology worth investigating and adopting to deliver the infrastructure efficiency these initiatives need.

Cloud

The 2021 AFCOM State of the Data Center Report found that 58% of respondents reported organizations moving workloads from the public cloud to colocation or private data centers. It’s important to note that the cloud isn’t going anywhere. However, there are still real concerns about how enterprises want to use cloud computing, so much so that a new role has been created to deal with cloud costs and ‘sticker shock.’

According to a recent blog, the huge cost reductions realized by switching from up-front CapEx investments in information technology to a subscription model are being muddled by rising monthly fees for services that no one can trace to where or when they are used. FinOps emerged as a new technology and operational discipline in response. People in this field use tools and innovative approaches to track and measure the costs and benefits of cloud computing and to cut unnecessary spending. The opinions of FinOps practitioners provide a good picture of what lies ahead in the cloud.

As noted above, 58% of survey respondents saw workloads repatriated from the cloud to on-premises data centers or colocation facilities this year. This shows that most companies are still figuring out what belongs in colocation and what belongs in the cloud. The good news is that these moves benefit everyone involved. Workloads that belong in the cloud will be better served there, while dedicated resources that are costly in the cloud will be brought in-house. This means that more companies are relying on their own data centers or data center partners to host apps, data sets, and even new types of workloads that previously resided in the cloud.

Supporting IT Developments

It’s crucial to remember that data centers are responsible for supporting new and developing use cases. Colocation and hyperscale providers now support advanced solutions in addition to typical converged, hyper-converged, cloud, and virtualization systems. High-performance computing (HPC), for example, is used for research and data analysis, and these systems are being deployed within the confines of traditional data centers. Cooling HPC systems necessitates a different method. In 2018, data center leaders at the National Renewable Energy Laboratory’s (NREL) Energy Systems Integration Facility (ESIF) implemented a fixed cold plate, liquid-cooled rack solution for HPC clusters as part of a partnership with Sandia National Laboratories.

This revolutionary fixed cold plate, warm-water cooling technology, paired with a manifold design, makes service nodes easy to access and can reduce the need for auxiliary server fans. For the first installation and evaluation, Sandia National Laboratories chose NREL’s HPC Data Center. The data center is liquid-cooled and equipped with the instruments needed to monitor flow and temperature changes during testing. To support the initiative, the deployment focused on three essential components of data center sustainability:

- Cool the information technology equipment using direct, component-level liquid cooling with a power usage effectiveness design target of 1.06 or better;

- Capture and reuse the waste heat produced; and

- Cut the water used as part of the cooling process.

There is no compressor-based cooling system for NREL’s HPC data center. Cooling liquid is supplied from cooling towers.
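The first goal above refers to power usage effectiveness (PUE), which is commonly defined as total facility power divided by the power delivered to IT equipment. The minimal Python sketch below shows what a 1.06 target implies. The load figures are hypothetical sample values, not NREL measurements, and the function names are illustrative.

```python
def pue(total_facility_kw: float, it_load_kw: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_load_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_load_kw


def max_overhead_kw(it_load_kw: float, target_pue: float = 1.06) -> float:
    """Largest non-IT load (cooling, power distribution) that meets the target."""
    return it_load_kw * (target_pue - 1)


it_kw = 1000.0       # hypothetical IT load
overhead_kw = 55.0   # hypothetical cooling and power-distribution load
value = pue(it_kw + overhead_kw, it_kw)
print(f"PUE = {value:.3f}, meets 1.06 target: {value <= 1.06}")
print(f"overhead budget at 1.06: {max_overhead_kw(it_kw):.0f} kW")
```

Put differently, a PUE of 1.06 leaves only about 6% of the IT load as the budget for everything that is not computing, which is hard to reach with compressor-based cooling.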

HPC isn’t the only place where liquid-cooled data centers and specific use-cases are being developed. New liquid-cooled systems are being investigated to meet emerging requirements in machine learning, financial services, healthcare, CAD modeling and rendering, and even gaming, to preserve sustainability, reliability, and the highest levels of density.

AKCP Supports The Evolution Of Data Center Cooling

In today’s data center, monitoring and alarms are required for any system. AKCP Monitoring Solutions offers monitoring and alarms for temperature, flow, pressure, and leak detection, as well as the ability to report into data center management software suites.

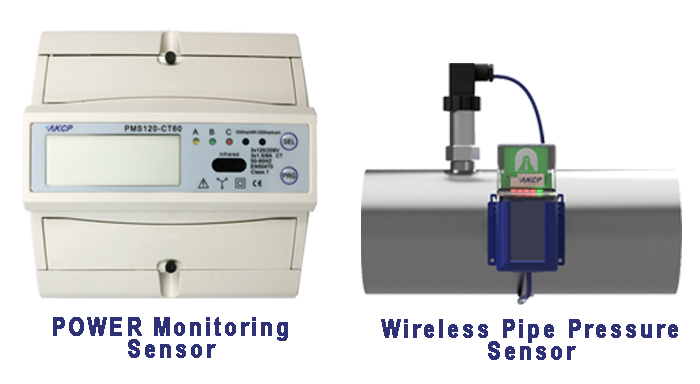

AKCP POWER SENSOR AND WIRELESS PIPE PRESSURE SENSOR

Power Monitoring Sensor

A power meter that can monitor and record real-time power usage must be used to monitor the cooling unit’s power. The AKCP Power Monitor Sensor provides critical data and allows you to monitor power remotely, removing the need for manual power audits and delivering instant notifications of possible problems. Power meter readings can also feed the live PUE calculations in SensorProbe+ and AKCPro Server to assess how efficiently your data center uses power. The built-in graphing tool can display the data gathered over time by the Power Monitor Sensor.

Wireless Pipe Pressure Monitoring

Tank pressure can be monitored by pairing an automatic pressure relief valve with a pressure sensor. The wireless sensor is a digital pressure gauge for monitoring all kinds of liquids and gases. It supports remote monitoring via the internet, with alerts and alarms when pressures fall outside pre-defined parameters, and it can be used to upgrade existing analog gauges.
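The idea of alerting when a reading leaves a pre-defined range can be sketched generically as follows. This is not AKCP’s API or configuration format; the class, the function, and the limit values are all hypothetical and serve only to illustrate the threshold logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PressureLimits:
    """Hypothetical acceptable pressure range for a coolant loop, in bar."""
    low_bar: float
    high_bar: float


def check_pressure(reading_bar: float, limits: PressureLimits) -> str:
    """Classify a reading as LOW, HIGH, or OK against the configured limits."""
    if reading_bar < limits.low_bar:
        return "LOW"
    if reading_bar > limits.high_bar:
        return "HIGH"
    return "OK"


limits = PressureLimits(low_bar=1.5, high_bar=3.0)  # example values only
for reading in (2.1, 1.2, 3.4):
    print(f"{reading} bar -> {check_pressure(reading, limits)}")
```

A real deployment would typically add hysteresis or a time delay so that a reading hovering at a limit does not trigger repeated alarms.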

Reference Links:

https://prasa-pl.com/blog/evolution-of-cooling-technologies-in-data-center-space/

https://datacenterfrontier.com/the-evolution-of-data-center-cooling/

https://submer.com/blog/the-evolution-of-datacenter-cooling/