Covid-19 continues to disrupt the lives of people all around the world. Conducting daily business online has increased sharply, more and more people are working from home, and reliable data centers are needed more than ever.

After all, the information that used to be stored in books, documents, and disks is now available online through a device that fits in the palm of a hand. Even more surprising, most of this information isn’t stored on the phone itself; it is retrieved over a connection to cloud-based network services. All of that information needs a place where it can be stored for retrieval. It is stored electronically on computer hardware that occupies physical space in racks of servers that continuously process and store information. At first glance, organizing one of these data centers seems simple: just line up the server racks, install a cooling system, and connect the company to the new network.

When planning a data center, even a small one, there is always a tension between saving money and installing more efficient cooling systems. This article gives data center managers information on how to minimize mechanical cooling power consumption through better cooling design. Better airflow management allows higher return air temperatures and a larger change in temperature (ΔT) across the cooling unit, which makes the cooling unit operate more efficiently. This, in turn, allows fewer cooling units and lower energy consumption.
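The link between ΔT and the number of cooling units follows from the sensible-heat equation for air, Q = ρ · c_p · V̇ · ΔT. For a fixed heat load Q, a larger ΔT means the cooling units have to move less airflow V̇. The minimal Python sketch below makes several assumptions:

* typical air properties (ρ ≈ 1.2 kg/m³, c_p ≈ 1.005 kJ/(kg·K));
* a hypothetical 100 kW load;
* a hypothetical airflow of 3 m³/s per cooling unit.

Real cooling-unit capacity also depends on coil and fan ratings, which this sketch ignores.

```python
import math

# Approximate properties of air near room temperature (assumed values).
AIR_DENSITY_KG_M3 = 1.2
AIR_CP_KJ_PER_KG_K = 1.005


def required_airflow_m3s(heat_load_kw: float, delta_t_k: float) -> float:
    """Airflow needed to remove heat_load_kw with an air temperature rise of delta_t_k.

    Rearranges Q = rho * cp * V * dT  ->  V = Q / (rho * cp * dT).
    """
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_load_kw / (AIR_DENSITY_KG_M3 * AIR_CP_KJ_PER_KG_K * delta_t_k)


def cooling_units_needed(heat_load_kw: float, delta_t_k: float,
                         unit_airflow_m3s: float) -> int:
    """Identical cooling units (hypothetical per-unit airflow) needed to move that air."""
    return math.ceil(required_airflow_m3s(heat_load_kw, delta_t_k) / unit_airflow_m3s)


if __name__ == "__main__":
    load_kw = 100.0     # hypothetical IT heat load
    unit_flow = 3.0     # hypothetical airflow per cooling unit, m^3/s
    for dt in (8.0, 12.0, 16.0):
        flow = required_airflow_m3s(load_kw, dt)
        units = cooling_units_needed(load_kw, dt, unit_flow)
        print(f"dT={dt:4.1f} K  airflow={flow:5.2f} m^3/s  units={units}")
```

With these assumed numbers, raising ΔT from 8 K to 16 K halves the required airflow. The unit count falls from four to two.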

Planning a data center is a complex endeavor. Like any other electronic device, servers and network equipment give off a lot of heat. Densely packed equipment requires carefully engineered cooling to maintain safe operating temperatures.

What Goals Should a Data Center Meet?

Capacity, cooling, budgeting, efficiency, and power should all be considered when planning to build a data center. Ignoring these issues can expose the servers to a risk of failure.

Major considerations for designing a data center

Ensure technical capacity: Plans for a data center must include estimates of how much data can be stored and processed. They must also leave room for future growth. Not all of the equipment will be acquired initially, but business expansion will eventually require more space.

Account for thermal needs: These facilities house equipment that generates a tremendous amount of heat, which requires cooling systems for heat dissipation. Cooling failures caused by improper design can quickly overheat servers. Most layouts should use a hot-and-cold-aisle arrangement that makes the best possible use of airflow, and may add liquid cooling methods, to transfer heat away from network equipment.

Minimize power consumption: Data processing and cooling are major energy consumers. In fact, data centers account for nearly two percent of United States electricity consumption. An effective data center power and cooling design optimizes both cooling and power distribution, reducing energy use and the carbon footprint.
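Power usage effectiveness (PUE) is one common way to quantify this efficiency, although the text above does not name it. PUE, defined by The Green Grid, is total facility energy divided by the energy delivered to IT equipment. A value of 1.0 would mean no overhead at all. The sketch below uses hypothetical meter readings to show how reducing cooling energy alone lowers PUE.

```python
from dataclasses import dataclass


@dataclass
class FacilityReading:
    """Energy used over the same period, in kWh (hypothetical meter values)."""
    it_equipment_kwh: float   # servers, storage, network gear
    cooling_kwh: float        # chillers, cooling units, fans, pumps
    other_kwh: float          # lighting, power distribution losses, etc.

    @property
    def total_kwh(self) -> float:
        return self.it_equipment_kwh + self.cooling_kwh + self.other_kwh


def pue(reading: FacilityReading) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if reading.it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return reading.total_kwh / reading.it_equipment_kwh


def cooling_share(reading: FacilityReading) -> float:
    """Fraction of total facility energy spent on cooling."""
    return reading.cooling_kwh / reading.total_kwh


if __name__ == "__main__":
    before = FacilityReading(it_equipment_kwh=1000, cooling_kwh=700, other_kwh=100)
    after = FacilityReading(it_equipment_kwh=1000, cooling_kwh=400, other_kwh=100)
    for label, r in (("before", before), ("after", after)):
        print(f"{label}: PUE={pue(r):.2f}, cooling share={cooling_share(r):.0%}")
```

With the assumed readings, cutting cooling energy from 700 kWh to 400 kWh lowers PUE from 1.80 to 1.50. The IT load is unchanged.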

How Does Heat Affect My Data Center Cooling Design?

Reducing the heat from servers is one of the most important concerns of data center maintenance. Large data centers use state-of-the-art airflow techniques and evaporative cooling systems. The most common method of regulating equipment temperature is airflow.

Regulating airflow involves several concepts, including:

Moving heat away from components: The basic function of airflow is to carry heat away from working equipment. Ensuring that every space has adequate airflow is essential to good cooling.

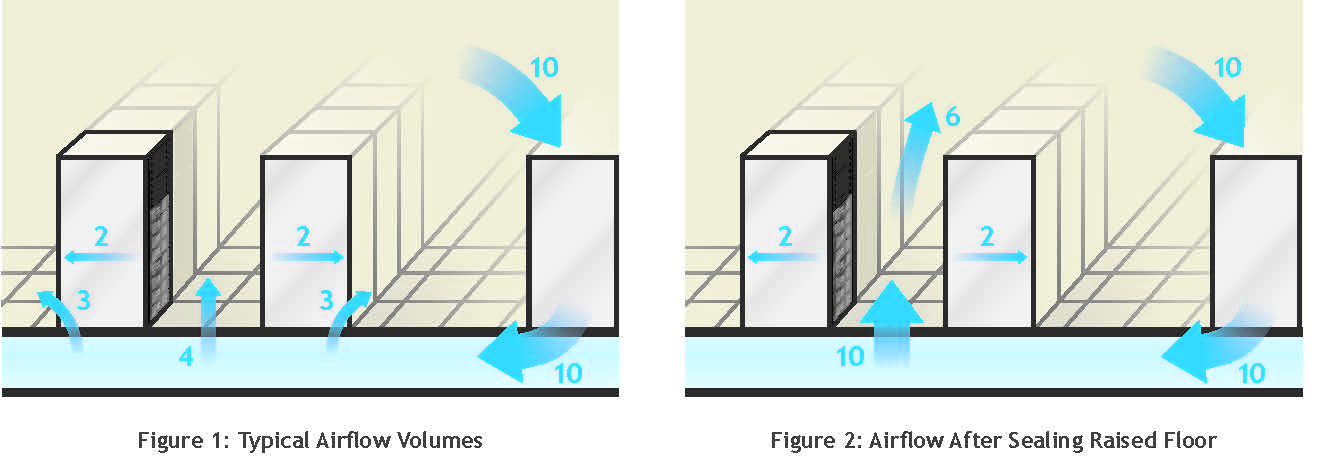

Having raised floor tiles: If the facility has a hot-and-cold-aisle layout in place, placing perforated raised-floor tiles in the cold aisles can increase airflow, helping with cooling. Generally speaking, these tiles do not provide the same benefit in hot aisles.

Separating hot and cold air: As air flows through the facility, warm and cold air should not mix. Letting hot exhaust air mix with cold supply air warms the air the servers draw in and makes the cooling system work harder. That defeats the purpose of an air-based cooling system. Using curtains or barriers to prevent mixing helps each aisle hold a steady temperature and run more efficiently. Containing either the hot aisles or the cold aisles is enough; containing both generally offers no benefit over containing only one.
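A simple mixing calculation shows why hot and cold air should be kept apart. When two air streams merge, the result is roughly a flow-weighted average temperature. This has two effects:

* Recirculated exhaust raises the temperature at server inlets.
* Cold supply air that bypasses the servers dilutes the return air and shrinks the ΔT at the cooling unit.

This is a sketch that assumes similar density and specific heat for both streams. The temperatures and fractions are hypothetical.

```python
def mixed_temperature(t_a: float, flow_a: float, t_b: float, flow_b: float) -> float:
    """Temperature of two merged air streams, weighted by volumetric flow.

    Assumes both streams have roughly the same density and specific heat,
    a reasonable approximation over typical data center temperatures.
    """
    total = flow_a + flow_b
    if total <= 0:
        raise ValueError("total flow must be positive")
    return (t_a * flow_a + t_b * flow_b) / total


def server_inlet_temp(t_supply_c: float, t_exhaust_c: float, recirc_fraction: float) -> float:
    """Server inlet temperature when recirc_fraction of intake air is recirculated exhaust."""
    if not 0.0 <= recirc_fraction <= 1.0:
        raise ValueError("recirc_fraction must be between 0 and 1")
    return mixed_temperature(t_supply_c, 1.0 - recirc_fraction, t_exhaust_c, recirc_fraction)


def cooling_unit_return_temp(t_exhaust_c: float, t_supply_c: float, bypass_fraction: float) -> float:
    """Return temperature when bypass_fraction of supply air skips the servers entirely."""
    if not 0.0 <= bypass_fraction <= 1.0:
        raise ValueError("bypass_fraction must be between 0 and 1")
    return mixed_temperature(t_exhaust_c, 1.0 - bypass_fraction, t_supply_c, bypass_fraction)


if __name__ == "__main__":
    supply, exhaust = 18.0, 35.0   # hypothetical temperatures in °C
    print(f"inlet with 20% recirculation: {server_inlet_temp(supply, exhaust, 0.2):.1f} °C")
    print(f"return with 30% bypass: {cooling_unit_return_temp(exhaust, supply, 0.3):.1f} °C")
```

Assume an 18 °C supply and 35 °C exhaust. Then 20 percent recirculation raises the server inlet to about 21.4 °C. Separately, 30 percent bypass drops the return temperature from 35 °C to about 29.9 °C, which shrinks the ΔT the cooling unit sees.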

Because of the continued growth of major data centers, and concerns over their power consumption, large data centers receive much of the attention. Smaller data centers, however, such as a single server in an office building basement, also constitute a significant portion of the data center sector — approximately 40 percent of all server stock — and consume up to 13 billion kilowatt-hours (kWh) annually. Because smaller networks might not require the same intensity of cooling, these systems often do not employ advanced cooling methods, relying instead on standard, inefficient room-cooling techniques.

Powering a dedicated cooling system may seem to consume more energy, but electronics often produce concentrated hot spots. Cooling an entire room to the temperature the electronics need is extremely inefficient, especially when heat is not directed away from the air intakes. Cooling systems that target specific areas of the rack, and that use scientific principles to make airflow more efficient, are preferable. The energy cost of such targeted cooling will very likely be less than the power required to cool an entire room.

Complicated cooling systems, regardless of method, can experience breakdowns that endanger the equipment. Blower motors and water pumps can malfunction, stopping the flow of the fluids that carry heat away from components. Poor maintenance can allow dust and dirt to block essential airflow. Sensors can register inaccurate readings. In any of these situations, temperatures can quickly reach dangerous levels and damage hardware. This is why regular monitoring and maintenance are integral to keeping a data center running.
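The monitoring described above can start as simple threshold and sanity checks on each temperature sensor. The sketch below is a generic illustration, not the interface of any particular monitoring product. The default 18 to 27 °C band follows the ASHRAE recommended range for server inlet air. The rate-of-rise, plausibility, and stuck-sensor limits are hypothetical values that would be tuned per site.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class Thresholds:
    # Defaults follow the ASHRAE recommended server inlet range of 18-27 °C;
    # use the equipment manufacturer's figures where they differ.
    low_c: float = 18.0
    high_c: float = 27.0
    max_rise_per_sample_c: float = 2.0   # hypothetical rate-of-rise limit
    plausible_min_c: float = -10.0       # hypothetical sanity band: readings outside
    plausible_max_c: float = 80.0        # it suggest a faulty sensor, not a real temperature
    flatline_samples: int = 12           # hypothetical: this many identical readings = stuck sensor


def check_sensor(readings: Sequence[float],
                 limits: Optional[Thresholds] = None) -> List[str]:
    """Return alarm messages for a time-ordered series of inlet temperatures in °C."""
    limits = limits or Thresholds()
    if not readings:
        return ["no data: sensor may be offline"]

    alarms: List[str] = []
    latest = readings[-1]
    if not limits.plausible_min_c <= latest <= limits.plausible_max_c:
        # Other checks are meaningless if the sensor itself is faulty.
        return [f"implausible reading {latest:.1f} °C: check the sensor"]

    if latest > limits.high_c:
        alarms.append(f"high temperature {latest:.1f} °C")
    elif latest < limits.low_c:
        alarms.append(f"low temperature {latest:.1f} °C")

    if len(readings) >= 2 and latest - readings[-2] > limits.max_rise_per_sample_c:
        alarms.append(f"rapid rise of {latest - readings[-2]:.1f} °C since last sample")

    recent = readings[-limits.flatline_samples:]
    if len(recent) == limits.flatline_samples and len(set(recent)) == 1:
        alarms.append("reading unchanged for many samples: sensor may be stuck")

    return alarms


if __name__ == "__main__":
    print(check_sensor([22.0, 22.5, 25.8, 28.1]))   # high temperature and rapid rise
```

The checks cover three of the failure modes named above: overheating, sudden temperature changes after a pump or fan stops, and sensors that report implausible or frozen values.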

What Is a Hot and Cold Aisle Layout?

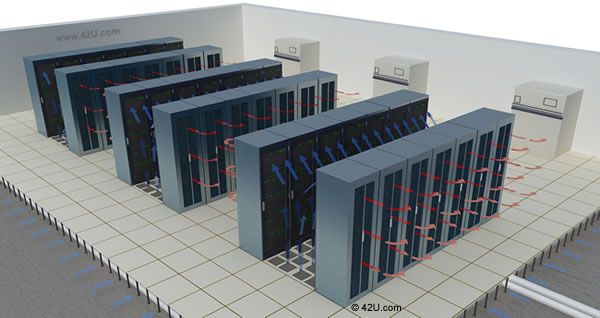

The hot-and-cold-aisle arrangement is the most popular cooling layout for data centers. A server is designed to draw in room air through the front, cool its components, and then expel the hot air out the back. It follows that placing the front of one server near the back of another will make it draw in already-heated air. This arrangement fails to provide the required cooling and also warms the air in the room as a whole.

To solve this, engineers and technicians arrange rows of servers in hot and cold aisles. Two rows of servers face each other across an aisle, creating a pathway intended only for the cool air the servers draw in. The backs of those rows, in turn, face the backs of the neighboring rows, so both rows expel hot air into the same aisle. In this way, hardware draws in only cool air from the cold aisle. The warm air can then be cooled adequately before being recirculated to the servers.
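The alternating arrangement described above can be expressed as a small data model. Each row has a facing direction, and each aisle is classified as follows:

* cold, when both adjacent rows draw air from it;
* hot, when both rows exhaust into it;
* mixed, when one row breathes the other's exhaust.

The north/south orientation names are hypothetical. The sketch checks layout geometry only, not airflow volumes.

```python
from enum import Enum
from typing import List


class Facing(Enum):
    """Direction a row's fronts (air intakes) point; exhaust leaves the opposite side."""
    NORTH = "north"
    SOUTH = "south"


def alternating_rows(n_rows: int) -> List[Facing]:
    """Even rows face north and odd rows face south, so fronts meet fronts."""
    return [Facing.NORTH if i % 2 == 0 else Facing.SOUTH for i in range(n_rows)]


def classify_aisles(rows: List[Facing]) -> List[str]:
    """Label the aisle between each pair of adjacent rows.

    Rows are listed from south to north, so the aisle between rows[i] and
    rows[i + 1] lies on the north side of rows[i] and the south side of rows[i + 1].
    """
    labels = []
    for south_row, north_row in zip(rows, rows[1:]):
        intake_from_south = south_row is Facing.NORTH   # front points into this aisle
        intake_from_north = north_row is Facing.SOUTH
        if intake_from_south and intake_from_north:
            labels.append("cold")    # both rows draw supply air from this aisle
        elif not intake_from_south and not intake_from_north:
            labels.append("hot")     # both rows exhaust into this aisle
        else:
            labels.append("mixed")   # one row inhales the other's exhaust
    return labels


if __name__ == "__main__":
    print(classify_aisles(alternating_rows(4)))   # ['cold', 'hot', 'cold']
    print(classify_aisles([Facing.NORTH] * 3))    # ['mixed', 'mixed']
```

The second call models rows that all face the same way, the front-to-back arrangement the text warns against. Every aisle comes out mixed.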

Some large data centers can put this excess heat to use. In cooler climates, some centers treat the hot air as a resource rather than a byproduct that requires more power to cool, and direct it through ductwork to heat other parts of the building. This combined cooling and heating approach saves energy that would otherwise be spent on general heating.

If the heat is not sent throughout the building, it must be treated in some way before reentering the system. Some systems use heat exchangers to cool the air before sending it back to the room. Others use geothermal principles, relying on the fact that ground temperature stays relatively constant regardless of the weather outside. Air sent through underground ductwork sheds heat to the cooler ground, so energy is needed only for the fans that move the warm air through the system.
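The underground-duct approach can be estimated with a standard heat-transfer result for air flowing through a duct whose wall stays at a constant temperature. The outlet temperature approaches the ground temperature exponentially as the duct's heat-transfer conductance (UA) grows relative to the airflow's heat capacity. This is a sketch under simplifying assumptions: constant ground temperature, a uniform duct, and approximate air properties. The UA and airflow values are hypothetical; in practice they would come from soil and duct data.

```python
import math

AIR_DENSITY_KG_M3 = 1.2       # approximate, near room temperature
AIR_CP_J_PER_KG_K = 1005.0    # approximate specific heat of air


def earth_tube_outlet_temp(t_in_c: float, t_ground_c: float,
                           ua_w_per_k: float, airflow_m3s: float) -> float:
    """Outlet air temperature of a buried duct whose wall stays at ground temperature.

    Constant-wall-temperature duct result:
        T_out = T_g + (T_in - T_g) * exp(-UA / (m_dot * cp))
    where UA is the duct's overall heat-transfer conductance in W/K.
    """
    m_dot = AIR_DENSITY_KG_M3 * airflow_m3s            # mass flow rate, kg/s
    if m_dot <= 0:
        raise ValueError("airflow must be positive")
    ntu = ua_w_per_k / (m_dot * AIR_CP_J_PER_KG_K)     # number of transfer units
    return t_ground_c + (t_in_c - t_ground_c) * math.exp(-ntu)


def heat_removed_kw(t_in_c: float, t_out_c: float, airflow_m3s: float) -> float:
    """Heat given up to the ground, computed from the air's temperature drop."""
    return AIR_DENSITY_KG_M3 * airflow_m3s * AIR_CP_J_PER_KG_K * (t_in_c - t_out_c) / 1000.0


if __name__ == "__main__":
    # Hypothetical inputs: 35 °C exhaust, 12 °C ground, UA of 2000 W/K, 1 m^3/s of air.
    t_out = earth_tube_outlet_temp(35.0, 12.0, 2000.0, 1.0)
    print(f"outlet {t_out:.1f} °C, heat removed {heat_removed_kw(35.0, t_out, 1.0):.1f} kW")
```

A longer or better-coupled duct (larger UA) brings the outlet closer to ground temperature. More airflow for the same duct leaves the air warmer.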

Data center planners must find efficient means of cooling the servers. Some operators avoid spending on energy-saving equipment because of its high cost. In trying to save money, they end up paying more, because ineffective equipment can make systems break down sooner, resulting in further expenses.

How Maximizing Return Temperature Affects Efficiency

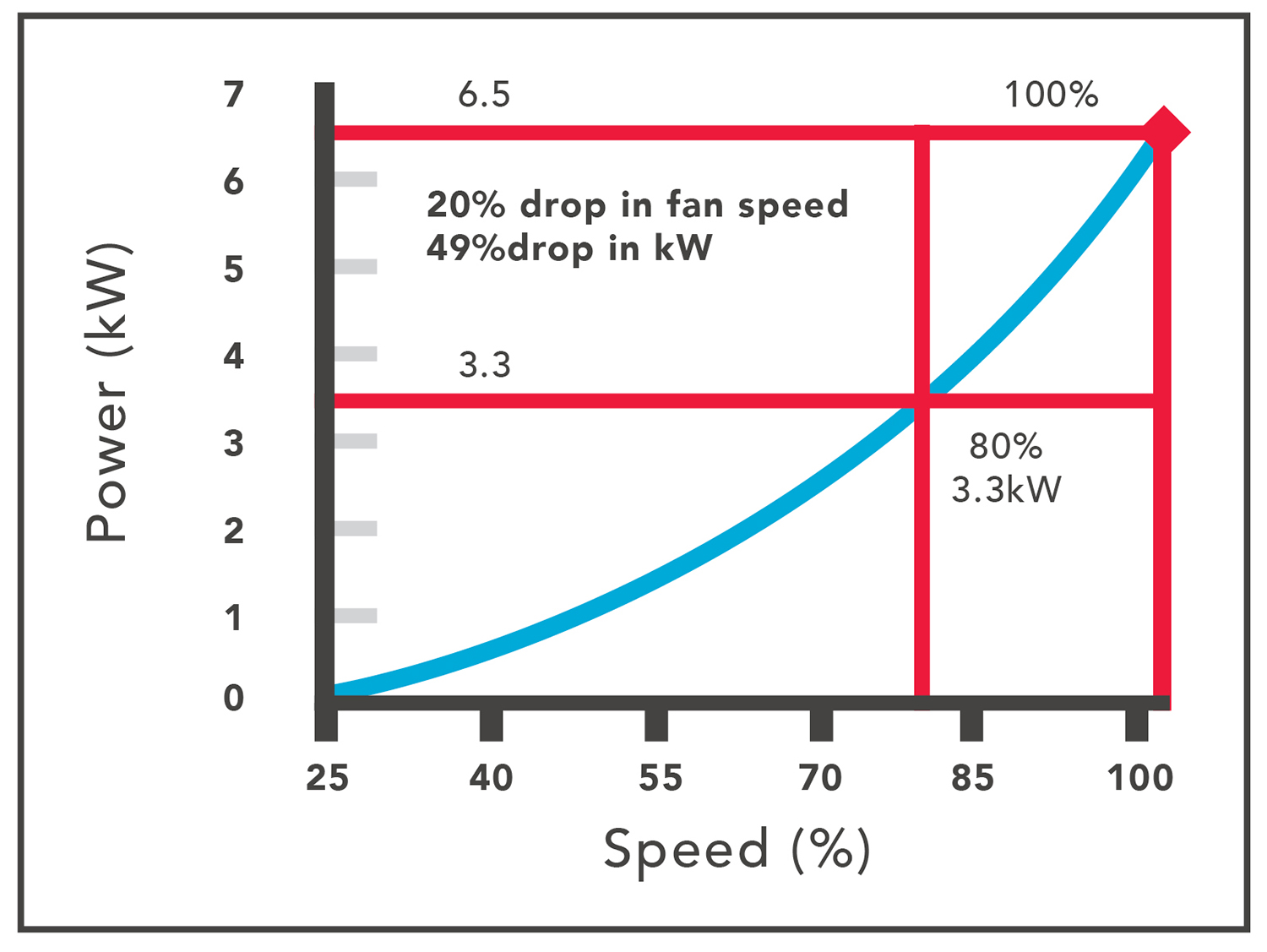

One of the most important elements of data center temperature management is maximizing the return temperature. Close attention to air density and temperature can make a significant difference to operating efficiency. Tracking both helps keep warm exhaust air from mixing with cold supply air and moves air through the system more efficiently. This can cut energy consumption by up to 20 percent and reduce the number of cooling units required.
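The Return Temperature Index (RTI), proposed by Magnus Herrlin, is one published way to check whether the return temperature is being maximized, although the text above does not mention it. RTI is the temperature rise seen at the cooling units divided by the average temperature rise across the IT equipment. The reading works like this:

* Values near 100 percent suggest balanced airflow.
* Lower values point to cold air bypassing the equipment.
* Higher values point to hot exhaust recirculating.

The sketch below assumes averaged temperature readings are available. Its ±5 percent tolerance band is a hypothetical choice.

```python
def return_temperature_index(t_return_c: float, t_supply_c: float,
                             equipment_delta_t_k: float) -> float:
    """Return Temperature Index (RTI) in percent.

    Compares the temperature rise seen by the cooling units (return minus supply)
    with the average temperature rise across the IT equipment.
    """
    if equipment_delta_t_k <= 0:
        raise ValueError("equipment_delta_t_k must be positive")
    return 100.0 * (t_return_c - t_supply_c) / equipment_delta_t_k


def diagnose(rti_percent: float, tolerance: float = 5.0) -> str:
    """Rough interpretation of an RTI value; the tolerance band is a hypothetical choice."""
    if rti_percent < 100.0 - tolerance:
        return "cold air is bypassing the equipment: return temperature is lower than it could be"
    if rti_percent > 100.0 + tolerance:
        return "hot exhaust is recirculating into equipment intakes"
    return "airflow roughly balanced"


if __name__ == "__main__":
    rti = return_temperature_index(t_return_c=26.0, t_supply_c=18.0, equipment_delta_t_k=15.0)
    print(f"RTI = {rti:.0f}%: {diagnose(rti)}")
```

Take an 18 °C supply, a 26 °C return, and a 15 K rise across the servers. These give an RTI of about 53 percent, a sign that much of the cold air never reaches the equipment.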

Matching Cooling Capacity

Cooling capacity must be calculated to match the specific needs of the equipment, so that cooling air is delivered efficiently. The idea is to measure the heat in the system, direct cooling to the hot areas, and use the most effective system for delivering cooling airflow to each section. The more concentrated the cooling, the less air the system needs to move, which saves money. This requires a significant up-front investment, but over time the investment can pay for itself.
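The claim that the up-front investment pays for itself can be checked with a simple payback calculation: divide the extra cost by the yearly energy savings. This sketch ignores discounting, maintenance, and equipment-life effects. Every number in it is hypothetical, including the cooling loads, the 0.12-per-kWh electricity price, and the up-front cost.

```python
from typing import Optional

HOURS_PER_YEAR = 8760.0


def annual_energy_savings(cooling_kw_before: float, cooling_kw_after: float,
                          price_per_kwh: float = 0.12) -> float:
    """Yearly cost savings from a lower average cooling power draw.

    price_per_kwh is a hypothetical electricity price in local currency units.
    """
    saved_kwh = (cooling_kw_before - cooling_kw_after) * HOURS_PER_YEAR
    return saved_kwh * price_per_kwh


def simple_payback_years(upfront_cost: float, annual_savings: float) -> Optional[float]:
    """Years until cumulative savings equal the up-front cost (no discounting)."""
    if annual_savings <= 0:
        return None   # never pays back on energy savings alone
    return upfront_cost / annual_savings


if __name__ == "__main__":
    savings = annual_energy_savings(cooling_kw_before=50.0, cooling_kw_after=40.0)
    years = simple_payback_years(upfront_cost=30000.0, annual_savings=savings)
    print(f"annual savings {savings:,.0f}, payback {years:.1f} years")
```

With these assumed figures, a 10 kW reduction in average cooling power saves 87,600 kWh per year, or about 10,500 currency units. A 30,000 investment therefore pays back in under three years.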

Planning Steps to Avoid

When planning a data center, what to leave out is as important as what to include. Companies often try to cut corners to save money, but this practice can cause a lot of headaches. A proper plan is essential to securing the long life and effectiveness of the data center.

Some of the most damaging or inefficient planning mistakes include:

Flooding the whole room with cool air: Filling the entire room with cool air is inefficient, because parts of the room do not need cooling and that energy is wasted. Even a small data center with a few racks can minimize spending and protect its equipment by using appropriate, targeted cooling techniques.

Keeping inconsistent temperatures: Data systems function best at a constant temperature. A steady temperature allows more control over the environment and prevents moisture and condensation from building up within the electronics. For the best results, use a cooling technology that monitors the status of the equipment and supplies cooling as needed to maintain a steady temperature.

Overcooling: Operating the cooling systems at maximum level consumes a lot of energy with no added benefit. It’s best to check the manufacturer’s recommended temperatures and stay within that range.

Losing out on efficiency up front: Cheaper cooling methods may save money initially, but the servers will eventually suffer the most, and replacing them will cost more than was saved. Find a system that not only fits the needs of the data center but also extends the life of the servers.

Maximizing all of the space at once: When a data center has plenty of space, it is common to fill all of it with server racks. This can hinder the flow of cool air and leave no room for new equipment with updated technology. It also takes more cabling to connect all the servers to one centralized control location.

Implementing patchwork assembly: Always have a plan for the servers’ arrangement. Filling rows of racks is easy, but without proper planning it can quickly lead to tangled cabling that forms air dams, wasted space, poor air circulation, and other problems.
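The list above advises keeping a steady temperature, avoiding overcooling, and supplying cooling only as needed. Together these amount to closed-loop control: measure the inlet temperature and adjust cooling output only as much as required. The sketch below uses a basic proportional-integral (PI) controller with a toy room model. PI control is one common choice, not what any specific product does. The setpoint, gains, and heating and cooling rates are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Tuple


@dataclass
class PIController:
    """Discrete proportional-integral controller for cooling output in percent (0-100).

    The setpoint and gains are hypothetical and would need tuning on a real system.
    """
    setpoint_c: float = 24.0
    kp: float = 8.0           # % output per °C of error
    ki: float = 0.5           # % output per °C·s of accumulated error
    out_min: float = 0.0
    out_max: float = 100.0
    _integral: float = field(default=0.0, init=False, repr=False)

    def update(self, measured_c: float, dt_s: float) -> float:
        # Positive error means the air is warmer than the setpoint, so cool harder.
        error = measured_c - self.setpoint_c
        candidate_integral = self._integral + error * dt_s
        output = self.kp * error + self.ki * candidate_integral
        if self.out_min <= output <= self.out_max:
            # Accumulate the integral only while unsaturated (simple anti-windup).
            self._integral = candidate_integral
        return min(self.out_max, max(self.out_min, output))


def simulate(steps: int = 600, dt_s: float = 1.0) -> Tuple[float, float]:
    """Run the controller against a toy room model; returns (final temp, final output)."""
    ctrl = PIController()
    temp_c = 30.0             # hypothetical starting inlet temperature
    heat_rate = 0.5           # °C/s rise with no cooling (toy value)
    cool_rate = 0.01          # °C/s drop per % of cooling output (toy value)
    output = 0.0
    for _ in range(steps):
        output = ctrl.update(temp_c, dt_s)
        temp_c += (heat_rate - cool_rate * output) * dt_s
    return temp_c, output


if __name__ == "__main__":
    final_temp, final_output = simulate()
    print(f"final temperature {final_temp:.2f} °C at {final_output:.1f} % cooling output")
```

In this toy model, the temperature settles at the 24 °C setpoint with cooling running at about 50 percent rather than at maximum.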

Conclusions

Data centers play an important role in society, connecting networks to share information essential to our daily lives. Most people are unaware of the many processes within data center systems that deliver the data they are looking for. A vast network of facilities supports the storage and use of that information.

Without proper planning and technology, a data center can quickly exhaust its budget through inefficient cooling or maintenance. To keep the equipment in good working order and performing at a high level, follow data center best practices.

AKCP provides a complete monitoring system for data centers to ensure that environmental conditions stay within required limits.
