To keep a data center functioning properly, its temperature must be well regulated at all times. Excessive heat puts a data center at risk of failure. This failure can lead to downtime, which causes lost data and revenue. Based on a Gartner study, the cost of downtime is estimated at $5,600 per minute, which can amount to as much as $336,000 per hour. Keeping data center temperatures within recommended guidelines is crucial to preventing the company from losing hundreds of thousands of dollars in revenue.
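The two downtime figures above are the same estimate at different time scales. As a quick sanity check, the arithmetic can be sketched as follows; the function name and the example outage length are illustrative assumptions, and only the per-minute figure comes from the text.

```python
# Per-minute downtime cost quoted in the article (attributed to Gartner).
COST_PER_MINUTE_USD = 5_600


def downtime_cost(minutes: float, cost_per_minute: float = COST_PER_MINUTE_USD) -> float:
    """Estimated revenue lost for an outage lasting `minutes` (hypothetical helper)."""
    if minutes < 0:
        raise ValueError("minutes must be non-negative")
    return minutes * cost_per_minute


if __name__ == "__main__":
    # 60 minutes at $5,600 per minute reproduces the hourly figure.
    print(f"One hour of downtime: ${downtime_cost(60):,.0f}")
    # A shorter, assumed 15-minute outage for comparison.
    print(f"15 minutes of downtime: ${downtime_cost(15):,.0f}")
```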

The TC 9.9 data center temperature guidelines are closely followed by the industry; however, they are not a legal standard. ASHRAE (the American Society of Heating, Refrigerating and Air-Conditioning Engineers) also publishes Standard 90.1, “Energy Standard for Buildings Except Low-Rise Residential Buildings,” which is often referenced by state and local building departments. It contains highly prescriptive methodologies for data center cooling systems. However, these prescriptions can conflict with the needs of facilities adopting more advanced IT equipment.

ASHRAE Standard 90.4-2019, Energy Standard for Data Centers, sets the minimum energy-efficiency requirements for the design and operation of data centers. It also takes into consideration their unique needs compared to other buildings. It was developed under the premise that data centers are highly critical facilities whose requirements demand meticulous attention to their possible impact. Despite a few updates, it still relies on Standard 90.1 for service water heating, building envelope, lighting, and other equipment.

How Are IT Industries Affected By These Temperature Guidelines?

IT industries undergoing new construction or major renovations are the ones primarily affected by the new ranges defined in the 2011 ASHRAE guidelines. The updated allowable ranges for classes A3 and A4 are designed to eliminate obstacles to new data center cooling strategies such as free-cooling methods. Free-cooling takes advantage of a facility’s local climate by using outside air to cool IT equipment without mechanical refrigeration. One example is drawing filtered outside air directly into the data center. Adhering to the new ASHRAE guidelines can make free-cooling viable in more climates and allow the data center to be cooled without mechanical refrigeration for longer periods. Less use of refrigeration equipment means lower operating expenses. Capital investment can also be saved by eliminating or significantly reducing refrigeration equipment.

Note that the implementation must be done carefully to maintain consistent, efficient IT operations. Although the allowable ranges have changed, the ASHRAE recommended operating range remains the same across all operating classes. If operating outside the recommended range is unavoidable, it should be for a limited time only, so that equipment reliability is not put into question.

Raising a cooling system’s temperature beyond the recommended range may have undesirable consequences, including:

- Heightened fan operation within the IT equipment, which can disrupt cooling system savings and increase noise levels

- Warning/alarm notifications from IT equipment operating beyond recommended levels

- Reduced lifespan of IT and cooling equipment due to prolonged exposure to higher operating temperatures

Shifting to free-cooling methods requires drastic renovation to existing data centers or meticulous facility planning if constructing a new one. However, free-cooling under the ASHRAE ranges can lead to significant CAPEX and OPEX savings if executed correctly.

Prescribed Computer Room Temperature

Data centers and server rooms have a combination of hot and cool air. Server fans thrust out hot air while running. Meanwhile, cooling systems bring in cool air to negate the hot air produced by the exhaust. Finding the right balance between hot and cool air is essential in avoiding data center downtime.

The range that ASHRAE recommends for maximum uptime and hardware life is between 64°F and 81°F. This range balances cooling effort against hardware safety and leaves a sufficient buffer in case an air conditioning malfunction occurs.

Server manufacturer Dell states that the ideal temperature for their servers is 80°F (26.7°C). Data centers and companies with server rooms using higher allowable temperatures do not need to cool them as much as they did previously. Less cooling time means reduced power consumption and additional monetary savings.

Unfortunately, higher operating temperatures can lead to less reaction time in the event of rapidly escalating temperatures due to a cooling unit breakdown. A data center filled with servers operating at higher temperatures has the risk of instantaneously experiencing simultaneous hardware failures. The recent ASHRAE regulations emphasize the importance of proactive environmental temperature monitoring inside server rooms.

Prescribed Computer Room Humidity

Relative Humidity is the ratio of the amount of water vapor present in the air at a particular temperature point to the maximum amount that the air could hold at the indicated temperature. It is expressed as a percentage, and a higher value means a more humid air-water mixture. In a server room, it is recommended that ambient relative humidity levels are kept within the range of 45% to 55% for maximum performance and stability.
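The ratio described above can be sketched in a few lines. This is a minimal illustration, not a calibrated instrument model: it assumes the Magnus approximation for saturation vapor pressure (the constants 6.112, 17.62, and 243.12 are one common parameterization), and all function names are hypothetical. The 45% to 55% band is the one quoted in the text.

```python
import math


def saturation_vapor_pressure_hpa(temp_c: float) -> float:
    """Approximate saturation vapor pressure over water in hPa (Magnus form, assumed)."""
    return 6.112 * math.exp(17.62 * temp_c / (243.12 + temp_c))


def relative_humidity_pct(vapor_pressure_hpa: float, temp_c: float) -> float:
    """Actual vapor pressure as a percentage of the maximum at this temperature."""
    return 100.0 * vapor_pressure_hpa / saturation_vapor_pressure_hpa(temp_c)


def in_recommended_band(rh_pct: float, low: float = 45.0, high: float = 55.0) -> bool:
    """True if the reading falls inside the 45-55% band mentioned in the article."""
    return low <= rh_pct <= high


if __name__ == "__main__":
    temp_c = 22.0
    # Assumed example: air holding half of the vapor it could hold at 22 °C.
    actual = 0.5 * saturation_vapor_pressure_hpa(temp_c)
    rh = relative_humidity_pct(actual, temp_c)
    print(f"RH = {rh:.1f}% (in recommended band: {in_recommended_band(rh)})")
```

Because the saturation pressure rises with temperature, the same amount of water vapor yields a lower relative humidity in warmer air, which is why the definition ties the ratio to a specific temperature.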

ASHRAE 2016 temperature guidelines maintain the same recommendation, with 50% humidity. The minimum is recommended at 20%, while the maximum is 80%.

Dry air caused by low humidity can lead to an electrostatic discharge (ESD), which can destroy essential server components. Too much humidity will lead to condensation, which causes corrosion in the hardware and overall failure of the equipment.

Key Topics In Data Center Temperature Standards:

Power Usage Effectiveness (PUE)

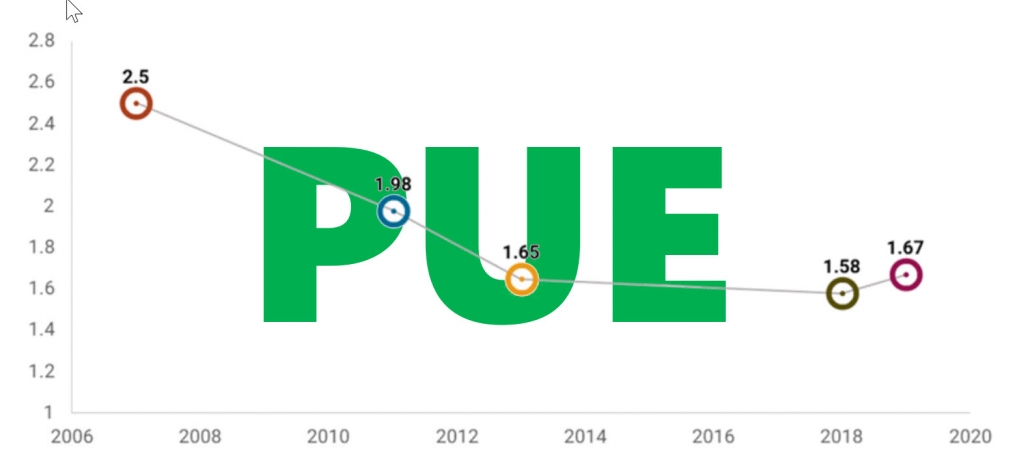

The initial PUE measurement determined a data center’s energy efficiency through a one-time measurement of power in kilowatts. However, it tends to be misleading because some facilities can claim low PUEs during a cold spell when the need for cooling energy is minimized.

It was updated to PUE version 2, which takes into account annualized energy instead of power. Version 2 defines four PUE categories, PUE0 through PUE3, and three specific measurement points. If a data center does not have the energy meters needed at the specified measurement points, it uses PUE0, which is based on power at the highest draw during warm weather to provide a more accurate representation. The other three PUE categories are based on annualized energy (kWh).
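The difference between a one-time reading and an annualized figure is easy to see numerically. The sketch below assumes the usual definition of PUE as total facility energy divided by IT equipment energy; the monthly kWh values are invented purely for illustration, and the function names are hypothetical.

```python
def pue(total_facility: float, it_equipment: float) -> float:
    """PUE as total facility energy (or power) divided by IT equipment energy (or power)."""
    if it_equipment <= 0:
        raise ValueError("IT equipment load must be positive")
    return total_facility / it_equipment


def annualized_pue(monthly_total_kwh: list, monthly_it_kwh: list) -> float:
    """PUE computed over a full year of metered energy rather than one snapshot."""
    if len(monthly_total_kwh) != 12 or len(monthly_it_kwh) != 12:
        raise ValueError("expected 12 monthly values for each series")
    return pue(sum(monthly_total_kwh), sum(monthly_it_kwh))


# Illustrative data only: a constant IT load, with cooling overhead peaking in summer.
MONTHLY_IT_KWH = [100_000] * 12
MONTHLY_TOTAL_KWH = [
    120_000, 120_000, 130_000, 140_000, 150_000, 170_000,
    180_000, 180_000, 160_000, 140_000, 130_000, 120_000,
]

if __name__ == "__main__":
    january = pue(MONTHLY_TOTAL_KWH[0], MONTHLY_IT_KWH[0])
    yearly = annualized_pue(MONTHLY_TOTAL_KWH, MONTHLY_IT_KWH)
    print(f"Cold-month snapshot PUE: {january:.2f}")
    print(f"Annualized PUE:          {yearly:.2f}")
```

With these made-up numbers the cold-month snapshot looks noticeably better than the annualized value, which is the distortion the move to annualized energy was meant to remove.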

Dry Bulb Thermometer

“Dry bulb” thermometer readings are not influenced by the humidity level of the air; the thermometer simply measures ambient air temperature. It is the type of measurement most commonly used in the prescribed IT equipment operating ranges. Dry-bulb temperature, or “air temperature,” is measured with a dry-bulb thermometer exposed freely to the air but shielded from radiation and moisture.

Wet Bulb Thermometer

The “wet bulb” thermometer has a bulb covered by a muslin sleeve that is kept wet with clean distilled water, exposed to the air, and shielded from radiation. The reading is taken with air flowing over the instrument at a standardized velocity, which causes evaporation. The rate of evaporation and the corresponding cooling effect depend on the moisture content of the air. The thermometer indicates a lower reading in drier air because more moisture evaporates from around the bulb.

Dew Point Temperature

The Dew Point is the temperature where the air becomes completely saturated, and the water vapor begins to condense out of the air. Its effect is usually observed when condensation forms on an item that is colder than the dew point. The humidity range in IT equipment operations should always be specified as “non-condensing.” It is an essential consideration for sensitive electronic equipment that needs to be kept free from moisture to remain operational.
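To make the “non-condensing” requirement concrete, the dew point can be estimated from dry-bulb temperature and relative humidity. The sketch below assumes the Magnus approximation with one common set of constants (17.62 and 243.12 °C); the function names and the example surface temperatures are hypothetical, not taken from any ASHRAE document.

```python
import math

# Magnus constants (an assumed, commonly used parameterization over water).
MAGNUS_A = 17.62
MAGNUS_B = 243.12  # degrees Celsius


def dew_point_c(temp_c: float, rh_pct: float) -> float:
    """Approximate dew point in °C from air temperature (°C) and relative humidity (%)."""
    if not 0.0 < rh_pct <= 100.0:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = math.log(rh_pct / 100.0) + MAGNUS_A * temp_c / (MAGNUS_B + temp_c)
    return MAGNUS_B * gamma / (MAGNUS_A - gamma)


def condensation_risk(surface_temp_c: float, air_temp_c: float, rh_pct: float) -> bool:
    """True if a surface is at or below the dew point of the surrounding air."""
    return surface_temp_c <= dew_point_c(air_temp_c, rh_pct)


if __name__ == "__main__":
    air_c, rh = 24.0, 50.0
    print(f"Dew point at {air_c} °C and {rh}% RH: {dew_point_c(air_c, rh):.1f} °C")
    # Assumed examples: a chilled pipe at 10 °C versus a cabinet panel at 18 °C.
    print("Chilled pipe at 10 °C at risk:", condensation_risk(10.0, air_c, rh))
    print("Cabinet panel at 18 °C at risk:", condensation_risk(18.0, air_c, rh))
```

At 100% relative humidity the formula returns the air temperature itself, which matches the definition of the dew point as the temperature of full saturation.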

Recommended vs. Allowable temperatures

The “recommended” temperature range remains relatively constant at 64.4-80.6°F (18-27°C) as of 2011. However, the recent “allowable” A1-A4 ranges came as a shock to the industry.
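The Fahrenheit bounds follow directly from the Celsius ones, and a reading can be checked against both kinds of range. In the sketch below, the 18-27°C recommended range and the 5-40°C A3 allowable range are the figures quoted in this article; the function names and the labels returned are illustrative assumptions.

```python
RECOMMENDED_C = (18.0, 27.0)   # recommended range quoted in the article
A3_ALLOWABLE_C = (5.0, 40.0)   # A3 allowable range quoted in the article


def c_to_f(temp_c: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return temp_c * 9.0 / 5.0 + 32.0


def classify_inlet(temp_c: float) -> str:
    """Label an inlet temperature against the recommended and A3 allowable ranges."""
    if RECOMMENDED_C[0] <= temp_c <= RECOMMENDED_C[1]:
        return "recommended"
    if A3_ALLOWABLE_C[0] <= temp_c <= A3_ALLOWABLE_C[1]:
        return "allowable (A3)"
    return "out of range"


if __name__ == "__main__":
    low_f, high_f = (c_to_f(t) for t in RECOMMENDED_C)
    print(f"Recommended range in Fahrenheit: {low_f:.1f} to {high_f:.1f}")
    for reading_c in (22.0, 33.0, 45.0):
        print(f"{reading_c:.1f} °C -> {classify_inlet(reading_c)}")
```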

The “allowable” data center classes were established to broaden the options and provide additional information on data center temperature regulations. Still, they made the process more complicated for data center operators, who must balance reliability, optimized efficiency, reduced ownership costs, and improved overall performance. Allowable temperatures were drafted to accommodate the operating ranges of newly introduced, more thermally resistant equipment.

Room vs. IT Inlet

In the first edition of the Temperature Guidelines, ideal data center conditions were expressed in terms of the temperature of the “room.” However, “room” temperatures are unreliable since different areas across the whitespace can have substantially varying temperatures. The second edition changed how temperature is measured: the guidelines instead referenced the temperature of the “air entering IT equipment.” This emphasized the importance of airflow management at higher IT equipment densities and the suggested solution of a Cold-Aisle/Hot-Aisle cabinet layout. To maximize temperature monitoring capabilities, thermal sensors placed per aisle and per cabinet are highly recommended.
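A small example shows why a single room-level figure can hide a problem that per-cabinet inlet sensors would catch. This is a sketch under stated assumptions: the cabinet names and readings are invented, the helper names are hypothetical, and the 27°C limit is the upper end of the recommended range.

```python
from statistics import mean

RECOMMENDED_MAX_C = 27.0  # upper end of the recommended inlet range

# Invented inlet readings in °C, keyed by a hypothetical cabinet ID.
SAMPLE_READINGS = {
    "A01": [21.0, 22.5, 23.0],
    "A02": [22.0, 24.0, 31.5],
    "B01": [20.5, 21.0, 21.5],
}


def room_average(readings: dict) -> float:
    """Average of every inlet reading, which mimics a single 'room' temperature."""
    return mean(t for temps in readings.values() for t in temps)


def hot_cabinets(readings: dict, limit_c: float = RECOMMENDED_MAX_C) -> dict:
    """Map each cabinet whose hottest inlet reading exceeds the limit to that reading."""
    return {
        cabinet: max(temps)
        for cabinet, temps in readings.items()
        if temps and max(temps) > limit_c
    }


if __name__ == "__main__":
    print(f"Room average: {room_average(SAMPLE_READINGS):.1f} °C")
    for cabinet, peak in hot_cabinets(SAMPLE_READINGS).items():
        print(f"Cabinet {cabinet} inlet peaked at {peak:.1f} °C")
```

In this made-up data set the room average sits comfortably inside the recommended range while one cabinet inlet is well above it, which is the situation the move to inlet-based measurement is meant to expose.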

Environmental Specifications

The telecommunications industry crafted environmental specifications prior to the release of the first edition of the ASHRAE TC9.9 Thermal Guidelines in 2004. The NEBS (Network Equipment-Building System) environmental specifications lay out physical, electrical, and environmental prerequisites for the exchanges of telephone system carriers. ASHRAE has since expanded its environmental operating parameters to align more closely with the NEBS specifications, including the recommended numerical values. The updated A3 specifications in the 2011 TC9.9 guidelines now parallel the previously established NEBS allowable temperature range of 41-104°F (5-40°C).

References:

https://datacenterfrontier.com/understanding-data-center-temperature-guidelines/

https://avtech.com/articles/4957/updated-look-recommended-data-center-temperature-humidity/

https://www.ashrae.org/about/news/2016/data-center-standard-published-by-ashrae

https://www.chiltrix.com/documents/HP-ASHRAE.pdf