Replacing a centralized electrical distribution system with a distributed power architecture sets up a facility to handle unexpected demand spikes.

The COVID-19 pandemic has radically changed the way people use digital infrastructure. Stay-at-home advisories and social distancing measures have increased the demand for fast, reliable internet connections.

Whole industries have moved vast numbers of employees to remote work. Internet providers are now improving network services that allow operations to continue with minimal disruption. According to an IEA report, between February and mid-April 2020, global internet traffic surged by 40 percent. Microsoft Teams saw a 70 percent increase in use in March alone. As the old year fades away, one thing is clear: getting back to the old normal is no longer possible. It has been replaced by a new normal, in which the cloud is considered essential and digital tools like video conferencing and virtual services like telehealth are considered critical.

Mobile internet and social media have empowered people to become creators of data. Every day, nearly 500 million photos are uploaded to Facebook and Instagram, and roughly 500 thousand hours of video are uploaded to YouTube. More video is uploaded to YouTube in one month than the three major US networks created in over 60 years. These figures give a sense of the enormous amount of data that users generate on a regular basis.

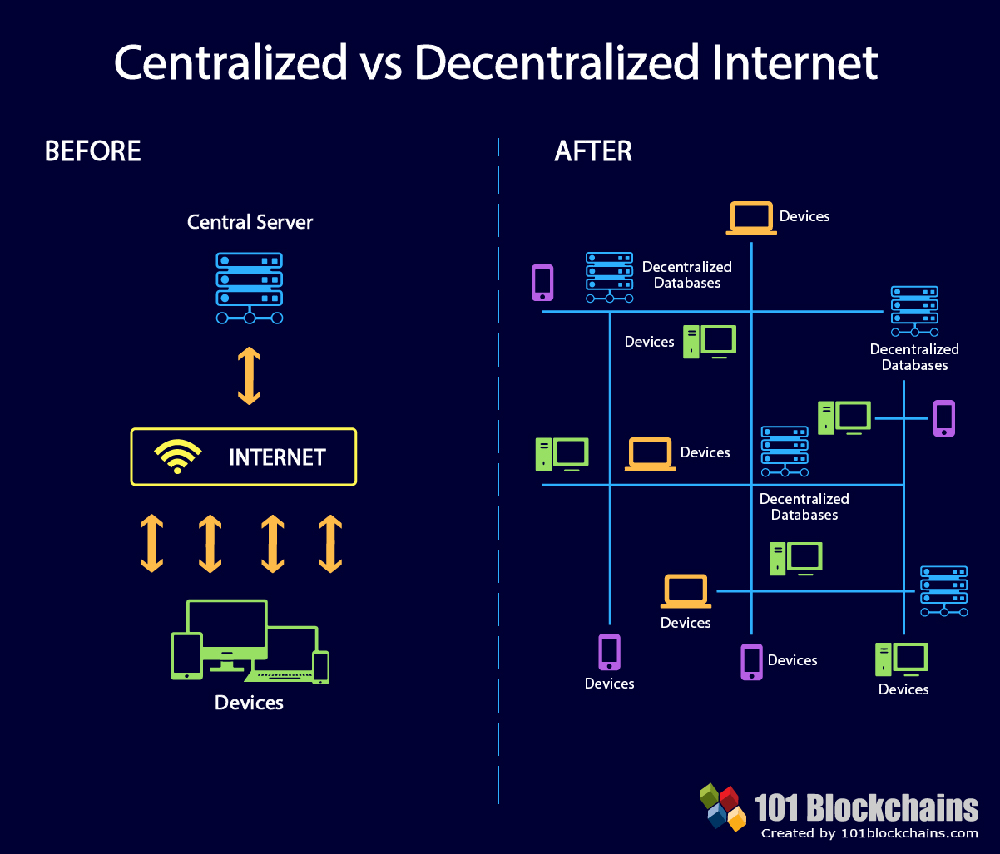

Transferring all the data generated at the edge to the central cloud, processing and analyzing it on servers, and then transporting it back to edge devices is not feasible. Centralized cloud computing has two significant limitations in meeting the demands of a connected world: bandwidth and latency.

With a central cloud, bandwidth will be the choke point for the growth of IoT. Even if network capacity increases to cope with the data, remote processing in the central cloud is still held back by latency in the long-haul transmission of data. The hyper-connected world clearly needs a new computing model.
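The latency limit can be made concrete with a rough estimate. The sketch below computes only the minimum round-trip propagation delay over optical fiber, assuming signals travel at about two thirds of the speed of light in vacuum. The site labels and distances are illustrative assumptions, not figures from this article, and real latency would be higher once routing, queuing, and processing are included.

```python
# Rough lower bound on network latency from propagation delay alone.
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum
FIBER_VELOCITY_FACTOR = 2 / 3      # assumed typical value for optical fiber


def round_trip_ms(distance_km: float) -> float:
    """Minimum round-trip propagation delay over fiber, in milliseconds."""
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    one_way_s = distance_km / (SPEED_OF_LIGHT_KM_S * FIBER_VELOCITY_FACTOR)
    return 2 * one_way_s * 1000


if __name__ == "__main__":
    # Hypothetical distances between a device and the place its data is processed.
    for label, km in [("edge site", 50), ("regional cloud", 1500), ("distant cloud", 8000)]:
        print(f"{label:>15}: {round_trip_ms(km):6.2f} ms")
```

Adding bandwidth does not change these numbers, which is why the distance to the central cloud remains a constraint even on a well-provisioned network.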

This digital transformation poses a broader set of challenges for data center operations.

- How can data centers maintain resiliency, efficiency, and reliability amid the ever-growing demand for cloud-based, business-critical applications?

- How can data centers ensure their facilities are well prepared to scale operations in response to rapidly changing and continuously increasing capacity demands?

Facing these challenges requires that data center operators address the critical role power architectures play as enabling or limiting factors for expanding data center capacity.

Maximum Capacity: The Limitations of Centralized Power Architecture

There are a few main elements that typically limit data center expansion:

- physical space

- power system capacity

- cooling capacity

A more in-depth look reveals that whether or not power acts as a constraint depends on the type of distribution architecture a data center employs.

For most data centers, a centralized power architecture is the driving force of the facility. In this arrangement, a centralized uninterruptible power supply (UPS) system converts AC power from the utility grid to DC. The power then passes through a series of conversions that step it down to the levels the computing equipment needs. In the event of a significant outage, such as a utility failure, backup power is distributed from battery banks at the central location to critical equipment across the data center.

Typical centralized distribution of power in a data center

Most data centers use such centralized power infrastructures, but these are not always reliable, and they are hard to scale. When a facility must grow to handle increased processing needs, there may not be enough space in the power room to add more electrical equipment.

Centralized UPS power and backup capacity are sized to support a data center's added load based on predictions made at the earliest facility design stages. However, because computing and power equipment are not installed all at once, especially in colocation data centers, actual capacity limits may differ from what was expected.

This also presents problems as data centers shift to higher-capacity and higher-density networking equipment to meet the increasing computing needs.

With that in mind, data centers that depend solely on a centralized UPS architecture are in danger of exhausting their power capacity. They may be limiting their ability to scale rapidly to meet future demands.

Beyond Capacity: The Benefits of a Decentralized Power Architecture

How can data centers overcome power capacity limitations and make sure that their infrastructure can respond to increasing demand while still maintaining maximum uptime and resiliency? The solution is to adopt a decentralized DC power architecture.

In contrast to a centralized UPS distribution, backup power in a decentralized system is spread evenly throughout the facility and is designed to operate near the critical load equipment. By clustering loads together and placing battery reserves close to the physical computing equipment, decentralized systems limit the reach of any power outage. This improves the reliability of power amid increasing demand, because a fault in one place does not compromise the entire site.

Instead of chaining numerous AC-to-DC conversions, distributed power places smaller batteries and rectifiers directly inside cabinets. Reducing the number of power conversions required to step down utility power to the servers' needs improves efficiency, reliability, and scalability.
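The efficiency argument follows from the fact that conversion stages connected in series multiply their efficiencies, so every stage removed raises the end-to-end figure. The sketch below illustrates this. The stage counts and per-stage efficiencies are hypothetical values chosen only for illustration, not measurements of any real system.

```python
from math import prod


def chain_efficiency(stage_efficiencies):
    """End-to-end efficiency of power conversion stages connected in series."""
    for eff in stage_efficiencies:
        if not 0 < eff <= 1:
            raise ValueError("each stage efficiency must be in (0, 1]")
    return prod(stage_efficiencies)


# Hypothetical per-stage efficiencies (assumptions, not vendor data).
CENTRALIZED_STAGES = [0.96, 0.96, 0.98, 0.94]  # a longer conversion chain
DISTRIBUTED_STAGES = [0.96, 0.94]              # in-cabinet rectifier, then server-level conversion

if __name__ == "__main__":
    print(f"centralized chain: {chain_efficiency(CENTRALIZED_STAGES):.1%}")
    print(f"distributed chain: {chain_efficiency(DISTRIBUTED_STAGES):.1%}")
```

Under these assumed values the shorter chain delivers more of the utility power to the servers. The size of the gap depends entirely on the actual equipment.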

The most critical feature of a decentralized power architecture is that it allows data centers to meet increased demand by distributing equipment-specific resources that can be sized according to need. New cabinets can expand capacity as necessary, while additional rectifiers and battery modules can increase power for servers added to open racks. By using DC power components that connect directly to the AC utility feed, a decentralized power structure allows facilities to make use of stranded white space and maximize infrastructure without placing any additional burden on their existing UPS system.

A distributed DC power structure allows data center operators to add power and load concurrently. Unlike a centralized UPS, which limits how much and where a data center can grow, a decentralized power architecture is designed to scale. This is what data center operators need in a world of rapid, unexpected spikes in customer demand.
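Adding power and load concurrently can be sketched as a per-cabinet sizing calculation: as a cabinet's load grows, the number of rectifier modules is recomputed for that cabinet alone. In the sketch below, the 3 kW module rating, the cabinet loads, and the N+1 redundancy policy are all assumptions made for illustration. The article does not specify a sizing rule.

```python
import math


def modules_needed(load_kw: float, module_kw: float, redundant: int = 1) -> int:
    """Rectifier modules needed to carry load_kw, plus `redundant` spare modules."""
    if load_kw < 0 or module_kw <= 0 or redundant < 0:
        raise ValueError("load must be >= 0, module rating > 0, redundancy >= 0")
    return math.ceil(load_kw / module_kw) + redundant


if __name__ == "__main__":
    MODULE_KW = 3.0  # hypothetical rating of one rectifier module
    # Hypothetical growth of one cabinet's load as servers are added to open racks.
    for load_kw in [4.0, 7.5, 12.0]:
        count = modules_needed(load_kw, MODULE_KW)
        print(f"{load_kw:5.1f} kW load -> {count} modules (N+1)")
```

Each step adds modules only where the new servers are installed, so growth in one cabinet does not require resizing a shared upstream system.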

Preparing for the New Normal

As technology advances, cloud computing is becoming a new paradigm for dynamically provisioning resources to enterprises. It delivers infrastructure and software as a service to consumers on a pay-as-you-go basis. Typical commercial service providers include Amazon, Google, and Microsoft. In cloud computing environments, large-scale data centers contain the essential computing infrastructure.

While it is unclear how long the COVID-19 pandemic will last, it’s clear that there is nothing less than a paradigm shift in how populations worldwide live, work, collaborate, and communicate. The digital transformation of the past eight months has only served to jump-start a broader set of long-term trends already underway, including advancements in AI, automation, and data analytics, as well as the evolution of 5G, IoT, and smart cities.

Data centers face long-term challenges with a new sense of urgency. Now more than ever, data center operators need to redesign the power architectures at the core of their facilities, in the face of what is sure to be an increasing demand on capacity. Introducing a distributed power architecture is an essential step towards developing data center infrastructure with the flexibility to adapt to whatever comes next.

To fully utilize the underlying cloud resources, the provider has to ensure that services are delivered in a way that meets consumer demands. These demands are usually specified in service level agreements (SLAs), while consumers are kept isolated from the underlying physical infrastructure.

The challenge here is to balance the allocation of power resources and make appropriate decisions, since the workloads of different applications and services fluctuate considerably over time. Decentralizing the cloud could therefore give service providers an opportunity to address their high-performance concerns.

Conclusions:

The pandemic has accelerated the need for a shift to transform tens of billions of devices from a challenge to an opportunity, releasing the power of computing devices at the edge. A practical solution is to build a fully decentralized architecture where every computing device is a cloud server. Edge devices process data locally, communicate with other devices directly, and share resources with other devices to remove the stress on central cloud computing resources. This architecture is faster, more efficient, and more scalable. A decentralized architecture is more private since it minimizes central trust entities and is more cost-efficient since it leverages unused computing resources at the edge.

A decentralized resource management approach can be designed for data centers that use virtual machines to host many third-party applications. Such an approach defines and describes the system models in detail. The decentralized power migration approach considers both load balancing and energy cost savings, which it achieves by putting some underutilized nodes into a sleep state. Performance evaluation results from simulation experiments illustrate that the approach can achieve better load balancing and lower power consumption than other strategies.
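The idea of combining load balancing with putting underutilized nodes to sleep can be sketched as follows. This is a minimal illustration and not the approach evaluated in the simulation experiments mentioned above. The thresholds `LOW` and `HIGH`, the `Node` class, and the greedy placement rule are all hypothetical. For readability the sketch runs as a single loop, whereas in a decentralized design each node would make the same decision locally using load information from its peers.

```python
from dataclasses import dataclass, field

LOW, HIGH = 0.25, 0.80  # assumed utilization thresholds (fractions of node capacity)


@dataclass
class Node:
    name: str
    vms: dict[str, float] = field(default_factory=dict)  # VM name -> share of capacity
    asleep: bool = False

    @property
    def load(self) -> float:
        return sum(self.vms.values())


def consolidate(nodes: list[Node]) -> None:
    """Drain underutilized nodes into peers with headroom, then put them to sleep."""
    for src in sorted(nodes, key=lambda n: n.load):
        if src.asleep:
            continue
        if not src.vms:
            src.asleep = True  # nothing to host, so the node can sleep right away
            continue
        if src.load >= LOW:
            continue
        # Plan every move first: the node is drained only if all of its VMs fit elsewhere.
        planned: dict[str, Node] = {}
        added: dict[str, float] = {}  # extra load already planned per target node
        for vm, demand in sorted(src.vms.items(), key=lambda kv: -kv[1]):
            targets = [
                t for t in nodes
                if t is not src and not t.asleep
                and t.load + added.get(t.name, 0.0) + demand <= HIGH
            ]
            if not targets:
                break  # at least one VM cannot move, so leave this node running
            # Choosing the least-loaded target keeps the remaining nodes balanced.
            best = min(targets, key=lambda t: t.load + added.get(t.name, 0.0))
            planned[vm] = best
            added[best.name] = added.get(best.name, 0.0) + demand
        else:
            for vm, target in planned.items():
                target.vms[vm] = src.vms.pop(vm)
            src.asleep = True


if __name__ == "__main__":
    cluster = [
        Node("a", {"vm1": 0.10}),
        Node("b", {"vm2": 0.50}),
        Node("c", {"vm3": 0.60, "vm4": 0.15}),
    ]
    consolidate(cluster)
    for node in cluster:
        state = "asleep" if node.asleep else f"load {node.load:.2f}"
        print(node.name, state, sorted(node.vms))
```

Planning all moves before committing any of them avoids leaving a node half-drained, which would add migration cost without saving any energy.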