What is a data center digital twin? Physics-based digital twins are well established in the manufacturing and aerospace industries. Are they the future of facilities planning? Does your data center need one?

Data centers are built to suit specific business needs at a given time, but these needs rarely stay the same. Business objectives shift, technology advances, and new legal compliance frameworks are put in place, all at what seems to be a constant rate. CIOs and CTOs must adjust and react to changing requirements in a variety of ways, so even though the data center’s mechanical and engineering architecture may not change significantly over its lifetime, the IT configuration is constantly changing.

Owners and operators are consequently forced to make changes to a live environment, frequently without being able to foresee how the facility will respond. Making the wrong choice in this situation could impede business operations or, in the worst case, cause the facility to fail. Fortunately, it is possible to evaluate any proposed modifications in a safe setting before they are put into effect.

Enter the digital twin

In the manufacturing and aerospace sectors, the concept of a “digital twin” is well established. According to a Gartner survey, 48 percent of firms implementing IoT said they would be using digital twins by the end of 2018. But what exactly is a digital twin, and what advantages might it offer the data center sector?

A digital twin is a dynamic digital representation of a physical object, connected to it through measurable data such as temperature, pressure, and vibration. Operators and engineers can learn about the object’s performance from this digital duplicate, which updates and ages as the original does.
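To make the definition concrete, here is a minimal Python sketch of a twin that is kept up to date by measurements from its physical counterpart. It is purely illustrative: the `RackTwin` class, its method names, and the 27 °C threshold are assumptions made for this example and do not come from any vendor's product.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class RackTwin:
    """Hypothetical digital stand-in for one rack; all names are illustrative."""
    rack_id: str
    inlet_limit_c: float = 27.0  # assumed alert threshold, not from the article
    history: list = field(default_factory=list)

    def ingest(self, inlet_temp_c: float) -> None:
        """Update the twin with a new measurement from the physical rack."""
        self.history.append(inlet_temp_c)

    def rolling_mean(self, window: int = 3) -> float:
        """Mean of the most recent `window` readings."""
        return mean(self.history[-window:])

    def is_trending_hot(self, window: int = 3) -> bool:
        """Flag the rack once the recent average exceeds the limit."""
        return len(self.history) >= window and self.rolling_mean(window) > self.inlet_limit_c


# Made-up readings, standing in for a live sensor feed.
twin = RackTwin("rack-A1")
for reading in [24.0, 26.5, 27.5, 28.0, 28.5]:
    twin.ingest(reading)
print(twin.is_trending_hot())
```

A production twin would track many more variables and couple them to a physical or statistical model, but the pattern is the same: measurements flow in, the twin's state changes, and questions are asked of the twin rather than of the live equipment.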

The case of an airplane engine serves as a suitable illustration. A General Electric engine will send almost two terabytes of data to a digital twin in a General Electric data center during a journey between Frankfurt and London. A maintenance team is sent to the plane’s destination airport with the necessary parts to execute a repair if the digital twin notices any trends in software data that point to a probable flaw. The design team is also given information on actual operating conditions, which is used to inform simulations and subsequently enhance designs for further iterations.

According to Boeing CEO Dennis Muilenburg, data-driven digital twins like this will be “the biggest driver of production efficiency improvements for the world’s largest airplane maker over the next decade.” Digital twins can have a similar impact on data centers, although the type of model used is slightly different.

How digital twins can improve data center operations

Singapore’s Red Dot Analytics has developed an AI-driven digital twin platform that enables data center operators to simulate their operations in order to manage, among other things, their carbon footprint and energy usage.

Although the idea of using artificial intelligence (AI) to control the energy use and carbon emissions of data centers has been discussed for years, its full potential has been constrained by issues with data availability and quality.

For starters, data on data center operations is sometimes not comprehensive enough and covers only a narrow, uniform range of operating conditions. Additionally, there may not be sufficient data to train machine learning models.

Even if the data issues are handled, data center operators are typically risk-averse: they worry that something could go wrong after applying recommendations from AI engines that operate as black boxes.

Red Dot Analytics (RDA), a deep tech company spun off from Singapore’s Nanyang Technological University (NTU), has been addressing those challenges with a digital twin platform that lets data center operators simulate actions aimed at improving operational and energy efficiency and understand the costs and risks of doing so. The data produced by the digital twin can also be used to train machine learning models.

But creating a digital twin of a data center, complete with all of its physical assets, underlying processes, and activities, is not a simple effort, according to Wen Yonggang, the head scientist at RDA and professor at NTU.

Wen noted that most people tend to think of digital twins as just digital representations of actual physical data center infrastructure. “Different people have different interpretations of what a digital twin should be, and that has been a major challenge when working with industry partners, customers, and stakeholders,” Wen said in an interview with Computer Weekly.

That, according to Wen, is only the top layer of RDA’s digital twin platform, which can also overlay operational data for statistical analysis and diagnostics, and perform predictive and prescriptive functions that turn knowledge into recommendations for data center operators.

Data center operators can reportedly save up to 40% on energy expenditures by using RDA’s “cognitive digital twin” apps, without needing to upgrade their hardware. The cost reductions can be significant in Singapore, where cooling systems account for the majority of energy use in data centers.

Wen stated that some data center operators work with RDA to better understand their carbon emissions and find opportunities to lower their carbon footprint, while others use its digital twin platform for asset management to reduce equipment downtime.

“We’re building different use cases along the way when we work with customers, but the overall objective is to use digital twins as a decision support platform to turn the usual best practices into a more scientific way of conducting datacentre operations,” Wen said.

RDA currently counts major hyperscale cloud providers, co-location data center providers, and enterprises in Asia-Pacific as clients, and expects more customers to come on board in the next quarter. RDA provides a common backend engine for all customers but creates unique front-end interfaces to link its platform with each customer’s workflows.

However, because of legacy data-collection methods and numerous disparate data sources, some data center operators might not have enough data to ingest into a digital twin platform, Wen noted.

But the advantage of digital twins, Wen continued, is that a twin can be established with a modest amount of data and gradually improved, so that at specific points data can be gathered from the digital twin itself to train the model.

Regarding upcoming improvements, Wen stated that RDA wants to improve the security architecture of its digital twin platform and make it simpler for users to build their services and apps on top of the platform using low-code and no-code development.

“We can empower operators to do some things themselves, rather than rely on us or their service providers,” Wen said. “Additionally, we want to build more AI capabilities into our backend system and take advantage of machine learning techniques like transfer learning to make our models more robust.”

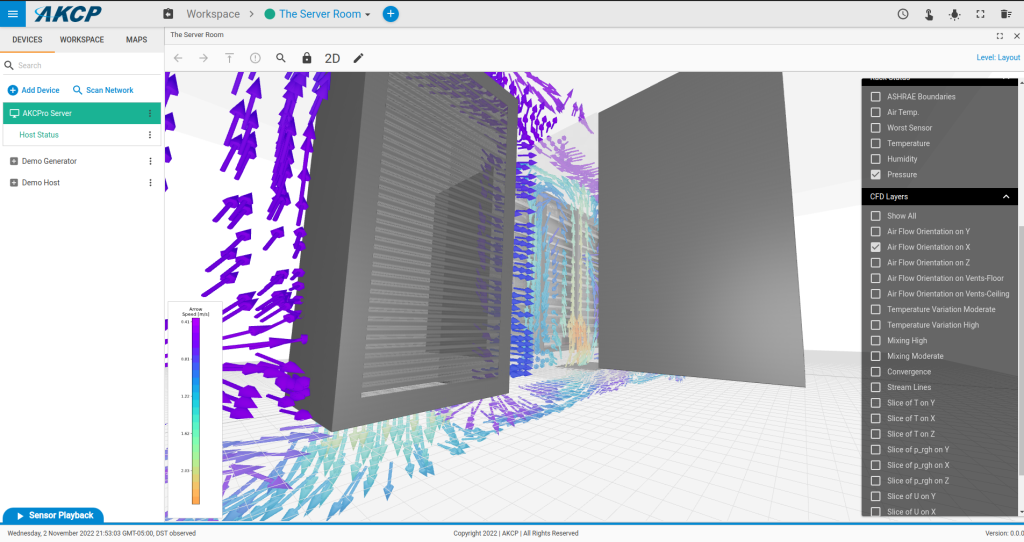

AKCP Digital Twin for the Data Center

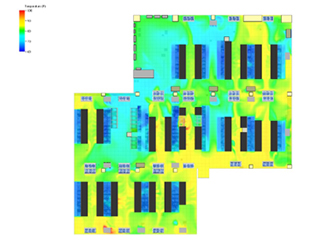

AKCP sensors, such as cabinet thermal maps, power meters, and water leak sensors, are deployed in a data center to collect data on environmental conditions. Traditionally, this data was used only to raise alerts when a problem occurred. Increasingly, in the modern data center, the metrics these sensors collect can also be used to build a digital twin of the facility. For example, with AKCP’s sensorCFD, the sensor data is used to constrain a computational fluid dynamics (CFD) model of the data center airflow. This differs from traditional CFD, where arbitrary figures are used as inputs for the rack kW and temperatures. Using live sensor data produces a digital twin that gives a real-time picture of your data center’s thermal performance.
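The difference between assumed and measured inputs can be illustrated with a very small calculation. The Python sketch below is not how sensorCFD or any CFD solver works internally; it only applies the steady-state sensible heat balance, airflow = power / (air density × specific heat × temperature rise), to show how the cooling airflow a rack needs changes when design figures are replaced by sensor readings. The function name, the air properties, and all of the numbers are assumptions chosen for illustration.

```python
AIR_DENSITY = 1.2         # kg/m^3, approximate value near room temperature
AIR_SPECIFIC_HEAT = 1005  # J/(kg*K), approximate value for dry air


def required_airflow_m3s(power_kw: float, inlet_c: float, outlet_c: float) -> float:
    """Airflow needed to carry away `power_kw` of heat at the given temperature rise."""
    delta_t = outlet_c - inlet_c
    if delta_t <= 0:
        raise ValueError("outlet temperature must exceed inlet temperature")
    return power_kw * 1000 / (AIR_DENSITY * AIR_SPECIFIC_HEAT * delta_t)


# Design-time assumption: 5 kW rack, 22 C inlet, 34 C outlet.
assumed = required_airflow_m3s(5.0, 22.0, 34.0)

# Hypothetical live readings from a power meter and thermal map sensor.
measured = required_airflow_m3s(6.2, 24.5, 33.0)

print(f"assumed:  {assumed:.3f} m^3/s")
print(f"measured: {measured:.3f} m^3/s")
```

With these made-up figures the measured case calls for noticeably more airflow than the design assumption. A model fed only with nominal values would miss that kind of gap.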