The trend in data centers has been toward higher power density and more equipment to cope with worldwide demand. This results in thermal stress on installed equipment, inefficient cooling systems and increased overhead. In response, the industry recognized the need to agree on common standards and best practices. In 2002 ASHRAE created a new technical group whose aim was to bridge the gap between equipment manufacturers and data center designers. Its publication was called “Thermal Guidelines for Data Processing Environments”.

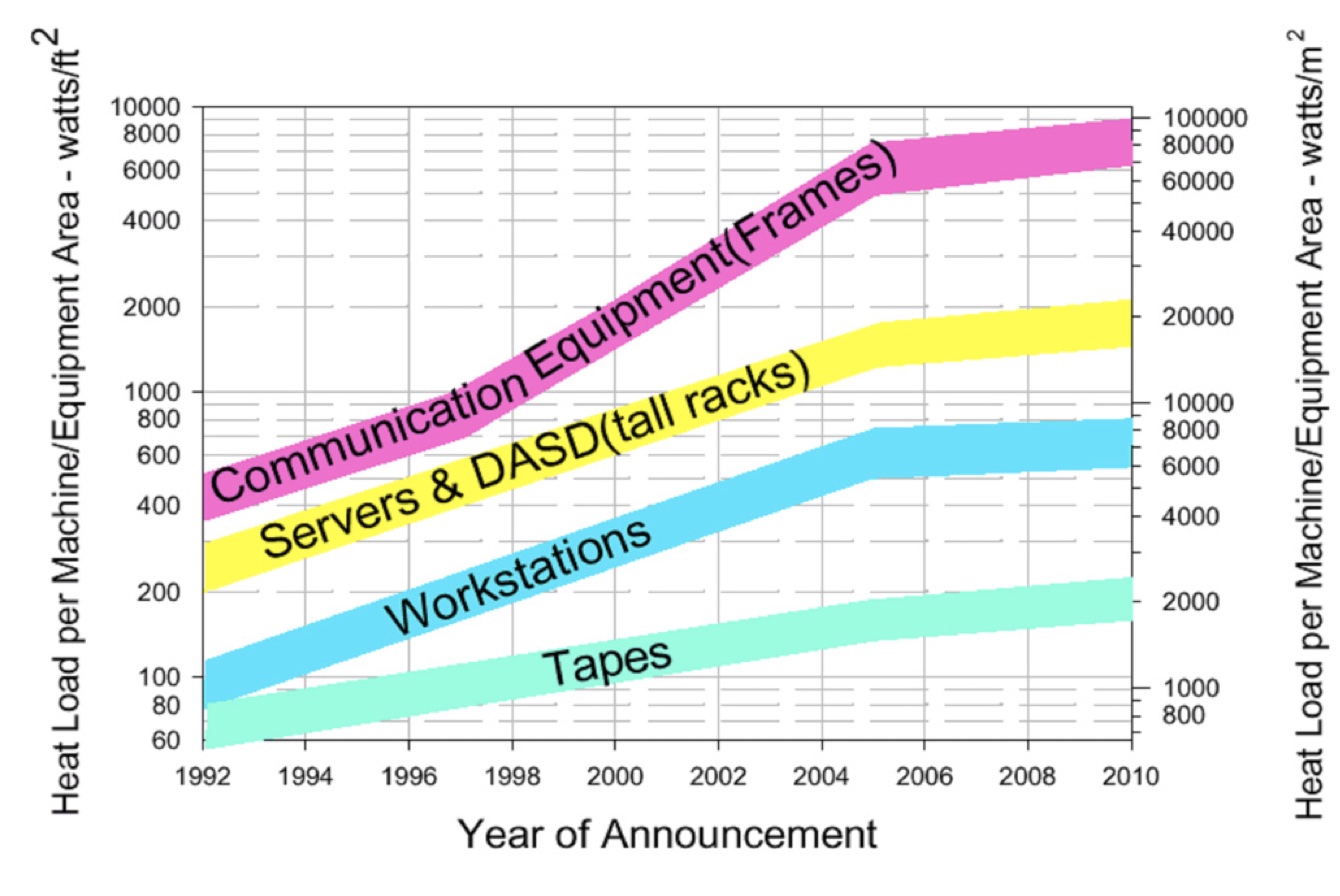

Between 2000 and 2005 the power consumption of semiconductors increased by 60%. Because almost all of the electrical power a chip draws is dissipated as heat, the heat load rose sharply over the same period. As a result, significantly more emphasis is being placed on the design of the data center, its cooling systems and the delivery of power. There is also a move towards packing servers more densely within racks, which makes more efficient use of data center space but increases the power needed per rack.

Source: http://www.ancis.us/images/AN-04-9-1.pdf

In 2010 server power densities reached 20,000 W/m². This was driven not only by improvements in semiconductor technology but also by the rising cost of data center infrastructure, which pushed IT facility managers to get the maximum output from their data center space. As a result, much more attention was paid to cooling and airflow in and around the data center floor, cabinets and servers. Server air inlets and exhausts were positioned carefully so that hot air from one server was not drawn into the inlet of another.

Source: http://www.ancis.us/images/AN-04-9-1.pdf

Evolution of Data Center Environmental Standards

In the late 1970s and early 1980s, planning a data center primarily involved checking that the power was “clean”, that it had a properly isolated ground, and whether it would be interrupted should the facility lose main power. Cooling was rarely considered except in isolated cases. Some companies explored alternatives such as liquid cooling, but the typical method was simply forced airflow from big, noisy fans. As time went on, the cost of power in some countries became very high, so more emphasis was placed on providing only enough power for a given system configuration.

As the 1990s progressed, power density in racks became a much bigger issue, and ensuring adequate cooling became a priority. Previously, simple power factor calculations were enough to determine the amount of cooling necessary, but as rack densities increased this was no longer the case. It became important to consider the pattern of airflow around the data center, racks and equipment. This required more data, such as pressure drop, air velocities and flow resistance, to be available at the design stage.

By the early 2000s, as explored above, power densities were still increasing. Thermal modeling was viewed as a potential answer for optimizing the cooling of the data center environment, but it required properly designed thermal models of the equipment from the manufacturers. Without this data, data center operators would typically gather temperature data after construction and make adjustments based on it.

The Thermal Management Consortium

By 1998 a number of equipment manufacturers had formed an alliance to work together on common issues related to the thermal management of data centers. It included industry giants such as Nokia, Cisco, Sun Microsystems, Motorola, IBM, Intel, Compaq, Dell and Hewlett Packard. They collaborated with the Uptime Institute in 2000 and formed three subgroups covering:

A. Rack airflow direction

B. Reporting of accurate equipment heat-loads

C. Common environmental specifications

These groups worked on developing the structure of documents and guidelines for the industry. This continued until 2002, when ASHRAE took over the effort. ASHRAE published the “Thermal Guidelines for Data Processing Environments” in 2003.

Data Center Environmental Specifications

The primary purpose of monitoring and controlling environmental conditions in the data center is to maintain the correct temperature and humidity for reliable operation of the equipment in the racks. For example, a manufacturer may recommend that in a 42U rack the inlet air temperature at the front be kept between 10°C and 32°C over the full 2 m elevation. This can be a challenge, especially with multiple racks close together, each consuming 12.5 kW when fully loaded.
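As a rough illustration of why this is a challenge, the sketch below estimates the airflow needed to carry away the heat from one 12.5 kW rack and checks inlet readings against the 10°C to 32°C range from the example above. It uses the standard sensible-heat relation, airflow = heat load / (air density × specific heat × temperature rise). The air properties, the 12 K temperature rise across the rack, the sample readings and all function names are assumptions made for illustration, not figures from the guidelines.

```python
# Assumed air properties (roughly dry air near 20 °C at sea level).
AIR_DENSITY_KG_M3 = 1.2
AIR_SPECIFIC_HEAT_J_KG_K = 1005.0


def required_airflow_m3_s(heat_load_w: float, delta_t_k: float) -> float:
    """Volumetric airflow needed to remove heat_load_w at a given air temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_load_w / (AIR_DENSITY_KG_M3 * AIR_SPECIFIC_HEAT_J_KG_K * delta_t_k)


def inlets_out_of_range(readings, low_c=10.0, high_c=32.0):
    """Return the (height_m, temp_c) readings that fall outside the recommended range."""
    return [(h, t) for h, t in readings if not low_c <= t <= high_c]


# Hypothetical rack: 12.5 kW load, assumed 12 K rise from inlet to exhaust.
airflow = required_airflow_m3_s(12_500.0, 12.0)
print(f"Required airflow: {airflow:.2f} m^3/s")

# Hypothetical inlet readings at three heights up the front of the rack.
readings = [(0.3, 18.5), (1.0, 24.0), (1.9, 33.5)]
print("Out of range:", inlets_out_of_range(readings))
```

With these assumed values the rack needs about 0.86 m³/s of air, and the reading at 1.9 m is flagged as above the range. A smaller allowed temperature rise increases the required airflow proportionally.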

ASHRAE developed four classes for standardizing equipment and its cooling requirements. Classes 1 and 2 cover servers in air-conditioned environments, class 3 covers less controlled environments such as office workstations, and class 4 covers portable equipment, point-of-sale equipment and similar devices that have no environmental controls.

Layout

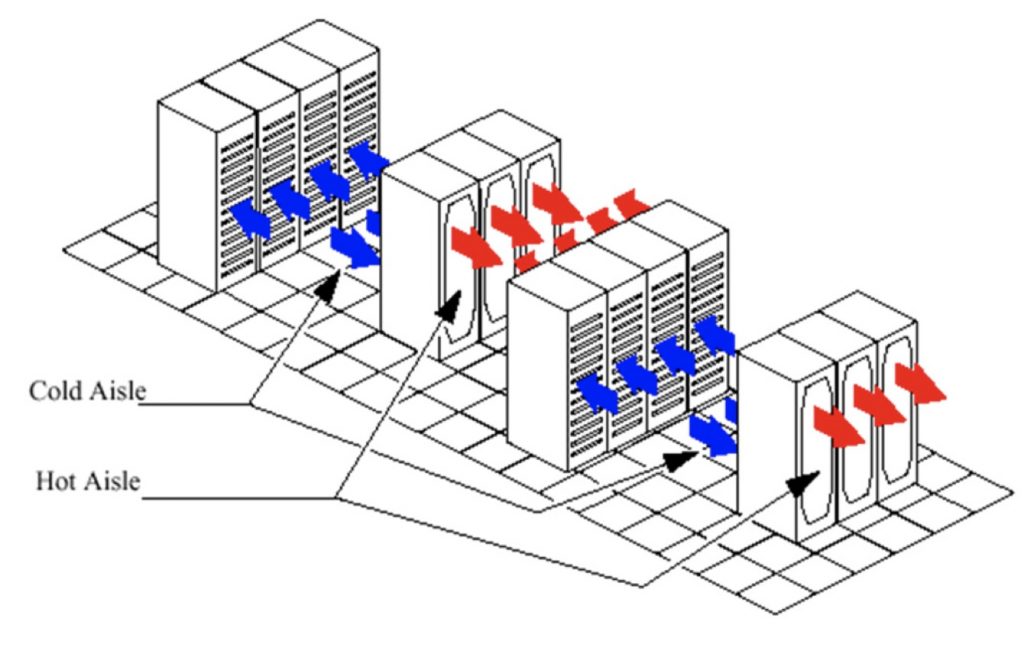

Data center designers must consider the airflow within the cabinet and around the whole facility. This is not only to ensure sufficient cooling capacity but also to make operation efficient and lower the power consumed by cooling systems. The power usage effectiveness (PUE) number, the ratio of total facility power to the power delivered to the IT equipment, can be used as an indicator of how much overhead, cooling included, the facility adds on top of the IT load.
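A minimal sketch of the PUE calculation follows, assuming the commonly used definition of total facility power divided by IT equipment power. The function name and the sample power figures are hypothetical and chosen only to show the arithmetic.

```python
def power_usage_effectiveness(total_facility_kw: float, it_equipment_kw: float) -> float:
    """PUE = total facility power / IT equipment power (1.0 would mean no overhead)."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    if total_facility_kw < it_equipment_kw:
        raise ValueError("total facility power cannot be less than IT power")
    return total_facility_kw / it_equipment_kw


# Hypothetical facility: 1,000 kW of IT load plus 800 kW of cooling,
# power distribution losses, lighting and other overhead.
it_load_kw = 1000.0
overhead_kw = 800.0
pue = power_usage_effectiveness(it_load_kw + overhead_kw, it_load_kw)
print(f"PUE: {pue:.2f}")
```

In this assumed example the PUE is 1.8, meaning 0.8 W of overhead for every watt delivered to IT equipment. Values closer to 1.0 indicate that less of the facility's power goes to cooling and other overhead.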

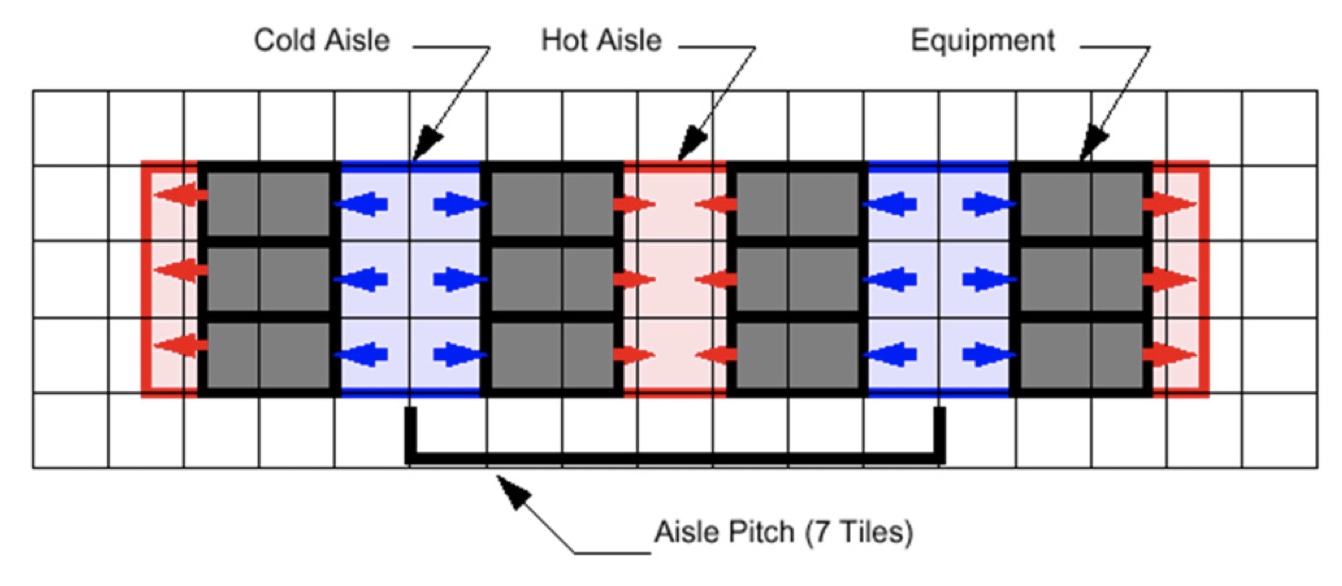

Also, the size of floor tiles for raised access flooring was standardized, so aisles could be standard widths and cabinets could fit the width of a floor tile. When manufacturers follow these standards, hot/cold aisle containment systems are easier to set up, the data center floor area is used more fully and cooling efficiency improves.

Source: http://www.ancis.us/images/AN-04-9-1.pdf