In the digital age, data centers play a pivotal role in keeping modern technology running smoothly. As demand for internet services continues to surge, understanding the industry's energy consumption trends and adopting efficient strategies becomes paramount. This article examines the energy consumption patterns of data centers, explores efficiency challenges, and highlights key strategies to optimize energy use and reduce environmental impact.

Data Center Consumption Trends:

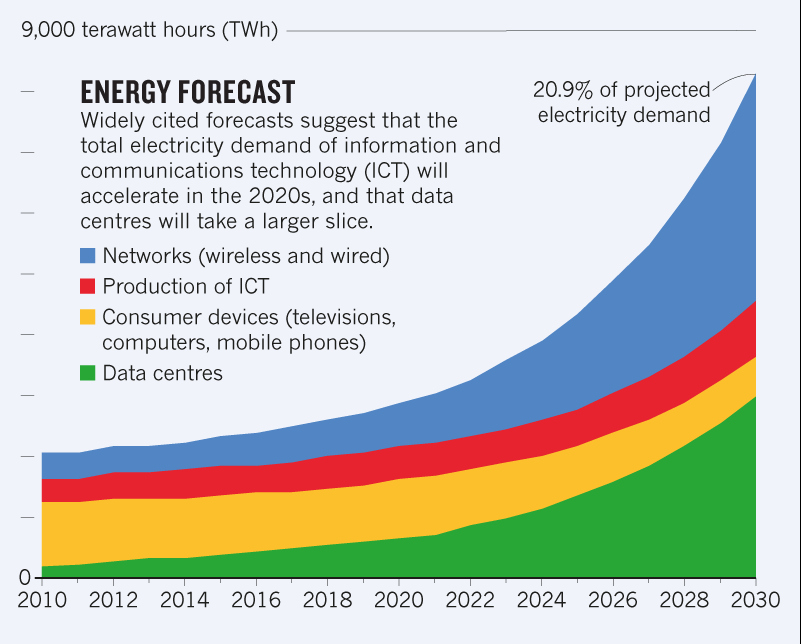

The data center industry has grown substantially over the past decade, contributing significantly to global energy consumption. In 2020, data centers consumed an estimated 196 to 400 terawatt-hours (TWh), equivalent to 1% to 2% of the world's annual electricity consumption. The European Union alone required 104 TWh for data centers in the same year. This demand is expected to keep rising as the industry expands.
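The 1% to 2% share can be sanity-checked by backing out the world electricity total it implies. This minimal sketch pairs the low estimate (196 TWh) with 1% and the high estimate (400 TWh) with 2%; that pairing is an assumption for illustration, and the helper name `implied_total_twh` is hypothetical.

```python
# Back out the total consumption implied by "X TWh is a given share of the total".
# Assumption: the low estimate pairs with 1% and the high estimate with 2%.

def implied_total_twh(data_center_twh: float, share: float) -> float:
    """Return the total (TWh) of which data_center_twh is the given fractional share."""
    if share <= 0:
        raise ValueError("share must be positive")
    return data_center_twh / share


low = implied_total_twh(196, 0.01)   # low estimate at a 1% share
high = implied_total_twh(400, 0.02)  # high estimate at a 2% share
print(f"implied world electricity use: {low:,.0f} to {high:,.0f} TWh")
```

Both ends land near 20,000 TWh, so the TWh range and the percentage range describe the same global electricity total.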

Diverse Facility Sizes and Connectivity:

Data centers vary widely in size, from compact 100-square-foot setups to hyperscale facilities of 400,000 square feet housing thousands of cabinets. Together they held an estimated 18 million servers worldwide in 2020, up from 11 million in 2006. Server energy efficiency depends on factors such as dynamic range (how far a server's power draw falls when its workload drops) and hardware design, and it strongly influences total power consumption.

Efficiency Challenges and Server Utilization:

Despite advances in server hardware and dynamic range, most servers operate well below their maximum capacity. Even the best-utilized servers typically run at only around 50% of capacity, so much of the energy they draw does no useful work. In the United States alone, servers directly used approximately 40,000 GWh of electricity in 2020, and roughly half of that energy was consumed by idle servers.
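Why low utilization wastes energy can be sketched with a simple linear power model, a common simplification rather than a measured curve: a server draws a fixed idle power plus a share that grows with load. The 100 W idle and 200 W peak figures below are hypothetical, and the helper names are made up for this example.

```python
# Minimal sketch, assuming a linear server power model:
#   power = idle draw + (peak draw - idle draw) * utilization
# The wattages are hypothetical, chosen only to illustrate the effect.

def server_power_watts(utilization: float, idle_watts: float, peak_watts: float) -> float:
    """Estimated power draw (W) at a utilization between 0.0 and 1.0."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return idle_watts + (peak_watts - idle_watts) * utilization


def dynamic_range(idle_watts: float, peak_watts: float) -> float:
    """Fraction of peak power that scales with load (higher is better)."""
    return (peak_watts - idle_watts) / peak_watts


IDLE_W, PEAK_W = 100.0, 200.0  # hypothetical identical servers

# The same total work handled two ways:
spread_out = 2 * server_power_watts(0.25, IDLE_W, PEAK_W)  # two servers at 25% each
consolidated = server_power_watts(0.50, IDLE_W, PEAK_W)    # one server at 50%, the other switched off

print(f"dynamic range: {dynamic_range(IDLE_W, PEAK_W):.0%}")
print(f"two servers at 25%: {spread_out:.0f} W")
print(f"one server at 50%:  {consolidated:.0f} W")
```

In this model, consolidating the work onto one server and powering the other off cuts the draw from 250 W to 150 W. The smaller a server's dynamic range, the larger the penalty for leaving it lightly loaded.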

Optimizing Storage Efficiency:

As data generation continues to surge, storage systems face evolving energy efficiency challenges. Both hard disk drives (HDDs) and solid-state drives (SSDs) have become more efficient, with SSDs in particular delivering more capacity per watt. In the United States, storage drive electricity consumption was projected to surpass 8,000 GWh in 2020, underscoring the need for efficient storage solutions.
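Annual energy totals are easier to picture as average continuous power. This short sketch divides GWh per year by the hours in a year (8,760, ignoring leap years) for the 8,000 GWh storage figure and, for comparison, the 40,000 GWh server figure from the previous section; the helper name is hypothetical.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 h; ignores leap years


def average_power_gw(annual_gwh: float) -> float:
    """Convert an annual energy total (GWh/year) to average continuous power (GW)."""
    return annual_gwh / HOURS_PER_YEAR


storage_gw = average_power_gw(8_000)   # US storage drives, 2020 projection
servers_gw = average_power_gw(40_000)  # US direct server use, 2020
print(f"storage: ~{storage_gw * 1000:.0f} MW continuous")
print(f"servers: ~{servers_gw:.1f} GW continuous")
```

Storage alone corresponds to roughly 0.9 GW running around the clock, against about 4.6 GW for servers.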

Network Energy Consumption and Challenges:

Network energy consumption is difficult to pin down, and published estimates have varied widely over the years. Much of the disagreement comes from scope: some estimates include mobile networks and the connectivity inside data centers, while others do not. As data demands rise, network infrastructure must also balance low latency, which favors facilities close to users, against access to renewable energy sources, which are not always located nearby.

Infrastructure and Energy Efficiency:

Data center efficiency depends on infrastructure as well as IT equipment: cooling, power distribution, lighting, and other systems all draw energy. Power Usage Effectiveness (PUE), the ratio of total facility energy to IT equipment energy, is a widely used metric for infrastructure efficiency; a value of 1.0 would mean every kilowatt-hour goes to IT equipment. However, PUE alone does not account for water use, the IT equipment lifecycle, or other environmental factors. Metrics such as Water Usage Effectiveness (WUE) and Life Cycle Analysis (LCA) provide a more comprehensive view of environmental impact.
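The two ratios can be written out directly. This sketch uses the standard definitions (PUE = total facility energy / IT equipment energy; WUE = annual site water use in liters / IT equipment energy in kWh). The annual figures for the single facility are hypothetical and chosen only for illustration.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy (1.0 is ideal)."""
    return total_facility_kwh / it_equipment_kwh


def wue(site_water_liters: float, it_equipment_kwh: float) -> float:
    """Water Usage Effectiveness: annual site water use (L) / IT equipment energy (kWh)."""
    return site_water_liters / it_equipment_kwh


# Hypothetical annual figures for one facility (illustrative only).
it_kwh = 1_000_000
total_kwh = 1_500_000
water_l = 1_800_000

facility_pue = pue(total_kwh, it_kwh)
overhead_share = 1 - 1 / facility_pue  # fraction of facility energy not reaching IT equipment
print(f"PUE = {facility_pue:.2f}, overhead = {overhead_share:.0%}")
print(f"WUE = {wue(water_l, it_kwh):.2f} L/kWh")
```

A PUE of 1.5 means a third of the facility's energy goes to cooling, power conversion, lighting, and other overhead rather than to IT equipment. Neither ratio says anything about embodied emissions, which is where life-cycle methods come in.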

The Green Future of Cloud Computing:

Cloud providers play an essential role in shaping the industry's environmental impact. While cloud computing can bring efficiency benefits, transparency about providers' carbon footprints remains a concern. Given the rapid growth of hyperscale facilities, cloud vendors must be forthcoming about their environmental impact.

The Future: Efficiency Challenges and Strategies:

Efficiency gains have been achieved over past decades, but challenges remain. Data center energy usage is projected to quadruple by 2030, which will require innovative solutions. Strategies such as fuel cells, direct current (DC) power architecture, and renewable energy adoption can improve efficiency and reduce emissions. Monitoring tools such as AKCPro Server can provide real-time insight into energy use and enable targeted optimizations.
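A fourfold increase implies a steep yearly growth rate. Assuming the projection is measured from a 2020 baseline (the base year is not stated here), the compound annual rate is `4 ** (1 / 10) - 1`. The helper name below is hypothetical.

```python
def implied_annual_growth(multiple: float, years: int) -> float:
    """Constant yearly growth rate that multiplies a quantity by `multiple` over `years`."""
    if multiple <= 0 or years <= 0:
        raise ValueError("multiple and years must be positive")
    return multiple ** (1 / years) - 1


# Assumption: "quadruple by 2030" is measured from a 2020 baseline (10 years).
rate = implied_annual_growth(4, 2030 - 2020)
print(f"~{rate:.1%} per year")
```

That works out to roughly 15% growth per year, sustained for a full decade.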

Conclusion:

As the digital era continues to evolve, the data center industry’s energy consumption trends demand attention. Recognizing efficiency challenges and adopting strategic measures are crucial to minimizing environmental impact while meeting growing technological demands. Through collaborative efforts, the industry can forge a sustainable path toward a greener future.