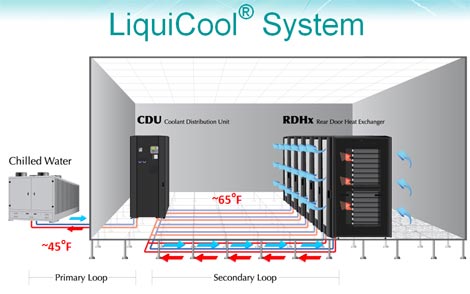

Denmark and Switzerland’s national meteorological services expanded their computing capabilities with supercomputers that have heat exchangers built into the rack. This design is known in the industry as the rear door heat exchanger (RDHx), and it addresses the problems of high-density, high-compute-power racks.

However, you don’t need a supercomputer to justify liquid cooling. Heat exchangers remove heat at the server cabinet and reduce the cooling load on computer room air handling (CRAH) units. Advances in the technology are prompting data center managers who once dismissed them as risky to take a second look.

RDHx cooling systems are used in dense server environments, typically in data centers that draw 20 kW or more per rack. What counts as high density is relative today: training machine learning models requires massive, power-hungry GPU clusters, and Bitcoin mining farms are similarly dense. Both push power density well beyond the typical 3 kW to 6 kW per rack.
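To make the density figures concrete, the sketch below sums per-server power draw into kW per rack and compares the result with the 3 kW to 6 kW typical band and the 20 kW level mentioned above. Since nearly all electrical power drawn by IT equipment ends up as heat, the rack's kW figure is also roughly its heat load. The server counts and wattages are hypothetical, and the function names are illustrative rather than part of any tool.

```python
# Minimal sketch: estimate rack power density from per-server draw.
# Nearly all electrical power drawn by IT equipment is released as heat, so the
# rack's kW figure is also roughly the heat load the cooling system must remove.

TYPICAL_MAX_KW = 6.0       # upper end of the typical 3-6 kW per rack quoted in the text
RDHX_THRESHOLD_KW = 20.0   # density at which the text says RDHx is employed


def rack_density_kw(server_watts: list[float]) -> float:
    """Sum per-server power draw (watts) into rack power density (kW)."""
    return sum(server_watts) / 1000.0


def classify_rack(kw: float) -> str:
    """Bucket a rack by density using the thresholds above."""
    if kw >= RDHX_THRESHOLD_KW:
        return "RDHx candidate"
    if kw > TYPICAL_MAX_KW:
        return "above typical"
    return "typical"


# Hypothetical racks: eight GPU servers at 3,000 W vs. ten 1U servers at 450 W.
gpu_rack = rack_density_kw([3000.0] * 8)
web_rack = rack_density_kw([450.0] * 10)
print(gpu_rack, classify_rack(gpu_rack))  # 24.0 RDHx candidate
print(web_rack, classify_rack(web_rack))  # 4.5 typical
```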

Heat Removal

RDHx systems have a proven record of removing heat from intensive computing workloads, which makes them effective at cutting operational costs.

Run Cool, Run Fast

Air, Water, and Coolant

Advantages of RDHx Cooling

Photo Credit: www.renewableenergyworld.com

RDHx Is Not for Everybody

For high-performance computing platforms, this cooling technique works best. Giant firms like Google, however, are not adopting it, because they do not need such dense infrastructure. In those cases, “good enough” computing is sufficient.

Colocation provider Cosentry cites other reasons for not using RDHx in its facilities. According to Jason Black, former VP of data center services and solutions engineering and currently VP and GM at TierPoint, RDHx systems do not give Cosentry the flexibility it needs when laying out the data center floor.

Lawrence Berkeley National Laboratory (LBNL), by contrast, piped chilled water to its RDHx cooling system underneath its raised floor using flexible tubing with quick-disconnect fittings. Overhead piping would have been another option.

Another issue is security. “Mechanical systems need at least quarterly maintenance,” says Black. The mechanical hall in Cosentry data centers lets technicians do that work without coming into contact with clients’ servers. Because an RDHx mounts the cooling coil on the cabinet itself, servicing it means working at the client’s rack. “These security methods would be nullified by rear door heat exchangers,” Black argues.

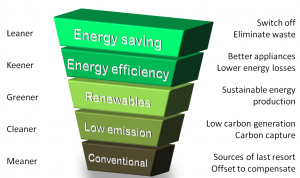

Cooling in the Future

Photo Credit: www.scientific-computing.com

As the industry develops newer, more advanced cooling methods, the feasibility of RDHx for everyday computing operations is becoming less of a discussion topic. In the not-too-distant future, server cooling may be handled at the chip level: manufacturers are developing liquid-cooled chips that remove heat where it is generated, allowing more compact board and server designs.

Checklist

High-performance data centers use rear door heat exchangers to boost performance, increase density, and lower cooling costs, at least until quantum wells and liquid-cooled chips become ubiquitous.

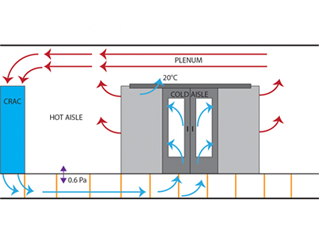

LBNL recommends installing blanking panels in server racks to prevent hot air from damaging components. It also suggests evaluating raised-floor tile patterns to ensure that air is directed where it is needed, and raising the data center setpoint temperature. An energy monitoring and control system is necessary to track the system and make adjustments that improve performance.

When heat exchangers are employed in server cabinets, ensuring hot aisle/cold aisle containment is less necessary, though that configuration may still be beneficial. According to the LBNL advisory, “using an RDHx can substantially lower server outlet temperatures to the point that hot and cold aisles are no longer relevant.” However, in most cases, CRAH units remain in place and are supplemented with RDHx systems.

Check for air gaps after installing RDHx cooling systems; RDHx doors do not always fit the racks as they should. Seal any gaps around cabinet doors with blanking panels to improve efficiency. It also helps to monitor rack exhaust temperatures before and after installing the heat exchangers. Keep an eye on the flow rate through the system to confirm that the RDHx is working and to see how liquid flow rates relate to server temperatures. To avoid condensation, make sure the coolant temperature at each door stays above the dew point of the surrounding air, and inspect the system for leaks regularly.
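The dew point check can be automated from a room temperature and humidity reading. The sketch below uses the Magnus approximation, one common way to estimate dew point; the coefficients (b = 17.62, c = 243.12 °C) are a widely used set, and the 2 °C safety margin is an assumption for illustration, not a vendor or LBNL figure. Function names and readings are hypothetical.

```python
import math

# Magnus-formula coefficients over liquid water (a common parameterization,
# reasonable roughly between -45 °C and 60 °C).
MAGNUS_B = 17.62
MAGNUS_C = 243.12  # °C


def dew_point_c(air_temp_c: float, rel_humidity_pct: float) -> float:
    """Approximate dew point (°C) from dry-bulb temperature and relative humidity."""
    if not 0 < rel_humidity_pct <= 100:
        raise ValueError("relative humidity must be in (0, 100]")
    gamma = math.log(rel_humidity_pct / 100.0) + MAGNUS_B * air_temp_c / (MAGNUS_C + air_temp_c)
    return MAGNUS_C * gamma / (MAGNUS_B - gamma)


def condensation_risk(coolant_supply_c: float, air_temp_c: float,
                      rel_humidity_pct: float, margin_c: float = 2.0) -> bool:
    """True if the coolant at a door is not at least `margin_c` above the dew point.

    The default 2 °C margin is an illustrative assumption.
    """
    return coolant_supply_c < dew_point_c(air_temp_c, rel_humidity_pct) + margin_c


# Hypothetical readings: 25 °C room air at 50 % RH gives a dew point near 13.9 °C.
print(round(dew_point_c(25.0, 50.0), 1))       # 13.9
print(condensation_risk(12.0, 25.0, 50.0))     # True: coolant too cold for the margin
print(condensation_risk(18.0, 25.0, 50.0))     # False
```

Running the check per door, with the humidity reading nearest that door, matches the advice to verify coolant temperature at each door rather than only at the supply.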

Monitoring for RDHx Cooling System

AKCP REAR-DOOR HEAT EXCHANGER WIRELESS MONITORING

A mechanism for monitoring and controlling the overall flow rate supplied to all of the heat exchangers is necessary. This can be a separate flowmeter installed in the flow loop or a flowmeter built into the coolant distribution unit (CDU). Monitoring the coolant temperature is also important, both to confirm that water specifications are being met and to verify that heat removal is optimal.
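Flow rate and coolant temperatures together tell you how much heat the loop is actually carrying, via the sensible-heat relation Q = ṁ · c_p · (T_return − T_supply). The sketch below assumes plain water (about 1.0 kg/L and c_p about 4.18 kJ/(kg·K)); glycol mixtures need their own property values. The function name and the CDU reading are hypothetical.

```python
# Sensible heat carried away by the coolant loop:
#   Q = m_dot * c_p * (T_return - T_supply)
# Defaults assume plain water; glycol mixtures have different density and c_p.


def heat_removed_kw(flow_l_per_min: float, supply_c: float, return_c: float,
                    density_kg_per_l: float = 1.0,
                    cp_kj_per_kg_k: float = 4.18) -> float:
    """Heat removed by the coolant (kW) from flow rate and supply/return temperatures."""
    mass_flow_kg_s = flow_l_per_min * density_kg_per_l / 60.0  # L/min -> kg/s
    delta_t = return_c - supply_c                               # K (same as °C difference)
    return mass_flow_kg_s * cp_kj_per_kg_k * delta_t            # kJ/s == kW


# Hypothetical CDU reading: 60 L/min, 18 °C supply, 26 °C return.
q = heat_removed_kw(60.0, 18.0, 26.0)
print(round(q, 1))  # 33.4
```

Comparing this figure with the racks' electrical load gives a rough sense of how much heat the RDHx loop is capturing and how much is left for the CRAH units.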

Wireless Water Distribution Control

Wireless remote monitoring and control of motorized ball valves in your water distribution network. Check valve status and actuate valves remotely. Receive alerts when valves open and close, or automate a valve based on other sensor inputs, such as pressure gauges or flow meters. Works with all Wireless Tunnel™ Gateways.
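Automating a valve from other sensor inputs comes down to a rule that maps readings to a desired valve state plus alerts. The sketch below is purely illustrative: the `Reading`, `ValveRule`, and `decide_valve` names, the 4.0 bar and 5 L/min thresholds, and the rule itself are assumptions, and none of it is the AKCP or Wireless Tunnel™ API.

```python
from dataclasses import dataclass

# Hypothetical sketch of rule-based valve automation; names and thresholds are
# illustrative assumptions, not a vendor API.


@dataclass
class Reading:
    pressure_bar: float
    flow_l_per_min: float


@dataclass
class ValveRule:
    max_pressure_bar: float = 4.0     # close the valve above this line pressure
    min_flow_l_per_min: float = 5.0   # alert if flow drops below this while open


def decide_valve(reading: Reading, valve_open: bool,
                 rule: ValveRule) -> tuple[bool, list[str]]:
    """Return the desired valve state and any alert messages."""
    alerts: list[str] = []
    if reading.pressure_bar > rule.max_pressure_bar:
        alerts.append(f"over-pressure: {reading.pressure_bar} bar")
        if valve_open:
            alerts.append("valve closed")  # state change also raises an alert
        return False, alerts
    if valve_open and reading.flow_l_per_min < rule.min_flow_l_per_min:
        # Low flow with the valve open may indicate a blockage or leak upstream.
        alerts.append(f"low flow: {reading.flow_l_per_min} L/min with valve open")
    return valve_open, alerts


state, msgs = decide_valve(Reading(4.6, 40.0), True, ValveRule())
print(state, msgs)
```

Keeping the decision logic as a pure function of readings and current state makes it easy to test each rule before wiring it to real actuators.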

Wireless Cabinet Thermal Map

Wireless thermal mapping of your IT cabinets. With three temperature sensors at the front and three at the rear, the thermal map monitors airflow intake and exhaust temperatures and reports the temperature differential (ΔT) between the front and rear of the cabinet. Wireless Thermal Maps work with all Wireless Tunnel™ Gateways.

Thermal Maps are integrated with AKCPro Server DCIM software in our cabinet rack map view.
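The front-to-rear ΔT from a three-front, three-rear sensor layout can be summarized in a few lines. The sketch below assumes the sensors are paired by height (for example top, middle, bottom) and reports the average intake, average exhaust, their difference, and the largest per-height ΔT. The averaging choice and the `cabinet_delta_t` name are illustrative assumptions, not how AKCPro Server computes its view.

```python
from statistics import mean


def cabinet_delta_t(front_c: list[float], rear_c: list[float]) -> dict[str, float]:
    """Summarize a cabinet thermal map in °C.

    Assumes three front (intake) and three rear (exhaust) readings paired by height.
    """
    if len(front_c) != 3 or len(rear_c) != 3:
        raise ValueError("expected 3 front and 3 rear readings")
    intake = mean(front_c)
    exhaust = mean(rear_c)
    return {
        "intake": intake,
        "exhaust": exhaust,
        "delta_t": exhaust - intake,
        # Largest front-to-rear difference at any single height.
        "max_row_delta_t": max(r - f for f, r in zip(front_c, rear_c)),
    }


# Hypothetical readings (top, middle, bottom).
print(cabinet_delta_t([22.0, 23.0, 24.0], [34.0, 35.5, 37.0]))
```

Logging this summary before and after an RDHx is installed gives a simple way to follow the earlier advice to compare rack exhaust temperatures.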

Wireless Current Meter

Three AC voltage inputs (up to 487 VAC) and four current transformer inputs (up to 50 A per phase). Monitor power with billing-grade accuracy over the Wireless Tunnel™ network, with reports and alerts. Works with all Wireless Tunnel™ Gateways.
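Per-phase voltage and current readings turn into real power and energy with basic arithmetic. The sketch below assumes a wye (star) connection with line-to-neutral voltage and a power factor available for each of three phases, and flags any phase above the 50 A CT rating. The function names and sample readings are hypothetical, and the sketch does not model how the meter itself computes or bills energy.

```python
PHASE_CT_LIMIT_A = 50.0  # per-phase CT rating quoted in the text


def three_phase_power_kw(volts_ln: list[float], amps: list[float],
                         pf: list[float]) -> float:
    """Total real power (kW): sum of V_ln * I * PF over three phases (wye assumed)."""
    if not (len(volts_ln) == len(amps) == len(pf) == 3):
        raise ValueError("expected three phases")
    return sum(v * i * p for v, i, p in zip(volts_ln, amps, pf)) / 1000.0


def over_limit_phases(amps: list[float], limit: float = PHASE_CT_LIMIT_A) -> list[int]:
    """1-based phase numbers whose current exceeds the CT limit."""
    return [n + 1 for n, i in enumerate(amps) if i > limit]


def energy_kwh(power_kw: float, hours: float) -> float:
    """Energy at constant power over an interval."""
    return power_kw * hours


# Hypothetical readings: 230 V per phase, 16/18/20 A, power factor 0.95.
p = three_phase_power_kw([230.0] * 3, [16.0, 18.0, 20.0], [0.95] * 3)
print(round(p, 3), round(energy_kwh(p, 24.0), 1))
```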

Conclusion

RDHx cooling can be a strategic piece of data center hardware. In some facilities it is an expensive solution to a minor problem, so whether it is worth the investment depends on the data center. Before considering RDHx, think about current and future needs and know what you are trying to achieve. That will determine whether RDHx cooling is right for your organization now or in the future.
