Overcool or Overheat…

“Where? It’s a big data center.”

Data centers have historically been overcooled to ensure uptime, but the trend is shifting towards more efficient cooling architectures. This article explores ASHRAE’s recommended temperature range for data centers and the benefits of optimizing temperature for energy efficiency.

Importance of Optimizing Data Center Temperature

Traditional data centers are overcooled, with the focus on uptime. This is because they have limited or poorly designed containment and little understanding of the airflows, air temperatures, and air pressures at key points in the heating and cooling cycle.

The trend has been towards more efficient cooling architectures. These typically use hot or cold aisle containment.

So what temperature should you be aiming for in your data center, and where should you measure it?

Since 2005, ASHRAE (American Society of Heating, Refrigerating and Air-Conditioning Engineers) has recommended operating anywhere between 18°C and 27°C (64.4°F – 80.6°F), with humidity between 40% and 55%. This gives a starting point, but how do we decide where within this range to operate, and where to place sensors?

Overview of ASHRAE Temperature Recommendations for Data Centers

The safest option is to aim for 18°C. However, the safest option is not the most energy efficient. Modern servers can operate at higher server inlet temperatures, such as 23°C – 24°C (73.4°F – 75.2°F). Component lifespan may be slightly reduced, but this will be more than offset by the energy saving.

Data centers can save 4-5% in energy costs for every 0.5°C (1°F) increase in server inlet temperature. Going from 18°C to 24°C (64.4°F to 75.2°F) is a rise of 10.8°F, which at 4% per 1°F could save roughly 43% of energy-related OpEx. This also reduces the data center's carbon footprint and the amount of water you need. It can also increase the capacity of your existing facility without any CapEx outlay for additional cooling equipment.
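The 43% figure can be reproduced with a few lines of arithmetic. The sketch below assumes the savings scale linearly with the temperature rise at the lower 4% per 1°F rate; that linearity is an assumption made for illustration, and the function names are hypothetical. Real savings depend on the facility and its cooling plant.

```python
def c_to_f_delta(delta_c: float) -> float:
    """Convert a temperature difference from Celsius degrees to Fahrenheit degrees."""
    return delta_c * 9.0 / 5.0


def estimated_savings_pct(start_c: float, end_c: float, pct_per_deg_f: float = 4.0) -> float:
    """Linear estimate of energy-cost savings for raising the inlet setpoint.

    Assumes a constant saving per 1°F of increase (4% by default).
    """
    delta_f = c_to_f_delta(end_c - start_c)
    return delta_f * pct_per_deg_f


savings = estimated_savings_pct(18.0, 24.0)
print(f"{savings:.1f}% estimated saving")  # prints "43.2% estimated saving"
```

Passing `pct_per_deg_f=5.0` gives the estimate for the upper end of the quoted 4-5% range.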

There is a trade-off: less margin for error. When you run your data center at an average of 18°C, a failure to properly measure server inlet temperatures is not critical. The closer you run to the upper temperature limit, the more energy you save, but the higher your risk of thermal overload.

Factors Influencing Data Center Temperature

The following factors can affect your server rack inlet temperatures and energy consumption:

- The local climate

- The number of racks, other equipment and how much heat they produce

- The size and shape of the room

- Power dissipated

- Cooling system design

If any one of these factors changes, some locations in your data center may end up operating at too high or too low a temperature.

Benefits of Optimized Data Center Temperature

The closer to the upper limit of ASHRAE recommended temperatures you operate, the more important a comprehensive monitoring system becomes.

Monitoring the temperature of your data center offers several key benefits:

- Minimizes downtime. Proper monitoring of temperatures helps avoid costly downtimes due to thermal overload.

- Detailed analysis of hotspots and cold spots. Identify problem areas before they are critical. Locate where you have stranded capacity and overcooling.

- Safely operate at the upper limits of ASHRAE recommended temperatures.
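As a rough illustration of hotspot and cold spot analysis, the sketch below classifies per-rack inlet readings against the 18°C to 27°C recommended range quoted earlier. The rack names and readings are hypothetical, and treating everything below 18°C as "overcooled" is a simplifying assumption rather than an ASHRAE definition.

```python
RECOMMENDED_MIN_C = 18.0  # lower bound of the recommended range quoted in this article
RECOMMENDED_MAX_C = 27.0  # upper bound of the recommended range quoted in this article


def classify_inlet(temp_c: float,
                   low: float = RECOMMENDED_MIN_C,
                   high: float = RECOMMENDED_MAX_C) -> str:
    """Label a rack inlet temperature relative to the recommended range."""
    if temp_c < low:
        return "overcooled"
    if temp_c > high:
        return "hotspot"
    return "ok"


# Hypothetical inlet readings in degrees Celsius, keyed by rack name.
readings = {"rack-A1": 16.5, "rack-A2": 23.8, "rack-B1": 28.1}
report = {rack: classify_inlet(temp) for rack, temp in readings.items()}
print(report)
```

Racks labelled "overcooled" are candidates for raising the setpoint, while "hotspot" racks need attention before the setpoint is raised any further.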

Example of sectional view of containment digital twin in AKCPro Server

The Role of Temperature Monitoring in the Data Center

There are many monitoring options for the data center, from a single temperature sensor in the room to detailed thermal mapping of rack inlet and outlet temperatures with ∆T calculations.

Uptime Institute recommends a minimum of three temperature sensors on at least every other rack, located at the top, middle, and bottom of the front of the rack. New technologies such as sensorCFD™ from AKCP combine live sensor data with CFD (computational fluid dynamics) for real-time analysis. This is useful in understanding airflows and temperature distribution.
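To get a feel for what the "three sensors on every other rack" guideline means for a given room, the minimum sensor count can be estimated as below. This is a back-of-the-envelope sketch: the function name is hypothetical, and rounding up for an odd number of racks is an assumption, not part of the guideline.

```python
import math


def minimum_sensor_count(rack_count: int, sensors_per_rack: int = 3) -> int:
    """Minimum inlet sensors when at least every other rack is instrumented."""
    if rack_count < 0:
        raise ValueError("rack_count must be non-negative")
    # Assumption: with an odd number of racks, round the monitored count up.
    monitored_racks = math.ceil(rack_count / 2)
    return monitored_racks * sensors_per_rack


print(minimum_sensor_count(40))  # prints 60
print(minimum_sensor_count(41))  # prints 63
```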

Here are some important features to look for when selecting a monitoring system for your data center.

- 24/7 remote monitoring: Monitor your data center’s temperature in real time.

- Automated alerts: Let you know when temperatures in the data center are outside of recommended ranges, so you can take action before issues arise.

- Data logging capabilities: Store your data on a regular basis to spot long-term temperature trends and make adjustments.

- Integration with DCIM platforms via SNMP or cloud platforms with MQTT.
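The automated alerting feature can be pictured as a simple threshold check. The sketch below is one possible approach, not how any particular product works: it raises an alert only after several consecutive readings exceed the limit, so that a single noisy sample does not trigger a page. The class name, the 27°C limit, and the three-reading window are all assumptions for illustration.

```python
from collections import deque


class InletAlert:
    """Raise an alert after N consecutive readings above a high limit."""

    def __init__(self, high_c: float = 27.0, consecutive: int = 3) -> None:
        self.high_c = high_c
        # Rolling window of booleans: True when a reading exceeded the limit.
        self.recent = deque(maxlen=consecutive)

    def update(self, temp_c: float) -> bool:
        """Record a reading and return True if the alert condition is met."""
        self.recent.append(temp_c > self.high_c)
        window_full = len(self.recent) == self.recent.maxlen
        return window_full and all(self.recent)


alert = InletAlert()
samples = [26.5, 27.4, 27.6, 27.9, 26.8]  # hypothetical inlet readings in °C
states = [alert.update(temp) for temp in samples]
print(states)  # prints [False, False, False, True, False]
```

In a real deployment the readings would arrive from the monitoring platform (for example over SNMP or MQTT) rather than from a hard-coded list.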

By using these features, data center professionals can rest assured that their facilities are running at peak efficiency and with maximum safety.

Implementing DCIM Monitoring Software

To ensure your data center runs at optimal conditions, you’ll need to invest in monitoring software. This will allow you to maintain more accurate readings and adjust the temperature as needed.

With the right monitoring software, you can:

- Monitor temperature conditions in real time and receive alerts when specific thresholds are crossed

- Accurately record and capture temperature fluctuations for future reference or analysis

- Identify changes in temperature so you can take action before a critical situation arises

- Optimize cooling system performance by preventing system overloading

- Save on energy costs by avoiding unnecessary cooling system operation
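One way to identify changes in temperature before they become critical is to fit a trend line to logged readings. The sketch below computes an ordinary least-squares slope in °C per hour; the function name and sample data are hypothetical, and a real system would likely work on far more samples.

```python
def trend_c_per_hour(samples: list[tuple[float, float]]) -> float:
    """Least-squares slope of (hours, temp_c) samples, in °C per hour."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    numerator = sum((t - mean_t) * (y - mean_y) for t, y in samples)
    denominator = sum((t - mean_t) ** 2 for t, _ in samples)
    if denominator == 0:
        raise ValueError("samples must span more than one point in time")
    return numerator / denominator


# Hypothetical log: (hours since start, inlet temperature in °C).
log = [(0.0, 22.0), (1.0, 22.5), (2.0, 23.0), (3.0, 23.5)]
print(f"{trend_c_per_hour(log):.2f} °C/hour")  # prints "0.50 °C/hour"
```

A sustained positive slope on a rack that is already close to the upper limit is a reason to act before any alert threshold is crossed.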

ASHRAE recommends implementing a comprehensive, integrated monitoring system. This can automate control of cooling systems and help maximize efficiency. It should generate detailed reports of past events and provide near real-time data on current conditions. With this information operators can make informed decisions.

Case Study

A top healthcare provider in the USA recently implemented thermal map sensors from AKCP on every rack in their data center. The sensors are monitored through Sunbird DCIM via SNMP. Sunbird provides data analytics, alarming, and visualization tools to show hot and cold spots in the data center. This has given the data center insight into where it is wasting energy through overcooling, and has allowed it to release stranded capacity by increasing server loads and to safely manage hotspots when operating at elevated temperatures.

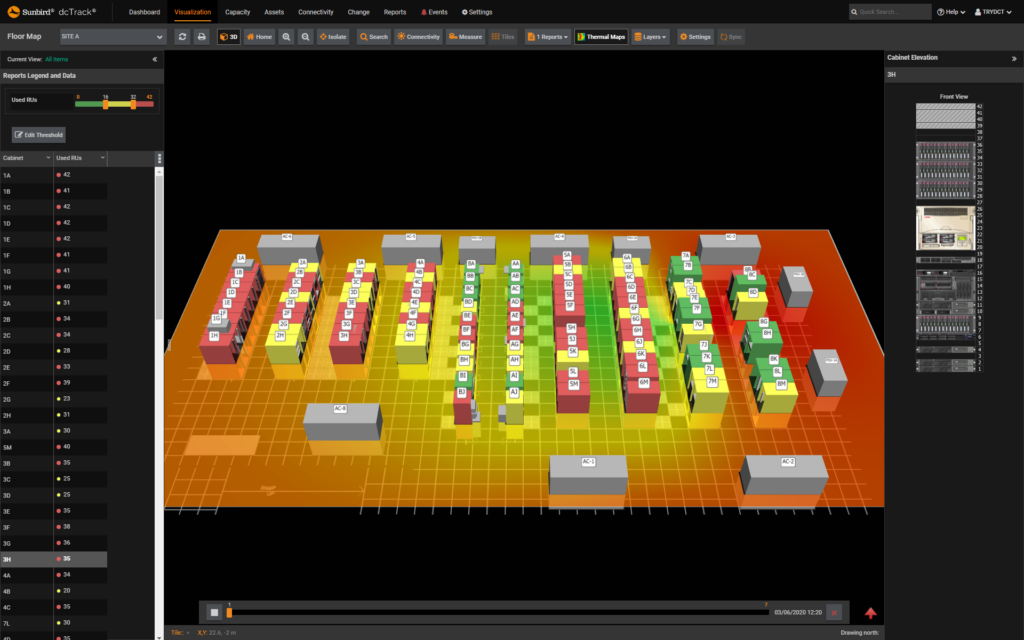

Example of thermal maps displayed in Sunbird DCIM

Thermal map sensors consist of three temperature sensors at the front and three at the rear of every rack, giving you measurements of rack inlet, outlet, and ∆T. DCIM software from Sunbird uses this sensor data to create heatmaps of the data center.
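The ∆T calculation itself is straightforward. The sketch below takes three front (inlet) and three rear (outlet) readings for one rack and returns both the per-height differences and the difference of the averages. The function name and readings are hypothetical, and averaging the three sensors is an assumption about how a rack-level ∆T might be summarized, not a description of what AKCP or Sunbird do.

```python
from statistics import mean


def rack_delta_t(inlet_c: list[float], outlet_c: list[float]) -> dict:
    """Compute per-height and average ∆T (outlet minus inlet) for one rack."""
    if len(inlet_c) != len(outlet_c):
        raise ValueError("inlet and outlet must have the same number of sensors")
    per_height = [out - inp for inp, out in zip(inlet_c, outlet_c)]
    return {
        "inlet_avg": mean(inlet_c),
        "outlet_avg": mean(outlet_c),
        "per_height": per_height,
        "delta_t": mean(outlet_c) - mean(inlet_c),
    }


# Hypothetical readings in °C, ordered bottom, middle, top.
front = [21.0, 22.0, 23.0]
rear = [33.0, 34.0, 35.0]
result = rack_delta_t(front, rear)
print(result["delta_t"])  # prints 12.0
```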

The healthcare provider discovered that they were overcooling servers throughout their data center and could safely increase their operating temperature without creating hotspots. They successfully reduced their energy use by 18% while maintaining safe operating conditions for their servers. Based on the results achieved, they are preparing to roll out the same setup at their second data center facility.