Data centers and their HVAC systems are mission-critical, energy-intensive infrastructures that run 24 hours a day, seven days a week. They provide computing services that are critical to the everyday operations of the world’s leading economic, scientific, and technological institutions. Data centers are expected to account for about 3% of total global electricity consumption, with that consumption growing at 4.4% per year. This has a huge economic, environmental, and performance impact, making cooling-system energy efficiency one of the top priorities for data center designers, ahead of traditional concerns such as availability and security.

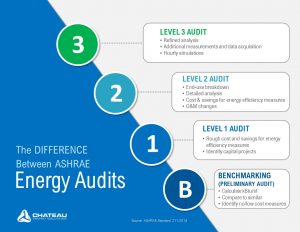

The Right Standards To Follow

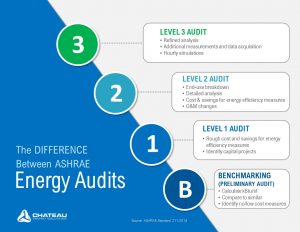

Until recently this was difficult, because industry standards for evaluating the energy efficiency of data centers and server facilities were inconsistent. In 2010, power usage effectiveness (PUE) was established as the guiding metric for data center HVAC energy efficiency. However, it served as a performance metric rather than a design standard and did not address important design elements, so the problem persisted.
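For reference, PUE is the ratio of total facility energy to the energy delivered to IT equipment, so an ideal facility scores 1.0. The minimal Python sketch below computes it from metered annual totals; the kWh figures are illustrative assumptions, not data from any real site. Because it needs metered consumption, PUE describes how a facility performs rather than how it should be designed.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness = total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("facility energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


# Illustrative annual totals (not real measurements).
it_kwh = 1_000_000        # servers, storage, network
facility_kwh = 1_500_000  # IT plus cooling, power distribution, lighting, ...
print(f"PUE = {pue(facility_kwh, it_kwh):.2f}")  # PUE = 1.50
```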

New Energy Efficiency Standard ASHRAE 90.4

Photo Credit: chateaues.com

As a result, the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE), one of the key organizations responsible for standards across many areas of building design, decided to create a new standard that would be more practical for the data center industry. ASHRAE Standard 90.4 was in development for several years and was published in September 2016, giving the data center community a much-needed standard. According to ASHRAE, this data center HVAC standard “establishes the minimum energy efficiency standards of data centers for design and construction, the formulation of an operation and maintenance plan, and the exploitation of on-site or off-site renewable energy resources,” among other things.

The new ASHRAE 90.4 standard includes provisions for data center design, construction, operation, and maintenance. It specifically addresses the energy requirements that set data centers apart from ordinary buildings, incorporating the larger loads and risks associated with data center operation. Unlike the PUE metric, ASHRAE 90.4 calculations are based on design components: organizations must calculate efficiencies and losses for multiple system components and combine them into a single figure that equals or falls below the published maximum for each climate zone.
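The component-based approach can be sketched as: sum the design-case overheads, normalize by IT energy, and compare against a climate-zone maximum. This is a simplified illustration, not the standard’s method. ASHRAE 90.4 itself defines a mechanical load component (MLC) and an electrical loss component (ELC) with their own calculation rules. The `DesignCase` fields, the energy figures, and every limit in `MAX_COMBINED_BY_ZONE` are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass
class DesignCase:
    """Annual design-case energy in kWh (hypothetical inputs)."""
    it_kwh: float               # energy delivered to IT equipment
    mechanical_kwh: float       # cooling/HVAC energy: fans, pumps, chillers, ...
    electrical_loss_kwh: float  # UPS, transformer and distribution losses


# Placeholder limits per ASHRAE climate zone. These are NOT the values
# published in ASHRAE 90.4; substitute the figures from the standard.
MAX_COMBINED_BY_ZONE = {"1A": 0.45, "4A": 0.40, "5B": 0.35}


def combined_component(case: DesignCase) -> float:
    """Mechanical energy plus electrical losses per unit of IT energy."""
    return (case.mechanical_kwh + case.electrical_loss_kwh) / case.it_kwh


def complies(case: DesignCase, zone: str) -> bool:
    """True if the combined figure is at or below the zone's (placeholder) maximum."""
    return combined_component(case) <= MAX_COMBINED_BY_ZONE[zone]


design = DesignCase(it_kwh=8_760_000, mechanical_kwh=2_190_000,
                    electrical_loss_kwh=700_800)
print(round(combined_component(design), 3))  # 0.33
print({zone: complies(design, zone) for zone in MAX_COMBINED_BY_ZONE})
```

Unlike PUE, every input here comes from the design rather than from meter readings, which is what lets a figure like this be checked before the facility is built.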

Data Center Efficient Design For Energy Consumption

It might be difficult to design a new data center facility or make changes to an existing one to increase cooling efficiency. The following are some design techniques for improving a data center’s energy efficiency:

- Choosing data center locations based on environmental conditions.
- Considering how infrastructure topology influences design decisions.
- Adopting the most effective cooling strategies.

An important opportunity for the HVAC design engineer to reduce energy consumption is to improve the data center cooling system layout. Designers and engineers use a variety of cooling solutions to save energy, including:

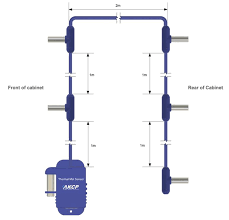

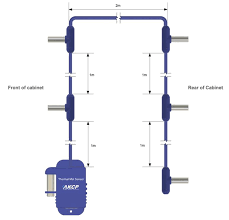

In a hot aisle/cold aisle layout, cold air is supplied to the front (intake side) of the server racks, while hot exhaust air is directed out the back. The primary purpose is to control airflow so that less energy and money are spent on cooling. The accompanying graphic shows the airflow in a data center’s cold and hot aisles.
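Airflow control matters because the volume of air a cooling unit must move depends on the heat load and on the temperature difference between supply and return air. When hot exhaust mixes back into the cold aisle, that difference shrinks and fans must move more air. The sketch below applies the sensible-heat balance with approximate air properties (density 1.2 kg/m³, specific heat 1005 J/kg·K, both assumptions). The 10 kW rack and the 12 K and 6 K temperature rises are illustrative.

```python
AIR_DENSITY = 1.2           # kg/m^3, approximate at typical room conditions
AIR_SPECIFIC_HEAT = 1005.0  # J/(kg*K), approximate for dry air
M3S_TO_CFM = 2118.88        # 1 m^3/s expressed in cubic feet per minute


def required_airflow_m3s(heat_load_w: float, delta_t_k: float) -> float:
    """Airflow needed to carry heat_load_w with a supply-to-return rise of delta_t_k.

    Sensible-heat balance: Q = rho * V * cp * dT  ->  V = Q / (rho * cp * dT)
    """
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_load_w / (AIR_DENSITY * AIR_SPECIFIC_HEAT * delta_t_k)


# A 10 kW rack: well-separated aisles (12 K rise) vs. mixed air (6 K rise).
for dt in (12.0, 6.0):
    flow = required_airflow_m3s(10_000, dt)
    print(f"dT={dt:>4} K -> {flow:.2f} m^3/s ({flow * M3S_TO_CFM:.0f} CFM)")
```

Halving the temperature difference doubles the required airflow, which is why keeping hot and cold air apart saves fan energy.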

Containment goes a step further, keeping cold and hot air physically separated within the room and removing hot air from the cabinets. The accompanying diagram shows cold- and hot-aisle containment airflow in detail.

Liquid cooling systems take a different approach to removing heat. Instead of relying only on room air conditioning, they bring chilled water or refrigerant close to the heat source, where the liquid absorbs heat far more effectively than air.
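Liquid is more effective because water carries far more heat per unit volume than air. The sketch below uses the heat balance Q = ṁ · cp · ΔT with approximate water properties (specific heat 4186 J/kg·K, density 997 kg/m³, both assumptions) to estimate the coolant flow for an illustrative 30 kW rack.

```python
WATER_SPECIFIC_HEAT = 4186.0  # J/(kg*K), approximate
WATER_DENSITY = 997.0         # kg/m^3, approximate near 25 °C


def water_flow_lpm(heat_load_w: float, delta_t_k: float) -> float:
    """Litres per minute of water needed to absorb heat_load_w with a delta_t_k rise.

    Q = m_dot * cp * dT  ->  m_dot = Q / (cp * dT), then convert kg/s to L/min.
    """
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    mass_flow_kg_s = heat_load_w / (WATER_SPECIFIC_HEAT * delta_t_k)
    return mass_flow_kg_s / WATER_DENSITY * 1000.0 * 60.0


# Illustrative 30 kW high-density rack with a 10 K coolant temperature rise.
print(f"{water_flow_lpm(30_000, 10.0):.1f} L/min")  # 43.1 L/min
```

For comparison, carrying the same 30 kW with air at the same 10 K rise would take roughly 2.5 m³/s of airflow (using approximate air properties). That is more than 3,000 times the volume of water.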

Green (free) cooling is one of the sustainable technologies used in data centers. In its simplest form, outside air is admitted through openings fitted with filters and louvers, so that natural cooling can replace some or all mechanical cooling when outdoor conditions allow. This method can save a significant amount of energy and money.

The Capacity of High-Density IT Systems

Photo Credit: journal.uptimeinstitute.com

ASHRAE’s climatic recommendations for data centers have been expanded to address corrosion and high-density servers.

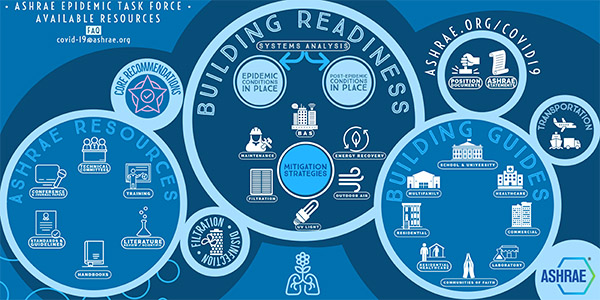

In 2021, ASHRAE Technical Committee 9.9 published an update to its Thermal Guidelines for Data Processing Environments. The update recommends significant changes to data center thermal operating envelopes, including treating pollutants as a factor and introducing a new class for high-density IT equipment. In some situations, the new guidance may lead operators to adjust not only operating methods but also set points. That could affect both energy efficiency and contractual service level agreements (SLAs) with data center service providers.
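To see what a narrower envelope means in practice, the sketch below checks an inlet temperature against a class’s recommended range. The ranges are the values commonly cited for the 2021 guidelines: 18–27 °C for the general-purpose classes and 18–22 °C for the new H1 class. Treat them as assumptions and verify them against the current edition before relying on them.

```python
# Recommended inlet dry-bulb ranges in °C as commonly cited for the 2021
# Thermal Guidelines; verify against the current edition before use.
RECOMMENDED_INLET_C = {
    "A1-A4": (18.0, 27.0),  # general-purpose air-cooled classes
    "H1": (18.0, 22.0),     # new high-density class
}


def within_recommended(inlet_c: float, equipment_class: str) -> bool:
    """True if inlet_c lies inside the class's recommended range (inclusive)."""
    low, high = RECOMMENDED_INLET_C[equipment_class]
    return low <= inlet_c <= high


for inlet in (20.0, 24.0):
    print(inlet, {cls: within_recommended(inlet, cls) for cls in RECOMMENDED_INLET_C})
```

A 24 °C inlet that is comfortably within the general-purpose range falls outside the recommended range for H1 equipment.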

Many data center operators, however, do not check for corrosion regularly because they do not measure gaseous pollutant levels. Even if temperature and RH are within target ranges, failure rates may be higher than expected if strong corrosion catalysts are present but undetected. Worse, lowering the supply air temperature to prevent failures may actually make them more likely: cooling air without removing moisture raises its relative humidity, which accelerates corrosion. If operators do not take reactivity coupon measurements, ASHRAE recommends a 50% RH limit. Surprisingly, it also allows operators to follow the criteria in its earlier update, which specifies a 60% RH limit.
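The effect of lowering the supply temperature can be quantified with a commonly used form of the Magnus approximation (coefficients 17.62 and 243.12 °C). This is a general psychrometric approximation, not a formula taken from ASHRAE’s guidelines. The 12 °C dew point and the two supply temperatures are illustrative assumptions.

```python
import math

# Magnus approximation coefficients over water.
MAGNUS_A = 17.62
MAGNUS_B = 243.12  # °C


def _gamma(temp_c: float) -> float:
    return MAGNUS_A * temp_c / (MAGNUS_B + temp_c)


def relative_humidity(temp_c: float, dew_point_c: float) -> float:
    """RH in percent for air at temp_c whose moisture content gives dew_point_c."""
    return 100.0 * math.exp(_gamma(dew_point_c) - _gamma(temp_c))


# Same moisture content (12 °C dew point), two supply temperatures.
for supply_c in (24.0, 20.0):
    print(f"{supply_c} °C supply -> {relative_humidity(supply_c, 12.0):.0f}% RH")
```

With the moisture content unchanged, dropping the supply air from 24 °C to 20 °C raises RH from about 47% to about 60%. That moves it past a 50% limit.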

ASHRAE For New Data Center Model

Photo Credit: www.datacenterdynamics.com

According to ASHRAE, the higher-performance heat sinks and fans needed to keep components within temperature limits across the generic recommended envelope simply do not fit in some dense systems. ASHRAE does not define what qualifies as a class H1 (high-density) system; the IT vendor must specify it.

These new envelopes have some potentially far-reaching effects. Over the last decade, operators have built and equipped a substantial number of buildings based on ASHRAE’s earlier recommendations. Many of these relatively new data centers use less mechanical cooling and more economization to take advantage of the suggested temperature bands. By combining the use of evaporative and adiabatic air handlers with improved air-flow design and operational discipline in many locations — including Dublin, London, and Seattle — it is even possible for operators to fully eliminate mechanical cooling while staying within ASHRAE criteria.
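These sites are exposed because a lower recommended upper limit shrinks the number of hours in which outdoor air can do the cooling on its own. The deliberately simplified sketch below counts such hours from a list of outdoor dry-bulb temperatures. It ignores evaporative and adiabatic assistance. The 2 K approach allowance, the sample temperatures, and the 27 °C and 22 °C upper limits are all assumptions for illustration.

```python
from collections.abc import Iterable


def economizer_hours(outdoor_temps_c: Iterable[float], supply_limit_c: float,
                     approach_k: float = 2.0) -> int:
    """Count hours in which outdoor air alone could meet the supply limit.

    approach_k is a hypothetical allowance for heat picked up between the
    outdoor intake and the server inlet (fans, dampers, mixing).
    Dry-bulb only: evaporative/adiabatic assistance is ignored.
    """
    threshold = supply_limit_c - approach_k
    return sum(1 for t in outdoor_temps_c if t <= threshold)


# Illustrative hourly outdoor dry-bulb readings in °C (not real weather data).
sample_hours = [10.0, 15.0, 19.0, 21.0, 24.0, 26.0]
print("A1-A4 limit 27 °C:", economizer_hours(sample_hours, 27.0))  # 5
print("H1 limit 22 °C:", economizer_hours(sample_hours, 22.0))     # 3
```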

The Uptime Institute will be watching how colocation providers respond to this challenge, given that their standard SLAs are largely based on ASHRAE’s thermal guidelines. Because semiconductors grow more powerful with each generation, what is considered unusual now may become routine in the not-too-distant future. As a workaround, facility managers could use direct liquid cooling for high-density IT.

AKCP Monitoring The ASHRAE Standard

AKCP Thermal Map Sensor

For monitoring data centers and server rooms, ASHRAE recommends three sensors per rack. They should be mounted at the top, middle, and bottom of the rack to monitor the surrounding temperature more effectively.
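One way to use the three readings per rack is sketched below. It averages them, reports top-to-bottom stratification, and lists any position above an inlet limit. The function name, the dictionary layout, and the 27 °C default limit (the upper end of the commonly cited recommended range for general-purpose equipment) are assumptions for illustration, not part of any AKCP or ASHRAE interface.

```python
from statistics import mean

POSITIONS = ("top", "middle", "bottom")


def rack_inlet_summary(readings_c: dict[str, float], max_inlet_c: float = 27.0) -> dict:
    """Summarise one rack's three inlet sensors.

    readings_c maps "top", "middle" and "bottom" to temperatures in °C.
    max_inlet_c is an assumed limit; adjust it to your equipment class.
    """
    missing = [p for p in POSITIONS if p not in readings_c]
    if missing:
        raise KeyError(f"missing sensor positions: {missing}")
    values = [readings_c[p] for p in POSITIONS]
    return {
        "average_c": mean(values),
        # Large positive values suggest hot air pooling near the top of the rack.
        "stratification_k": readings_c["top"] - readings_c["bottom"],
        "over_limit": [p for p in POSITIONS if readings_c[p] > max_inlet_c],
    }


print(rack_inlet_summary({"top": 28.0, "middle": 24.5, "bottom": 21.5}))
```

A rack that looks fine on average can still have one position over the limit, which is why a single mid-height sensor can miss problems.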

For hot or cold aisles, a sensor at the back of the cabinet can provide valuable insights, and its readings can help determine the most effective airflow containment strategy for each aisle. Finally, managers who want to further reduce downtime and improve efficiency should also track rack exhaust temperatures, server temperatures, and internal temperatures. These readings help response personnel address issues in real time, before they cause a costly outage.
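Pairing a front (intake) reading with a rear (exhaust) reading gives the temperature rise across each cabinet. The sketch below lists cabinets whose rise exceeds a threshold so they can be reviewed for airflow or containment changes. The 15 K default and the cabinet names and readings are hypothetical, not ASHRAE figures.

```python
def rack_delta_t(front_c: float, rear_c: float) -> float:
    """Temperature rise across a cabinet: rear (exhaust) minus front (intake)."""
    return rear_c - front_c


def cabinets_to_review(cabinets: dict[str, tuple[float, float]],
                       max_delta_k: float = 15.0) -> list[str]:
    """Names of cabinets whose front-to-rear rise exceeds max_delta_k.

    cabinets maps a cabinet name to (front_c, rear_c). The 15 K default is a
    hypothetical threshold; tune it to the installed equipment.
    """
    return sorted(name for name, (front, rear) in cabinets.items()
                  if rack_delta_t(front, rear) > max_delta_k)


readings = {"A01": (22.0, 34.0), "A02": (23.0, 41.0), "B01": (21.5, 30.0)}
print(cabinets_to_review(readings))  # ['A02']
```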

As part of ASHRAE best practices, data center managers should also monitor humidity. Just as high temperatures can increase the risk of downtime, high humidity can increase condensation and with it the risk of electrical shorts. Conversely, when humidity drops too low, data centers are more likely to experience electrostatic discharge (ESD). To mitigate these issues, ASHRAE best practices state that managers should avoid uncontrolled temperature swings that push humidity outside safe levels. Humidity within server rooms and across the data center should stay between 40% and 60% relative humidity (RH). Staying within this range helps prevent ESD, lowers the risk of corrosion, and prolongs equipment life.
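The 40–60% RH band translates directly into a simple check for incoming sensor readings. The function name and the status strings below are illustrative assumptions, not part of any monitoring product’s API.

```python
def humidity_status(rh_percent: float, low: float = 40.0, high: float = 60.0) -> str:
    """Classify a relative-humidity reading against the 40-60% RH band."""
    if not 0.0 <= rh_percent <= 100.0:
        raise ValueError("relative humidity must be between 0 and 100%")
    if rh_percent < low:
        return "low: elevated ESD risk"
    if rh_percent > high:
        return "high: condensation and corrosion risk"
    return "ok"


for reading in (35.0, 48.0, 65.0):
    print(reading, humidity_status(reading))
```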

AKCP Thermal Map Sensor

Thermal Maps are easy to install, come pre-wired, and are ready to mount with magnets, cable ties, or ultra-high-bond adhesive tape to hold them in position on your cabinet. Mount the sensors on the front and rear doors of a perforated cabinet so they are exposed directly to the airflow into and out of the rack. On sealed cabinets, they can be mounted on the inside and still monitor the temperature differential between front and rear, confirming that airflow is distributed across the cabinet.

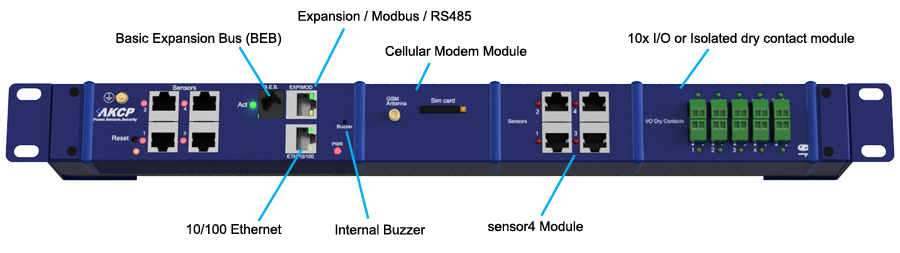

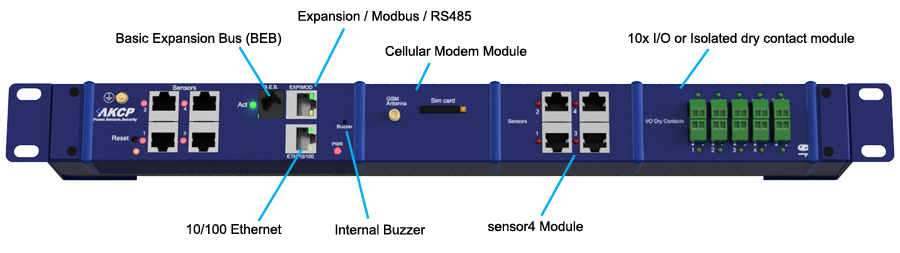

AKCP SensorProbeX+

Monitor up to 16 cabinets from a single IP address. With a 16-port SPX+, you use only 1U of rack space and save costs by needing only one base unit. Thermal Map sensors can be extended up to 15 meters from the SPX+.
