A data center is a large, energy-hungry facility, and one of its biggest energy consumers is the HVAC system, which handles ventilation and cooling. Data centers provide computing functions that are vital to the daily operations of business, economic, scientific, and technological organizations around the world. These facilities are estimated to consume around 3% of total worldwide energy use, and that consumption is growing by about 4.4% a year. This is why implementing energy-efficient equipment and strategies is a priority in the industry. In this article, we go through the requirements set by ASHRAE 90.4 for an energy-efficient data center.

Which Standards to Follow?

For a long time, the industry had no standards to follow, which made it challenging for engineers to design energy-efficient data center facilities. There were some attempts to establish one, and Power Usage Effectiveness (PUE) was introduced. However, PUE never addressed physical design issues and ended up as a performance metric rather than a design standard.

ASHRAE 90.4: The Data Center Energy Efficiency Standard

This is why the American Society of Heating, Refrigerating, and Air-Conditioning Engineers (ASHRAE) developed a practical standard for all data centers to follow. ASHRAE is the organization responsible for developing guidelines in various aspects of building design.

ASHRAE 90.4 was published in September 2016 after several years in development, bringing a much-needed standard to the data center community. According to the organization, it establishes minimum energy efficiency requirements for data centers, covering design, construction methods, and the use of renewable energy sources.

ASHRAE 90.4 addresses the unique energy requirements of data centers while also accounting for the more critical aspects and risks of data center operations. Unlike the PUE energy efficiency metric, ASHRAE 90.4 calculations are based on the components of the design. Operators or organizations have to calculate the energy efficiency and losses of the different design elements and add them up. The total must be equal to or less than the maximum figure for the facility's climate zone.
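
As a rough illustration of the component-based check described above, the sketch below adds up one figure per design element and compares the total with a climate-zone maximum. The element names, the numbers, and the zone limits are all hypothetical placeholders, not values from the standard; the real figures come from the tables in ASHRAE 90.4 itself.

```python
# Hypothetical sketch of a component-based compliance check.
# All names and numbers are illustrative placeholders, NOT values from ASHRAE 90.4.

# Assumed maximum allowed totals per climate zone (placeholders).
ZONE_MAXIMUM = {"zone_a": 0.40, "zone_b": 0.30}


def total_design_value(components):
    """Add up the calculated efficiency/loss figure of every design element."""
    return sum(components.values())


def complies(components, climate_zone):
    """Return True when the total is equal to or less than the zone maximum."""
    return total_design_value(components) <= ZONE_MAXIMUM[climate_zone]


# Hypothetical design: one calculated figure per element of the design.
design = {"cooling": 0.20, "fans": 0.05, "electrical_losses": 0.10}

for zone in ZONE_MAXIMUM:
    status = "complies" if complies(design, zone) else "does not comply"
    print(f"{zone}: total {total_design_value(design):.2f} {status}")
```

The same design can pass in one zone and fail in another, which is the point of tying the limit to location.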

Difference Between ASHRAE 90.4 and PUE

Power usage effectiveness (PUE) was introduced as the primary metric for the data center industry and became an ISO standard in 2016. ASHRAE 90.1, meanwhile, is incorporated into many state and local building codes in the US. Because some of its technical provisions were a poor fit for data centers, 90.4 was introduced as a dedicated data center energy standard.

PUE does not set design requirements for energy use. Instead, it tends to be used as a reference in colocation SLA contracts regarding performance or energy costs. ASHRAE 90.4, on the other hand, is a design standard to be used when submitting plans for approval before constructing a data center facility. It also applies to facility capacity upgrades of 10% or more.

One of the biggest differences between PUE and ASHRAE 90.4 is how data is measured and energy is calculated. The 90.4 standard is more complicated than the PUE metric. The downside of PUE is that it has no geographic adjustment factor. Cooling system energy often accounts for a significant percentage of a facility's energy use, so two identically constructed data centers would show different PUE values if they were in different geographic locations.
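
To make the geographic effect concrete, here is a minimal sketch using the usual definition of PUE (total facility energy divided by IT equipment energy). The annual energy figures are made up for illustration; the two sites differ only in how much cooling energy they need.

```python
def pue(it_energy_kwh, cooling_energy_kwh, other_overhead_kwh):
    """PUE = total facility energy / IT equipment energy."""
    if it_energy_kwh <= 0:
        raise ValueError("IT energy must be positive")
    total = it_energy_kwh + cooling_energy_kwh + other_overhead_kwh
    return total / it_energy_kwh


# Hypothetical annual figures for two identically built sites.
# Only the cooling energy differs, standing in for a cooler vs. hotter climate.
cool_site = pue(it_energy_kwh=1_000_000, cooling_energy_kwh=250_000, other_overhead_kwh=100_000)
hot_site = pue(it_energy_kwh=1_000_000, cooling_energy_kwh=450_000, other_overhead_kwh=100_000)

print(f"cool climate PUE: {cool_site:.2f}")
print(f"hot climate PUE:  {hot_site:.2f}")
```

Nothing in the PUE figure itself tells you that the gap comes from climate rather than from design quality.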

Location is also a factor in cooling system energy compliance, which 90.4 expresses through the Mechanical Load Component (MLC). Each US climate zone defined in ASHRAE 169 has its own maximum annualized MLC compliance factor.

How Computational Fluid Dynamics Can Help You Comply with ASHRAE 90.4

Improving Data Center Cooling Systems with CFD

CFD helps a data center model its cooling airflow along the whole path: out of the CRAC or CRAH units and into the raised floor, through ventilated floor tiles into the cold aisle, into the IT cabinets, out of the cabinets into the hot aisle, and back to the cooling source as heated air.

CFD is also used to predict hot spots in the room, especially where the supply of cooling air is inadequate. It is also useful for deciding where new equipment is best installed.

Even a small change in the cooling system design or floor layout can change efficiency, which may later cause hot spots or alter the infrastructure the design requires. Computational fluid dynamics (CFD), used in line with ASHRAE data center standards, is a method for evaluating new designs or alterations before implementation.

Strategies to Reduce Data Center Energy Consumption

Designing an energy-efficient data center, or changing the layout of an existing facility to get the most from its cooling system, is a challenge. Here are some guidelines and strategies to reduce the energy consumption of a data center:

- Air conditioners and air handlers

The most common types of cooling equipment are air conditioner (AC) and computer room air handler (CRAH) units, which blow cold air in the required direction to displace hot air from the surrounding area.

- Hot aisle/cold aisle

The cold air is passed to the front of the server racks and the hot air comes out of the rear side of the racks. The main goal here is to manage the airflow in order to conserve energy and reduce cooling costs.

- Hot aisle/cold aisle containment

Containment of the hot/cold aisles is done mainly to separate the cold and hot air within the room and remove hot air from cabinets.

- Liquid cooling

Liquid cooling systems provide an alternative way to dissipate heat from the system. This approach places cooling units that use refrigerant or chilled water close to the heat source.

- Green cooling

Green cooling, or free cooling, is one of the sustainable technologies used in data centers. In its simplest form, this means opening a data center window fitted with filters and louvers so that outside air cools the room. This approach can save a substantial amount of money and energy.

Data Center Efficiency Checklist

A well-planned or retrofitted data center can readily achieve energy efficiency. The following measures can help a data center save energy:

- Reduced IT loads via consolidation and virtualization

- Hot aisle and cold aisle implementation via blanking panels, curtaining, equipment configuration, and cable entrance/exit ports

- Installation of an economizer (air or water) and evaporative cooling (direct or indirect)

- Indirect liquid cooling systems, centralizing of the chiller plant, moving chilled water close to the server, and raising chilled water set-points, for roughly a 1.4% reduction in chiller energy use per degree the set-point is raised

- Reuse of data center waste heat

- Installation of a reliable monitoring system to verify that environmental conditions stay within the standards
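
To get a feel for the chilled water set-point item in the checklist, the sketch below applies the 1.4% per degree figure to a hypothetical chiller. It assumes the saving scales linearly with each degree of set-point increase, which is only a reasonable approximation for small changes, and it leaves the temperature unit unspecified because the checklist does not state it. The baseline energy figure is invented for illustration.

```python
SAVINGS_PER_DEGREE = 0.014  # 1.4% per degree, the figure quoted in the checklist


def chiller_energy_after_raise(baseline_kwh, degrees_raised):
    """Estimate chiller energy after raising the set-point.

    Assumes savings scale linearly with the set-point increase (an assumption,
    not a property guaranteed for any particular chiller).
    """
    if degrees_raised < 0:
        raise ValueError("degrees_raised must be zero or positive")
    saving_fraction = min(SAVINGS_PER_DEGREE * degrees_raised, 1.0)
    return baseline_kwh * (1.0 - saving_fraction)


baseline = 500_000  # hypothetical annual chiller energy in kWh
estimate = chiller_energy_after_raise(baseline, degrees_raised=5)
print(f"estimated saving: {baseline - estimate:,.0f} kWh per year")
```

Any real set-point change should still be checked against server inlet temperatures, which is where the monitoring item in the checklist comes in.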

AKCP Monitoring Solutions

AKCP THERMAL MAP SENSORS

For monitoring data centers and server rooms, ASHRAE recommends three sensors per rack, mounted at the top, middle, and bottom of the rack, to monitor the surrounding temperature levels more effectively.

In hot or cold aisle layouts, a sensor at the back of the cabinet can provide valuable insight and help determine the most effective airflow containment strategy for each aisle. Finally, managers who want to further reduce downtime and improve efficiency should also track rack cabinet exhaust metrics, server temperatures, and internal temperatures. These readings can help response personnel address issues in real time, before they cause a costly outage.

As part of ASHRAE best practices, data center managers should also monitor humidity levels. Just as high temperatures can increase the risk of downtime, high humidity increases condensation and with it the risk of electrical shorts. Conversely, when humidity levels drop too low, data centers are more likely to experience Electrostatic Discharge (ESD). To mitigate these issues, ASHRAE best practices state that managers should avoid uncontrolled temperature increases that lead to excessive humidity levels. Humidity levels within server rooms and across the data center should stay between 40 percent and 60 percent Relative Humidity (RH). This range helps to prevent ESD, lower the risk of corrosion, and prolong the life expectancy of equipment.
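
The 40 to 60 percent RH range above translates directly into a simple alert rule. The sketch below is a generic illustration with made-up rack names and readings; it does not use any AKCP API, and a real monitoring system would supply the readings.

```python
RH_MIN, RH_MAX = 40.0, 60.0  # percent RH, the range given in the text


def classify_humidity(rh_percent):
    """Classify one relative humidity reading against the 40-60% RH range."""
    if rh_percent < RH_MIN:
        return "low: ESD risk"
    if rh_percent > RH_MAX:
        return "high: condensation risk"
    return "ok"


# Hypothetical readings, one per rack.
readings = {"rack-01": 35.0, "rack-02": 48.5, "rack-03": 63.2}

# Keep only the racks that fall outside the range.
alerts = {
    rack: classify_humidity(rh)
    for rack, rh in readings.items()
    if classify_humidity(rh) != "ok"
}

for rack, message in alerts.items():
    print(f"{rack}: {message}")
```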

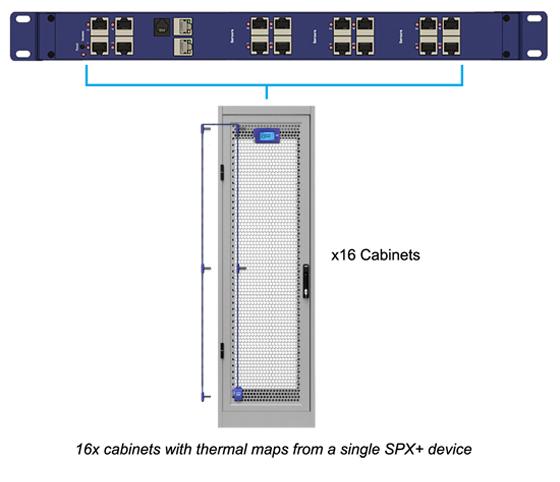

SPX+ Base unit for Thermal Map Sensors

AKCP Thermal Map Sensor

Thermal Maps are easy to install. They come pre-wired and ready to mount, with magnets, cable ties, or ultra-high bond adhesive tape to hold them in position on your cabinet. Mount the sensors on the front and rear doors of your perforated cabinet so they are exposed directly to the airflow in and out of the rack. On sealed cabinets, they can be mounted on the inside and still monitor the temperature differential between front and rear, helping to ensure that airflow is distributed across the cabinet.
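
With sensors at the top, middle, and bottom of both the front and rear of a cabinet, the front-to-rear temperature differential can be worked out per height. The sketch below shows the arithmetic on invented readings in degrees Celsius; the data layout is an assumption for illustration and is not the format produced by the SPX+ or any AKCP software.

```python
POSITIONS = ("top", "middle", "bottom")


def front_rear_delta(front_c, rear_c):
    """Return rear minus front temperature at each sensor height."""
    return {pos: rear_c[pos] - front_c[pos] for pos in POSITIONS}


# Hypothetical readings for one cabinet, in degrees Celsius.
front = {"top": 24.0, "middle": 22.0, "bottom": 21.0}
rear = {"top": 37.0, "middle": 33.0, "bottom": 31.0}

deltas = front_rear_delta(front, rear)

# A large gap between the biggest and smallest differential can hint
# that airflow is not evenly distributed across the cabinet height.
spread = max(deltas.values()) - min(deltas.values())

for pos in POSITIONS:
    print(f"{pos}: differential {deltas[pos]:.1f} C")
print(f"spread across heights: {spread:.1f} C")
```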

Monitor up to 16 cabinets from a single IP address. With a 16-sensor-port SPX+, you use only 1U of rack space and save costs by needing only one base unit. Thermal map sensors can be extended up to 15 meters from the SPX+.
