Launched in 2011, the Open Compute Project (OCP) is an initiative whose founders hope it will become the standard for the future. To realize this objective, OCP welcomes a collaborative community to develop and redesign technologies that address the unprecedented demand on computing infrastructure.

The problem Facebook faced in 2009 became the catalyst for the redesign effort. With efficiency gains and cost reductions as concrete outcomes, OCP was formally established in 2011.

The basic tenets of OCP are efficiency, scalability, openness, and impact. With these in mind, stakeholders freely share ideas, which leads to more innovation and makes complex problems more manageable. OCP hopes to create commodity technology that fits every use case and stakeholder.

How Does the Open Compute Project Affect Data Centers?

Personal computing has evolved over the years. Rising demand for better networking and communication has pushed computing to another level. Its uses range from media to shopping to gaming, and most of them rely on remote servers to function. This has paved the way for the proliferation of data centers to handle the required computation and storage capacity.

As data demand rises rapidly, data centers must keep pace. This is what happened to Facebook in 2009. As the site gained users at an unprecedented rate, its computational requirements ballooned. Initially, Facebook leased space in colocation data centers and filled it with its own servers. However, with usage of the platform rising so sharply, the company needed to reduce operating costs, which meant bringing its data centers in house. This is where the evaluation of alternative, more efficient data center designs came in. The rationale behind the design is simple: develop the most efficient servers and data centers that can meet current and future data demands.

Open Compute Project Principles

- Electrical Design

Photo Credit: www.nextplatform.com

A crucial OCP decision was in the area of data center power distribution. A typical data center involves several power conversions: AC power is brought in from the electrical grid, converted to DC as it goes through the UPS systems, then converted back to AC for distribution to the racks. The rack-mounted equipment then converts this AC back to DC for its own use. Each of these conversions introduces inefficiencies. Instead, OCP has opted to bring DC voltage directly into the racks, minimizing the number of conversions.
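The cost of chained conversions can be illustrated with simple arithmetic: the end-to-end efficiency of stages in series is the product of the per-stage efficiencies. The following is a minimal Python sketch of that calculation. The stage names and efficiency values are illustrative assumptions, not measurements from OCP or from any specific data center.

```python
from math import prod


def chain_efficiency(stages):
    """Multiply per-stage efficiencies (each in (0, 1]) for stages in series."""
    for name, eff in stages:
        if not 0.0 < eff <= 1.0:
            raise ValueError(f"efficiency for {name!r} must be in (0, 1], got {eff}")
    return prod(eff for _, eff in stages)


# Hypothetical per-stage efficiencies, chosen only for illustration.
conventional = [
    ("grid AC -> DC (UPS input)", 0.95),
    ("DC -> AC (UPS output)", 0.95),
    ("AC -> DC (rack equipment power supply)", 0.90),
]
direct_dc = [
    ("grid AC -> DC (single conversion)", 0.95),
]

for label, stages in (("conventional", conventional), ("direct DC", direct_dc)):
    share = chain_efficiency(stages)
    print(f"{label}: {share:.1%} of input power reaches the load")
```

With these assumed values, the three-stage chain delivers roughly 81% of the input power, compared with 95% for a single conversion. Real figures depend on the equipment and the load.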

- Thermal Design

The aim of thermal management under the Open Compute principles is to use 100% outside air (free cooling). This approach has trade-offs, but closer investigation shows that the benefits outweigh the risks. Outside air cooling has a significant energy-efficiency advantage over chillers. However, data center location is a primary consideration when adopting this kind of air-side economization.

Another significant decision was to allow the data center’s temperature and humidity limits to go beyond the ASHRAE recommendation. In addition, hot aisles are contained, and a drop-ceiling plenum provides an efficient air return path. As a result, hot and cold air are kept from mixing.

- Building Design

The thermal management improvements change the building requirements of a data center. Full outside air economization eliminates the additional pipework usually installed under raised access flooring. With outside air circulating through the data center, slab-on-grade concrete floors are sufficient. The result is cost savings. Without an access floor, the data center can also be designed with increased building height. Because humidity is controlled, static-dissipative flooring is no longer needed.

It is important to note that the ceiling should use lightweight composite concrete. This decreases the structural load and serves as an important layer of environmental protection. Structural changes that promote more open space are also worth considering. The lighting system should use Power over Ethernet, which can simplify cabling and reduce power input. It is essential to integrate diagnostics for every fixture. Enabling temperature monitoring and occupancy sensors is one way to optimize cooling efficiency.

Fixing the distance between racks and battery cabinets is also key to better airflow circulation. Columns aligned with the back of the battery cabinets will not impede airflow, especially behind the servers.

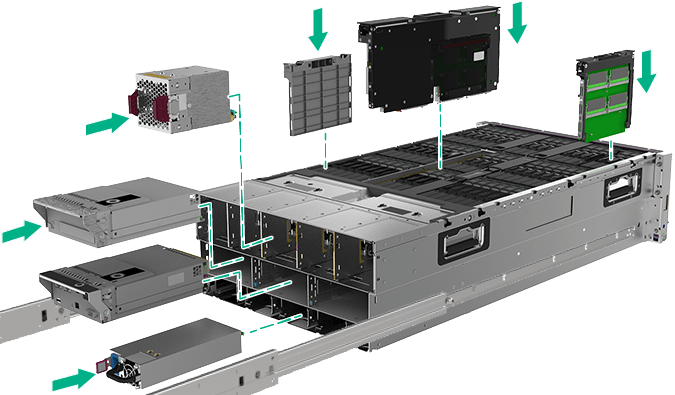

- Server Design

Because servers are a crucial part of the computing process, many design choices follow from the Open Compute Project principles. Server customization is one area that needs robust planning, but other features must also be taken into account.

As such, server design revolves around the following principles:

- Efficiency over aesthetics.

- Optimize for high-impact use cases rather than settling for a blanket approach across all applications.

- Promote serviceability, and limit service activity to the front of the server.

Facebook took several steps to optimize its server designs in line with these principles.

- Removing motherboard components that are not relevant to the application increased efficiency, improved airflow, and avoided additional costs. Developing low-cost, low-power alternatives that sustain server performance through customization is vital.

- All cables that need servicing (including network and power) are located at the front. They are kept to the minimum length that still provides enough access for maintenance activities. This limits clutter and makes servicing easier to manage.

- Arranging servers in three columns of 30, served by two 48-port switches, significantly improved the server-to-switch ratio. The switches are placed in a quick-release tray that accommodates standard 19″ / 1U equipment.
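The server-to-switch figures in the last point can be worked through as simple arithmetic. The sketch below is a hedged Python illustration: it assumes each of the three columns holds 30 servers and that each server uses one switch port. Both are assumptions made for the example, and the class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RackGroup:
    columns: int
    servers_per_column: int
    switches: int
    ports_per_switch: int

    @property
    def servers(self) -> int:
        return self.columns * self.servers_per_column

    @property
    def ports(self) -> int:
        return self.switches * self.ports_per_switch

    @property
    def servers_per_switch(self) -> float:
        return self.servers / self.switches

    @property
    def spare_ports(self) -> int:
        # Assumes exactly one switch port per server.
        return self.ports - self.servers


# Assumed reading of the text: 3 columns x 30 servers, two 48-port switches.
group = RackGroup(columns=3, servers_per_column=30, switches=2, ports_per_switch=48)

print(f"servers: {group.servers}")
print(f"switch ports: {group.ports}")
print(f"servers per switch: {group.servers_per_switch:.0f}")
print(f"spare ports: {group.spare_ports}")
```

Under these assumptions, 90 servers share 96 ports, which is 45 servers per switch with 6 ports left over for uplinks or spares.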

Enhancing Design Principles

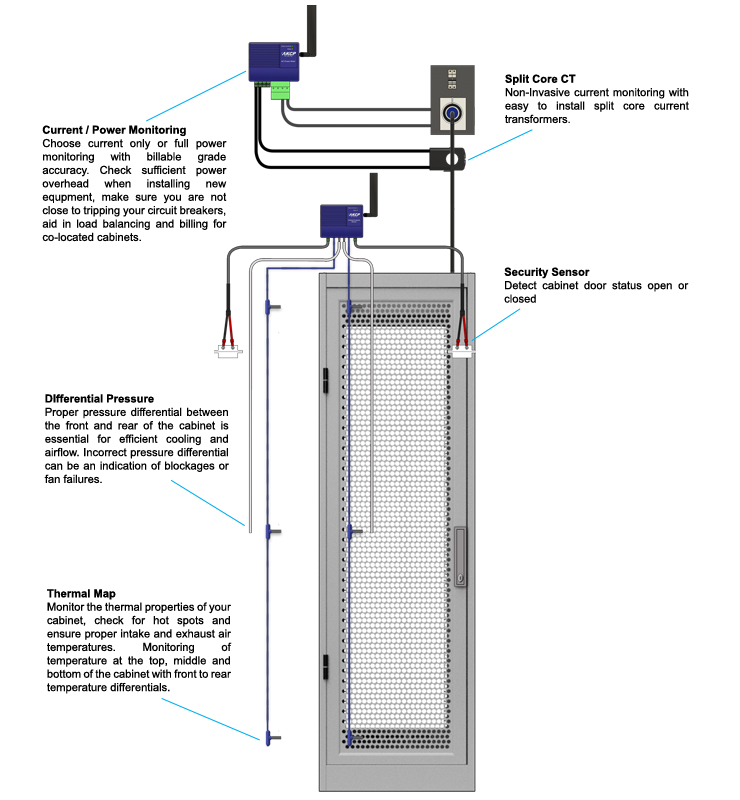

AKCP Wireless Smart Rack

These design changes all aim to improve a data center’s performance. The specifications are thoroughly evaluated and integrated to increase efficiency. One crucial aspect of the Open Compute Project is enabling scalability and setting standards in line with efficiency gains.

As with any scaling or standardization effort, monitoring is crucial. Data center monitoring solutions complement OCP endeavors. A robust monitoring solution helps sustain the drive for excellence and supports consistency in design approaches.

Monitoring solutions allow engineers to keep track of data center components. With a robust monitoring strategy, managing and regulating specific components becomes faster and easier. AKCPro Server is central monitoring and management software that works within the tenets of OCP. It is suitable for a wide range of monitoring applications and can monitor data centers in a single building or across remote sites.

Deploying AKCP wired and wireless sensors on specific components in the data center is an essential monitoring strategy. AKCPro Server can configure and monitor these deployed sensors. As a result, environmental, security, power, and access control monitoring can all be managed through the server. AKCP also offers the Rack+ solution for monitoring rack or aisle systems. Any changes made in a data center environment can be centrally monitored using the Rack+ system, regardless of design.
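The general idea of checking sensor readings against limits can be sketched in a few lines of Python. This is a generic illustration only: it does not use or represent the AKCPro Server API, and the sensor names, reading kinds, and threshold values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    sensor_id: str
    kind: str      # e.g. "temperature_c" or "humidity_pct" (hypothetical labels)
    value: float


# Hypothetical limits, chosen only for illustration.
LIMITS = {
    "temperature_c": (18.0, 35.0),
    "humidity_pct": (20.0, 80.0),
}


def out_of_range(readings, limits=LIMITS):
    """Return (reading, low, high) tuples for readings outside their limits."""
    alerts = []
    for reading in readings:
        if reading.kind not in limits:
            continue  # no limit configured for this kind of sensor
        low, high = limits[reading.kind]
        if not low <= reading.value <= high:
            alerts.append((reading, low, high))
    return alerts


sample = [
    Reading("rack-01-inlet", "temperature_c", 24.5),
    Reading("rack-02-inlet", "temperature_c", 37.2),
    Reading("aisle-a", "humidity_pct", 15.0),
]

for reading, low, high in out_of_range(sample):
    print(f"{reading.sensor_id}: {reading.kind}={reading.value} outside [{low}, {high}]")
```

In practice, the limits would come from the facility's own operating envelope rather than from fixed constants like these.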

The monitoring solutions from AKCP align with how OCP views the redesign of hardware technology. Keeping pace with innovation, these monitoring solutions are practical tools for optimizing efficiency and reducing cost. They are scalable yet suited to specific uses. Ultimately, they enable better outcomes for any technological development, especially in a data center.

As customization is one ingredient in the advancement of OCP, supplementing it with solutions that ensure efficient outcomes is only logical. In the end, if the scale justifies customization, it is always a good idea to validate it with data. This is where data collected from monitoring solutions is a vital resource.

The principles of OCP are simple: efficiency, scalability, openness, and impact. An Open Compute-ready data center needs to achieve all four. But only with strong support from an Open Compute-ready monitoring solution can it make great strides forward. Onward to innovation and excellence!

Reference Links:

www.opencompute.org/about

www.citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.259.8834&rep=rep1&type=pdf